Ep. 32 - Awakening from the Meaning Crisis - RR in the Brain, Insight, and Consciousness

(Sectioning and transcripts made by MeaningCrisis.co)


Transcript

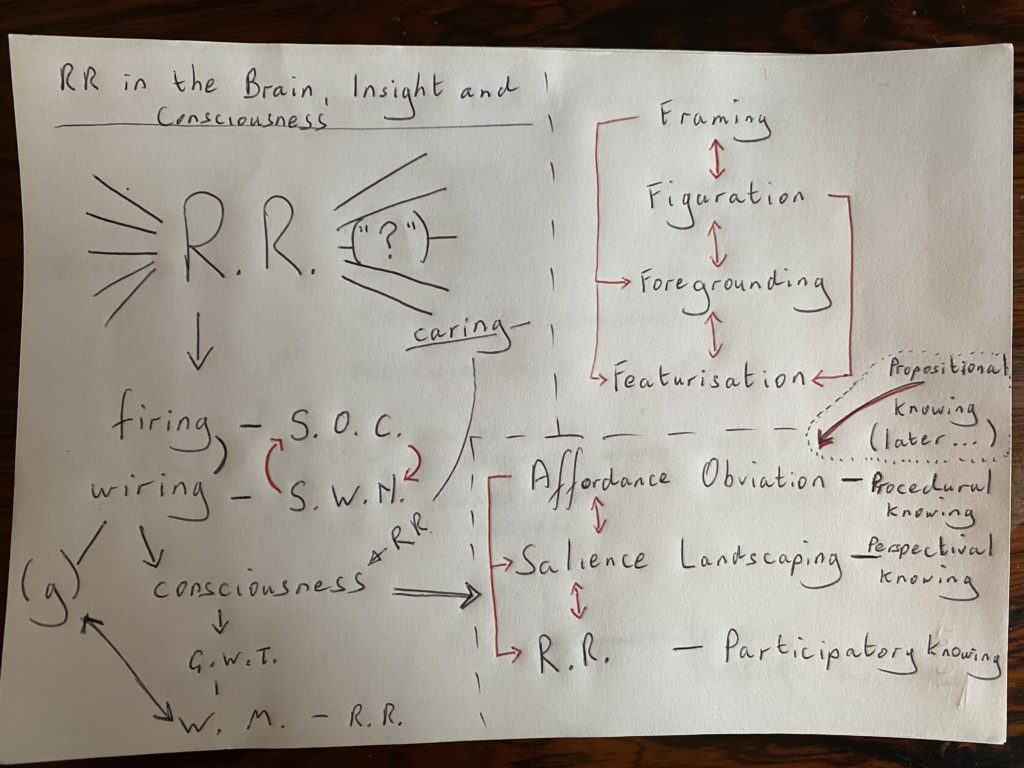

Welcome back to Awakening from the Meaning Crisis. So last time we were taking a look at the centrality of Relevance Realization, [at] how many central processes - central to our intelligence [and] possibly also to, at least the functionality of, our consciousness - presuppose, require [and] are dependent upon Relevance Realization. So we had gotten to a point where we saw how many things fed into this (convergent diagram drawn on the board, into RR), and then I made the argument that it is probably, at some fundamental level, a unified phenomenon because it comports well with the phenomenon of general intelligence, which is a very robust and reliable finding about human beings. And then I proposed to you that we need to do two things: we need to try and give a naturalistic account of this (RR), and then, if we have naturalised it, show whether we can use it in an elegant manner to explain a lot of the central features of human spirituality. And I already indicated in the last lecture how some of that was being strongly suggested; we got an account of self-transcendence that comes out of the dynamic emergence created by ongoing complexification. And this has to do with the very nature of Relevance Realization as this ongoing, evolving fittedness of your sensory-motor loop to its environment, under the virtual engineering of the bioeconomic logistical constraints of efficiency, which tends to compress, integrate and assimilate, and resiliency, which tends to particularise and differentiate. And when those are happening in such a dynamically coupled and integrated fashion within an ongoing opponent processing, then you get complexification that produces self-transcendence. But, of course, much more is needed.

Now I would like to proceed to address… Now I can't do this comprehensively (indicates RR in the convergence diagram on the board), not in a way that would satisfy everybody who is potentially watching this. This is very difficult because there are aspects of this argument that would get incredibly technical. Also, making the argument comprehensive is beyond what I think I have time to do here today. [-] I’ll put in notes, or point you to things you can read, if you want to go into it more deeply. What I want to do is give an exemplary argument, an example of how you could try to bring about a plausible naturalistic account of Relevance Realization. Now we've gone a long way towards doing that because we've already got this worked out in terms of information processing. But could we see these processes potentially realized in the brain? And, one more time, I want to advertise for the brain! I understand why people want to resist a simplistic reduction which says that human beings are nothing but their brain. That's a very bad way of talking! That's like saying a table is nothing but its atoms; that doesn't ultimately make any sense. It's also the structural functional organization of the atoms, the way that structural functional organization interacts with the world, and how it unfolds through time. So, simplistic reductionism should definitely be questioned. On the other hand, we also have to appreciate how incredibly complex, dynamic, self-creating, plastic and capable of [-] very significant qualitative development the brain actually is.

So, I proposed to you that [-] one aspect of Relevance Realization, the aspect that has to do with trading between being able to generalise and to specialise, is, as I've argued, a system going through compression - remember, that is something like what you're doing with a line of best fit - and particularization - when your function is more tightly fitted to the contextually specific dataset. (Writes compression and particularisation on the board with a vertical, double ended arrow between them.) And, again, this (compression) gives you efficiency, this (particularisation) gives you resiliency. This (compression) tends to integrate and assimilate. This (particularisation) tends to differentiate and accommodate. Okay, so try to keep that all in mind. Now, what I want to try and do is argue that there is suggestive evidence - it's by no means definitive, and I want it clearly understood that I am not proposing to prove anything here; that's not my endeavour. My endeavour is to show that there is suggestive evidence for something. And all I need is for that evidence to make it plausible that there will be a way to empirically explain Relevance Realization.
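The line-of-best-fit analogy can be made concrete. The sketch below is only an illustration, assuming a small made-up dataset and hypothetical function names: a least-squares line summarises six points with two parameters (compression), while a Lagrange interpolating polynomial is tailored to pass exactly through every one of them (particularization).

```python
# Illustrative sketch only: the data and the function names are hypothetical.

def fit_line(xs, ys):
    """Least-squares line of best fit: compresses the data into two numbers."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept

def fit_interpolant(xs, ys):
    """Lagrange interpolation: a curve fitted tightly to every individual point."""
    def f(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return f

def sse(model, xs, ys):
    """Sum of squared errors of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys))

# Roughly linear data with small, made-up deviations.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2 * x + d for x, d in zip(xs, [0.3, -0.2, 0.1, -0.4, 0.2, -0.1])]

line = fit_line(xs, ys)
curve = fit_interpolant(xs, ys)
print("line  (2 parameters):", sse(line, xs, ys))
print("curve (6 parameters):", sse(curve, xs, ys))
```

The interpolant has zero error on these particular points, while the line accepts some error in exchange for a compact, general summary; the lecture associates the first move with particularization and resiliency, the second with compression and efficiency.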

So, let's talk about what this looks like. There's increasing evidence that when neurones fire in synchrony together (writes => synchrony off compression), they're doing something like compression. So if, for example, you give somebody a picture that they can't quite make out and you look at how the brain is firing, the relevant areas (the visual cortex, if it's a visual picture) are firing sort of asynchronously, and then when the person gets the “Ahaaa!”, you get large areas that fire in synchrony together. Interestingly, there's even increasing evidence that when human beings are cooperating in joint attention and activity, their brains are getting into patterns of synchrony. So that opens up the possibility for a very serious account of distributed cognition. I'll come back to that much later. Now, what we know is going on in the cortex - and this is the point that I think is very important - is that this is scale invariant (writes this on the board to the left of particularisation). What that means is that you will see this process happening at many levels of analysis. Why is that important? Well, if you remember, Relevance Realization has to be something that's happening very locally [and] very globally; it has to be happening pervasively throughout all of your cognitive processing. So the fact that this (compression => synchrony) process I'm describing is also scale invariant in the brain suggests that it could be implementing Relevance Realization.
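One common way to put a number on synchrony among oscillating units is the Kuramoto order parameter: treat each unit's phase as a unit vector and take the length of their average. The lecture does not say which measure the cited studies used, so treat this as an assumed, simplified stand-in; values near 1 mean the units fire in step, values near 0 mean they are out of step.

```python
import cmath
import math

def order_parameter(phases):
    """Kuramoto order parameter r in [0, 1]: the length of the mean of exp(i * phase)."""
    mean_vector = sum(cmath.exp(1j * p) for p in phases) / len(phases)
    return abs(mean_vector)

synchronous = [0.5] * 8                                 # every unit at the same phase
asynchronous = [2 * math.pi * k / 8 for k in range(8)]  # phases spread evenly round the circle

print(round(order_parameter(synchronous), 3))
print(round(order_parameter(asynchronous), 3))
```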

Now, what happens (writes => asynchrony off particularisation, with a double ended arrow between synchrony and asynchrony) is that, at many levels of analysis, you [-] have this pattern where neurones fire in synchrony, then become asynchronous, then fire in synchrony again, then become asynchronous. And they're doing this in a rapidly oscillating manner. So this is an instance of what's called Self-Organizing Criticality (writes above the framework on the board). It’s a particular kind of opponent processing, a particular kind of self-organization. So we're getting more precision in our account of the potentially self-organizing nature of Relevance Realization. Okay. So let's talk a little bit about this (Self-organising Criticality) first, and then we'll come back to its particular instantiation in the brain.

Self Organising Criticality

So self-organizing criticality - this [originally goes] back to the work of Per Bak. Let's say you have grains of sand falling, like in an hourglass, and initially it's random - well, random from our point of view - where, within a zone, individual grains will end up. We don't know where because they'll bounce and all that, but over time, because there's a virtual engine there - friction and gravity, but also the bounce, so the bouncing introduces variation and the friction and [-] the gravity put constraint[s on…] - the sand grains self-organise (draws a little mound within a circle representing the above mentioned ‘zone’). There's no little elf that runs in and shapes the sand into a mound! It self-organises into a mound like that. And it keeps doing this and keeps doing this (draws progressively bigger mounds)… Now, at some point it enters a critical phase. Criticality means the system is close to breaking down. See, when it's self-organized like this (in a mound), it demonstrates a high degree of order. Order means that, as this mound takes shape, the position of any one grain of sand gives me a lot of information about where the other grains are likely to be, because they're so tightly organised; it's highly ordered. But then that order breaks down and you get an avalanche; it avalanches down and the system… And if this is too great, if the criticality becomes too great, the system will collapse. And so there are people who argue that civilizations collapse due to [-] what's called General Systems Failure, which [-] is when these entropic forces overwhelm the structure of the system and the system just collapses. So collapse is a possibility with criticality. However, what can happen is the following: the sand spreads out due to the avalanche.
And then that introduces variation, important changes in the structural functional organization of the sand mound, because now there is a bigger base. And what that means is that a new mound forms (draws a bigger mound on a bigger base/‘zone’), and it can go much higher than the previous mound; it has an emergent capacity that didn't exist in the previous system. And then it cycles like this, it cycles like this. Now at any point - again, there’s no telos to this - the criticality can overwhelm the system and it can collapse. The criticality within you can overwhelm the system and you can die.
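The sand-pile picture corresponds to the standard toy model of self-organized criticality introduced by Bak, Tang and Wiesenfeld. The sketch below is a minimal version under simplifying assumptions (a square grid, a site topples when it holds four grains, grains pushed past the edge are lost); the function names are hypothetical, and it is meant only to show order building up and being released in avalanches of widely varying size, not to model sand or neurones quantitatively.

```python
import random

def relax(grid):
    """Topple every site holding 4+ grains until the pile is stable; return the avalanche size."""
    n = len(grid)
    size = 0
    unstable = [(r, c) for r in range(n) for c in range(n) if grid[r][c] >= 4]
    while unstable:
        r, c = unstable.pop()
        if grid[r][c] < 4:
            continue                      # already relaxed by an earlier toppling
        grid[r][c] -= 4                   # the site topples...
        size += 1
        if grid[r][c] >= 4:
            unstable.append((r, c))
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < n and 0 <= cc < n:   # ...sending one grain to each neighbour;
                grid[rr][cc] += 1             # grains pushed past the edge are lost
                if grid[rr][cc] >= 4:
                    unstable.append((rr, cc))
    return size

def drop_grains(n=20, grains=5000, seed=0):
    """Drop grains one at a time at random sites, recording each avalanche's size."""
    rng = random.Random(seed)
    grid = [[0] * n for _ in range(n)]
    sizes = []
    for _ in range(grains):
        grid[rng.randrange(n)][rng.randrange(n)] += 1
        sizes.append(relax(grid))
    return grid, sizes

grid, sizes = drop_grains()
print("drops with no avalanche:", sizes.count(0))
print("largest avalanche (topplings):", max(sizes))
```

Most drops change nothing, while occasional drops set off large cascades; a common way to look for the heavy-tailed pattern associated with criticality is to plot a histogram of `sizes` on log-log axes.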

But what you see is [-] the brain cycling in this manner: Self-Organizing Criticality. The neurones structure together - that's like the mound [of sand] forming - and then they go asynchronous. This is sometimes even called a neural avalanche. And then they reconfigure into a new synchrony and then they go asynchronous. So do you see what's happening here? The brain is oscillating like this (sand diagrams), and what it's doing with self-organizing criticality is data compression; then it does a neural avalanche, which opens up and introduces variation into the system, which allows a new structure to reconfigure that is momentarily fitted to the situation. It breaks up… Now, do you see what it's doing? It's constantly, moment by moment - this is happening in milliseconds! - evolving its fittedness, complexifying its structural functional organization. It is doing compression and particularization, which means it's constantly, moment by moment, evolving its sensory-motor fittedness to the environment. It's doing Relevance Realization, I would argue.

Now, what does that mean? Well, one thing we should be careful of: when I'm doing this, again, I'm using words and gestures [-] of course, to convey and make sense, but what you have to understand is [that] this is happening at myriad levels! There's self-organizing criticality doing this fittedness at this level, and it's interacting with another instance doing it at this level, all the way up to the whole brain and all the way down to individual sets of neurones. So this is a highly recursive, highly complex, very dynamic evolving fittedness. And I would argue that it is thereby implementing Relevance Realization. There is some evidence to support this: Thatcher et al. did some important work in 2008 and 2009 pointing towards this. So here's the argument I'm making: RR can be implemented - it's not completely identical to it, because remember there's also exploration and exploitation - but it can be implemented by this (writes RR - SOC). And last time I also made the argument that Relevance Realization is your general intelligence (g) (writes (g)-RR-SOC). If this is correct, then we should see measurable relationships between these two ((g) and SOC). Of course, we've known how to measure this (g) psychometrically for a very long time, and now we're getting ways of measuring this (SOC) in the brain. And what Thatcher found was exactly that. Thatcher et al. found that there's a strong relationship between measures of self-organization and how intelligent you are. Specifically, what they found was that the more flexibility there is in this (synchrony <=> asynchrony), the more intelligent you are; the more it demonstrates a kind of dynamic ‘evolvability’, the more intelligent you are.

Is this a conclusive thing? No, there's lots of controversy around this and I don't want to misrepresent it. However, I would point out that there was a very good article by Hesse and Gross in 2014, a comprehensive review of the application of Self-Organizing Criticality as a fundamental property of neural systems. And they, I think, made a very good case that [-] it's highly plausible that self-organizing criticality is functional in the brain in a fundamental way, and that lines up [with], it's convergent with, this (indicates the board). So what we've got is the possibility, I mean… and this carries with it… so I'm hesitant here because, by drawing out the implications, I don't want to thereby say that this has been proven. I'm not saying that. But - remember the if - if this is right, this has important implications. It says that we may be able to move from psychometric measures of intelligence to direct measures in the brain - much more, in that sense, objective measures. Secondly, if this is on the right track - remember, a lot of these ideas were derived from emerging features of artificial intelligence - it may feed back into, and help develop, artificial intelligence. So there's a lot of potential here, unfortunately for both good and ill! If you'll allow me a brief aside: I'm hoping, by this project that I'm engaged in, that I and the people I work with (my lab and my colleagues) can link this emergent scientific understanding as tightly as we can to the spiritual project of addressing the meaning crisis, rather than letting it just run rampant, willy-nilly. Alright, so if you'll allow me, that's (convergent framework on the board) a way in which we could give a naturalistic account of RR in terms of how neurones are firing, [their] firing patterns.

Networks: From Neurones To Graph/Network Theory

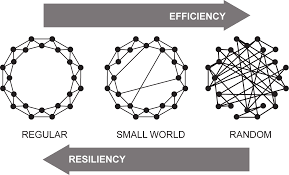

Now I need another scale-invariant thing, but I need it to deal not with how neurones are firing, but with how they're wiring - what kinds of networks they're forming. I'm not particularly happy with the wiring metaphor, but it has become pervasive in our culture and it's mnemonically useful because firing and wiring rhyme. So, again, there is a sort of new way of looking at networks; it's called Graph Theory or Network Theory. It's gotten very complex in a very short amount of time, so I want to go through just [-] the core, basic idea with you: that there are three kinds of networks.

All right (draws empty network points (nodes) on the board (3x6)), so this is [-] neutral: this doesn't just mean networks in the brain. It can be networks like the internet, an airline network or a railway system, et cetera. This analysis, this theoretical machinery, is applicable to all kinds of networks, which is part of its power.

So you want to talk about nodes - these are the things that are connected - and then you have connections. So these - I'm drawing two connections here; this isn't a single thick one (connecting two nodes with two connections in the first group of 6 nodes), these are two individual ones. Okay. Two individual connections here (completes first network). So there's the same number of nodes and connections in each network (completes the other two networks differently). (Shows included diagram onscreen.) So there are three kinds of networks. This is called a Regular Network (1st). It's regular because all of the connections are short distance connections. Okay. And you'll notice that there's a lot of redundancy in this network; everything is double connected. Okay. This is called a Random or Chaotic Network (2nd). It's a mixture of short and long connections. And then this is called a Small World Network (3rd). This comes from the Disney song, “It's A Small World After All”, because this was originally discovered by Milgram when he was studying patterns of social connectedness. And it's a small world after all.
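The three kinds of network can be generated in a few lines. This is an assumption-laden sketch, not the construction behind the board diagrams: the helper names are hypothetical, the Regular Network is a ring lattice, the Random Network draws the same number of links uniformly at random, and the Small World Network follows the Watts-Strogatz recipe of moving a small fraction of the lattice's links. Measuring each link's length around the ring shows the contrast described above: all short links in the regular network, a mix in the random one, mostly short with a few long shortcuts in the small world one.

```python
import random
from itertools import combinations

def ring_lattice(n, k):
    """Regular network: every node linked to its k // 2 nearest neighbours on each side."""
    return {frozenset((i, (i + j) % n)) for i in range(n) for j in range(1, k // 2 + 1)}

def random_network(n, m, rng):
    """Random network: m links drawn uniformly from all possible pairs of nodes."""
    return {frozenset(pair) for pair in rng.sample(list(combinations(range(n), 2)), m)}

def small_world(n, k, p, rng):
    """Watts-Strogatz recipe: move each lattice link to a random node with probability p."""
    edges = ring_lattice(n, k)
    for edge in sorted(edges, key=sorted):        # iterate over a fixed snapshot
        if rng.random() < p:
            u, _ = sorted(edge)
            options = [w for w in range(n) if w != u and frozenset((u, w)) not in edges]
            if options:
                edges.remove(edge)
                edges.add(frozenset((u, rng.choice(options))))
    return edges

def ring_distance(edge, n):
    """How far apart a link's two ends sit around the circle (short vs long connection)."""
    u, v = sorted(edge)
    return min(v - u, n - (v - u))

n, k = 30, 4
rng = random.Random(1)
networks = {
    "regular": ring_lattice(n, k),
    "random": random_network(n, n * k // 2, rng),
    "small world": small_world(n, k, 0.1, rng),
}
for name, edges in networks.items():
    long_links = sum(1 for e in edges if ring_distance(e, n) > k // 2)
    print(f"{name:12} nodes={n} links={len(edges)} long links={long_links}")
```

All three networks have the same nodes and the same number of links, as in the lecture's drawing; only the pattern of connection differs.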

Mutual Reinforcement Of Self Organising Criticality And Small World Networks

Now, originally people were just talking about these (indicates the three diagrams). It's now understood that these are [-] names for broad families of networks that can be analysed into many different subspecies, and I won't get into that detail because I'm just trying to make an overarching core argument. So, remember I said that this [Regular] network has a lot of redundancy in it? [-] That's really important because it means that this network is terrifically resilient. I can do a lot of damage to this network and no node gets isolated; nothing falls out of communication. It’s tremendously resilient. But you pay a price for all that redundancy: this is actually a very inefficient network. Now your brain might trick you, because that looks so well ordered. It looks like a nice clean room, and clean rooms look highly ordered, and you think, “Oh, this must be the most efficient, because cleanliness is orderliness and orderliness is efficient”, and you can't let that mislead you! You actually measure how efficient a network is by calculating what's called its Mean Path Distance. I calculate the number of steps [-] between all the pairs. So how many [-] steps do I have to go through to get from here to here? One, two… How many do I have to go [through] to get from here to here? One, two, three, four. I do that for all the pairs, and then I take the average. And the Mean Path Distance measures how efficient your network is at basically communicating information. These (Regular Networks) have a very, very high Mean Path Distance, so they're very inefficient. You pay a price for all that redundancy, and that's of course because redundancy and efficiency are in a tradeoff relationship.
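The Mean Path Distance calculation described here (in the network literature it is usually called the average, or characteristic, path length) can be sketched with a breadth-first search from every node. This minimal version assumes unweighted links, averages only over pairs that can reach each other, and uses a plain six-node ring rather than the doubly linked one on the board; all names are hypothetical. Cutting one link shows the trade-off in miniature: nothing is cut off, but paths get longer.

```python
from collections import deque

def shortest_hops(adjacency, start):
    """Breadth-first search: number of steps from start to every reachable node."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nb in adjacency[node]:
            if nb not in dist:
                dist[nb] = dist[node] + 1
                queue.append(nb)
    return dist

def mean_path_distance(adjacency):
    """Average number of steps over all pairs of nodes that can reach each other."""
    total = pairs = 0
    for node in adjacency:
        for other, d in shortest_hops(adjacency, node).items():
            if other != node:
                total += d
                pairs += 1
    return total / pairs

def is_connected(adjacency):
    """True if every node can reach every other node."""
    start = next(iter(adjacency))
    return len(shortest_hops(adjacency, start)) == len(adjacency)

def ring(n):
    """A simple regular network: n nodes in a circle, each linked to its two neighbours."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}

six = ring(6)
print(mean_path_distance(six))       # 1.8

# Resilience check: cut the 0-1 link and see whether anything falls out of communication.
damaged = {node: set(nbs) for node, nbs in six.items()}
damaged[0].discard(1)
damaged[1].discard(0)
print(is_connected(damaged))         # True
print(mean_path_distance(damaged))   # about 2.33
```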

Now this (indicates Random Network diagram) - and again here's where [-] your brain is going to [explode] - this is so messy, right? Well, it turns out that this is actually efficient. It's very efficient because it has so many long distance connections; it has a very low Mean Path Distance. But, because efficiency and resiliency are in a tradeoff relationship, it's not resilient; [it’s] very poor in resiliency. So notice what we're getting here: these networks are being constrained in their functionality by the tradeoff in the bioeconomics of efficiency and resiliency. Markus Brede has something like mathematical proofs about this in his work on network configuration. Now what about this one, the Small World Network? Well, it's more efficient than the Regular Network, but less efficient than the Random Network. And it's more resilient than the Random Network, but less resilient than the Regular Network. But you know what it is? It's optimal. It gets the optimal amount of both; it optimizes for efficiency and resiliency. Now that's interesting, because it would mean that if your brain is doing Relevance Realization by trading between efficiency and resiliency, it's going to tend to generate small world networks. And not only that, small world networks are going to be associated with the highest functionality in your brain. And there's increasing evidence that this is in fact the case. In fact, research done by Langer et al. in 2012 did something similar to what Thatcher did.
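The efficiency side of this trade-off, together with a rough proxy for the redundancy side, can be measured on generated networks. This sketch makes several assumptions that are not from Brede's work or the studies cited: networks are built with the Watts-Strogatz construction, rewiring every link (p = 1.0) stands in for a random network with the same number of links, and the clustering coefficient (how often a node's neighbours are also linked to each other, i.e. local backup routes) stands in for redundancy. All function names are hypothetical.

```python
import random
from collections import deque
from itertools import combinations

def ring_lattice(n, k):
    """Regular network: each node linked to its k // 2 nearest neighbours on each side."""
    return {frozenset((i, (i + j) % n)) for i in range(n) for j in range(1, k // 2 + 1)}

def rewire(edges, n, p, rng):
    """Return a copy where each link is moved to a random node with probability p."""
    edges = set(edges)
    for edge in sorted(edges, key=sorted):
        if rng.random() < p:
            u, _ = sorted(edge)
            options = [w for w in range(n) if w != u and frozenset((u, w)) not in edges]
            if options:
                edges.remove(edge)
                edges.add(frozenset((u, rng.choice(options))))
    return edges

def adjacency(edges, n):
    """Neighbour sets for every node."""
    adj = {i: set() for i in range(n)}
    for u, v in map(tuple, edges):
        adj[u].add(v)
        adj[v].add(u)
    return adj

def mean_path_length(adj):
    """Efficiency proxy: average BFS distance over all mutually reachable pairs."""
    total = pairs = 0
    for s in adj:
        dist, queue = {s: 0}, deque([s])
        while queue:
            x = queue.popleft()
            for y in adj[x]:
                if y not in dist:
                    dist[y] = dist[x] + 1
                    queue.append(y)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs

def clustering(adj):
    """Redundancy proxy: mean fraction of each node's neighbour pairs that are linked."""
    scores = []
    for nbs in adj.values():
        if len(nbs) < 2:
            scores.append(0.0)
            continue
        links = sum(1 for a, b in combinations(nbs, 2) if b in adj[a])
        scores.append(links / (len(nbs) * (len(nbs) - 1) / 2))
    return sum(scores) / len(scores)

n, k = 100, 4
rng = random.Random(42)
lattice = ring_lattice(n, k)
networks = {
    "regular": lattice,
    "small world": rewire(lattice, n, 0.1, rng),   # a few long shortcuts
    "random": rewire(lattice, n, 1.0, rng),        # every link moved
}
results = {}
for name, edges in networks.items():
    adj = adjacency(edges, n)
    results[name] = (mean_path_length(adj), clustering(adj))
    print(f"{name:12} mean path length={results[name][0]:5.2f} clustering={results[name][1]:.2f}")
```

In the Watts-Strogatz picture, the small world network typically keeps much of the regular network's clustering while its mean path length falls towards that of the random network, which is one way of reading "the optimal amount of both".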

So here we've got this again: RR is (g) ((g) - RR), and it looks like RR might be implemented by - this is what I'm putting here - Small World Networks (SWN) (writes this at the top of the board: (g) - RR - SWN). And what Langer et al. found is a relationship between these ((g) and SWN): the more your brain is wired like this, the better your intelligence. Again, is this conclusive? No, it's still controversial. That's precisely why it's cutting edge. However, increasingly we're finding that these kinds of patterns of organization make sense. Remember, Markus Brede was looking at just artificial networks, neural networks, where you want to optimise between these. So you're getting design arguments out of artificial intelligence [and] you're starting to get these arguments emerging out of neuroscience. Interestingly, Langer et al. did a second experiment in 2013: when you put extra effort, extra task demands, on working memory, you see that working memory becomes even more organised like a small world network. Hilger et al. in 2016 found that there was a specific kind of small world network having to do with efficient hubs. Their paper is entitled “Efficient hubs in the intelligent brain: Nodal efficiency of hub regions in the salience network are correlated with general intelligence”. So what seems to be going on is suggestive, not conclusive, but you've got the Langer work showing that working memory becomes more like this. And then you've got a very sophisticated kind, a species of this, in recent research, correlated with the salience network in the brain. Do you see that? As your brain moves towards a specific species of this (Small World Network) within the Salience Network, you become more intelligent. And the salience network is precisely the network by which things are salient to you, stand out for you, grab your attention.

One more time, is this conclusive? No. I'm presenting to you stuff that's literally happened in the last two or three years, and, as there should be, there's tremendous controversy in science. However, this is what I'm pretty confident of: that controversy is progressive. It's ongoing. It's getting better and better, such that it is plausible that we will be able to increasingly explain Relevance Realization in terms of the firing and the wiring, in a way that will be increasingly convergent with ongoing progress in artificial intelligence. Remember, the firing is Self-Organizing Criticality (Firing - SOC), and the wiring is Small World Networks (Wiring - SWN). And here's something else that's really suggestive: the more a system fires this way (SOC), the more it wires this way (SWN). If the system is firing in a self-organizing critical fashion, it will tend to network as a small world network. And the more it wires this way (SWN), the more it is wired like a small world network, the more likely it is to fire in this pattern (SOC).

These two things mutually reinforce each other's development. So remember - let's try to put this all together! I mean, it's hard to grasp this, I get it, but remember: this (SOC) is happening in a scale-invariant, massively recursive, complex, self-organizing fashion. This (SWN) is also happening in a scale-invariant, very complex, self-organizing, recursive fashion. And the two are deeply interpenetrating, affording and affecting each other in ways that have to do directly with engineering the evolving fittedness of your salience realization and your Relevance Realization within your sensory-motor interaction with the world. This is, I think, strongly suggestive that [-] this (RR) is going to be given a completely naturalistic explanation. Okay, notice what I'm doing here! I’m giving a theoretical structural functional organization (Firing - SOC <=> Wiring - SWN) for how this (RR) can operate. So [-] the last couple of times we had the strong convergence argument to this (RR). We have a naturalistic account (above looping framework) of this, or at least the rational promise that one is forthcoming. And then we're getting an idea of how we can get a structural functional organization of this (RR) in terms of firing and wiring machinery.

Possible Naturalistic Accounts of General Intelligence And Consciousness

Now this is again, like I said, both very exciting and potentially scary, because it carries with it the real potential to give a natural explanation of the fundamental guts of our intelligence. I want to go a little bit further and suggest that not only may this help to give us a naturalistic account of general intelligence, it may point towards a naturalistic account, at least of the functionality, but perhaps also of some of the phenomenology of consciousness (writes ->(g) and ->consciousness, both off the Firing <-> Wiring framework above). This again is even more controversial. But again, my endeavour here is not to convince you that this is the final account or theory. It's to make plausible the possibility of a naturalistic explanation.

Okay. So let's remember a couple of things. There's a deep relationship between consciousness - remember the global workspace theory (GWT) of its functionality - and working memory (WM); the two overlap a lot (both written off Consciousness). [-] And we already know that there are important overlaps in the [-] brain areas that have to do with general intelligence, working memory (connects (g) <=> WM), attention and salience, and also that measures of this ((g)) and measures of the functionality of this (WM) are highly correlated with each other. That's now pretty well established. We also know, from Lynn Hasher’s work, that this (WM) is doing Relevance Realization. Remember, I also gave you the argument, when we talked about the functionality of consciousness, that many of the best accounts of the function of consciousness say that it's doing Relevance Realization. And so this (framework below Firing/Wiring loop) should all hang together, such that the machinery of intelligence and the functionality of consciousness should be deeply integrated in terms of Relevance Realization.

We do know that there seem to be some important relationships between consciousness and self-organizing criticality (Consciousness - SOC). This has to do with the ongoing work of Cosmelli et al. and others; their work was in 2004. They did what's called a Binocular Rivalry Experiment. Basically, you present two images to somebody, positioned so that each eye receives a different image, and the images compete with each other because of their design. So what happens in people's visual experience - let's say it's a triangle and a cross - is that they'll experientially go, “I’m seeing a cross. Oh, no, I'm seeing a triangle! I'm seeing the cross and I'm seeing a triangle!!”, and this isn't obscure to you. You know the Necker cube, right? (Draws a cube.) When you watch the Necker cube, it flips, right? So this can be the front and it's going back this way, or you can flip and see it the other way [-], where this is the front and it goes that way, right? You are constantly flipping between these, and you can't see them both at the same time. So that's what Binocular Rivalry is like, except that you do it under more controlled conditions: you present the images separately to the two eyes, so different areas of the brain are picking them up… And so what you can see is: what happens when the person is seeing the triangle? Well, one part of the brain goes into synchrony, and then as soon as the triangle goes, that part goes asynchronous and the other part of the brain, the part that's picking up on the cross, [goes into synchrony] [-]. And what you can see is that, as the person flips back and forth in experience, different areas of the brain are going into synchrony or asynchrony. So that is suggestive of a relationship between consciousness and self-organizing criticality. Again, [only] suggestive.
But we’ve already got independent evidence, a lot of convergent evidence, that the functionality of consciousness is to do Relevance Realization, which explains its strong correlation, via working memory, with measures of general intelligence. And so we know that this (Consciousness <-RR (on the board)) is plausibly associated with self-organizing criticality.

Back To The Machinery Of Insight

So again, convincing? No. Suggestively convergent? Yes. There's another set of experiments, done by Monti et al. in 2013, in which you give people a general anaesthetic and then observe their brain as they pass out of consciousness and back into consciousness. And what did they find? They found that as the brain passes out of consciousness, it loses its overall structure as a Small World Network and breaks down into more local networks, and that as it returns to consciousness, it goes back into a Small World Network formation, so that consciousness seems to be strongly associated with the degree to which the brain is wiring as a Small World Network. Now I want to try and bring these together in a more concrete instance where you can see the intelligence, the consciousness, and this dynamic process of self-organization all at work.

I want to bring it back to the machinery of insight. If you remember, we talked about this when we talked about the use of disruptive strategies, and we talked about the work of Stephen and Dixon. Do you remember what they found? They found a very sophisticated, but nevertheless very reliable, way of measuring how much entropy is in people's processing when they're trying to solve an insight problem. Remember, they were tracing through the gear figures? And what they found is that entropy goes up right before the insight, and then it drops and the [behavior] becomes even more organised (draws a bell curve that drops lower on the right). Now, that's plausibly - and they suggested this - an instance of self-organizing criticality: what's happening is (works through the bell curve) you're getting the neural avalanche, it's breaking up, and that allows a restructuring, which goes with the restructuring of the problem. Remember, you're breaking frame with the neural avalanche and then you're making frame, like the new mound, as you restructure your problem framing. And you get the insight! And you get a solution to your problem. [So, this is linking insight to SOC, very clearly.]
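The entropy pattern described here (a rise before the insight, then a drop below the starting level) can be illustrated with Shannon entropy computed over successive windows of a behavioural sequence. This is a hypothetical stand-in: the action labels and window width are invented, the function names are not from any cited work, and this is not the very sophisticated measure Stephen and Dixon used.

```python
import math
from collections import Counter

def shannon_entropy(symbols):
    """Shannon entropy, in bits, of the symbol frequencies in a sequence."""
    counts = Counter(symbols)
    n = len(symbols)
    return sum((c / n) * math.log2(n / c) for c in counts.values())

def windowed_entropy(sequence, width):
    """Entropy of consecutive, non-overlapping windows of the given width."""
    return [shannon_entropy(sequence[i:i + width])
            for i in range(0, len(sequence) - width + 1, width)]

# Hypothetical action labels: an old habit, a burst of varied search, then a tighter new routine.
before = "ABABABAB"
search = "ABCDEFGH"
after = "AAAAAAAA"
print(windowed_entropy(before + search + after, 8))   # [1.0, 3.0, 0.0]
```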

[Now, interestingly enough, Schilling has a mathematical] model from 2005 linking insight to Small World Networks. She argues quite persuasively - and this is very interesting - that what you can see happening in an insight is that people's information is initially organised like a regular network! Just think about that intuitively: my information is sort of integrated here, right (draws a little circle of dots)? Local organization, a regular network. Local organization, right (draws a second little circle of dots)? So, overall, all I've got is a regular network. But here's my regular network (draws bigger, simple, six node diagram), and what can happen is that with one of these I get a long distance connection forming (each node is connected to the ones beside it with double connections, except for one long one across the middle). So my regular network is suddenly altered into a Small World Network, which means I lose some resiliency, but I gain a massive spike in efficiency. I suddenly get more powerful. So insight is when a Regular Network is converted into a Small World Network, and that means this is a process of optimisation (Regular Network => SWN), because, remember, this (SWN) is more optimal than this (Regular Network). And you can see that in how people's information is organised in an insight. Think about how metaphor affords insight: you take two domains, “Sam is a pig”, you suddenly [get] this connection between [them], and those two Regular Networks are coalesced into a Small World Network.
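The six-node drawing can be checked directly. In this hypothetical sketch (not Schilling's model itself), each neighbouring pair starts with two parallel links, as on the board; one of the two links between nodes 0 and 1 is then moved so that it connects 0 and 3 across the middle. The node labels and the choice of which link to move are illustrative assumptions. The Mean Path Distance drops from 1.8 to about 1.67, while two links (the thinned 0-1 pair and the new long link) are left without a backup.

```python
from collections import Counter, deque

def ring_with_double_links(n):
    """Each neighbouring pair of nodes joined by two parallel links."""
    return Counter({frozenset((i, (i + 1) % n)): 2 for i in range(n)})

def mean_path_distance(links, n):
    """Average steps between all node pairs (parallel links do not shorten paths)."""
    adj = {i: set() for i in range(n)}
    for link, count in links.items():
        if count > 0:
            u, v = tuple(link)
            adj[u].add(v)
            adj[v].add(u)
    total = pairs = 0
    for s in range(n):
        dist, queue = {s: 0}, deque([s])
        while queue:
            x = queue.popleft()
            for y in adj[x]:
                if y not in dist:
                    dist[y] = dist[x] + 1
                    queue.append(y)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs

def single_linked_pairs(links):
    """Number of connected node pairs with no redundant backup link."""
    return sum(1 for count in links.values() if count == 1)

n = 6
before = ring_with_double_links(n)
after = before.copy()
after[frozenset((0, 1))] -= 1        # take one of the two parallel 0-1 links...
after[frozenset((0, 3))] += 1        # ...and reconnect it across the middle

print(mean_path_distance(before, n), single_linked_pairs(before))   # 1.8 0
print(mean_path_distance(after, n), single_linked_pairs(after))     # about 1.67, 2
```

On six nodes the efficiency gain is modest; in larger regular networks a single long shortcut shortens many more paths, which is why a few such connections can make a large difference.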

Okay, that's great! So, in some of the work I've done with other people, I've been suggesting the following because of this (SOC <=> SWN): what happens in insight - à la Stephen and Dixon - is that you get Self-Organized Criticality, and that Self-Organized Criticality breaks up a Regular Network and converts it into a Small World Network. So what you're getting is a sudden enhancement, an increased optimisation, of your Relevance Realization - and what's it accompanied by? It's accompanied by a flash in salience! Remember? And then that could be extended in the flow experience. You're getting an alteration of consciousness, an alteration of your intelligence, an optimisation of your fittedness to the problem space. Okay. (Wipes board clean except for the expanded convergence framework.) I'm going to say this again, right? I'm trying to give you stuff that makes this plausible. I'm sure that in its specifics it's going to turn out to be false, because that's how science works, but that's not what I need right now. What I'm trying to show you is how progressive the project of naturalising this is (indicates the framework on the board), and how so much is converging towards it that it is plausible this will be something we can scientifically explain. And more than scientifically explain: something we'll be able to create as we create autonomous Artificial General Intelligence.

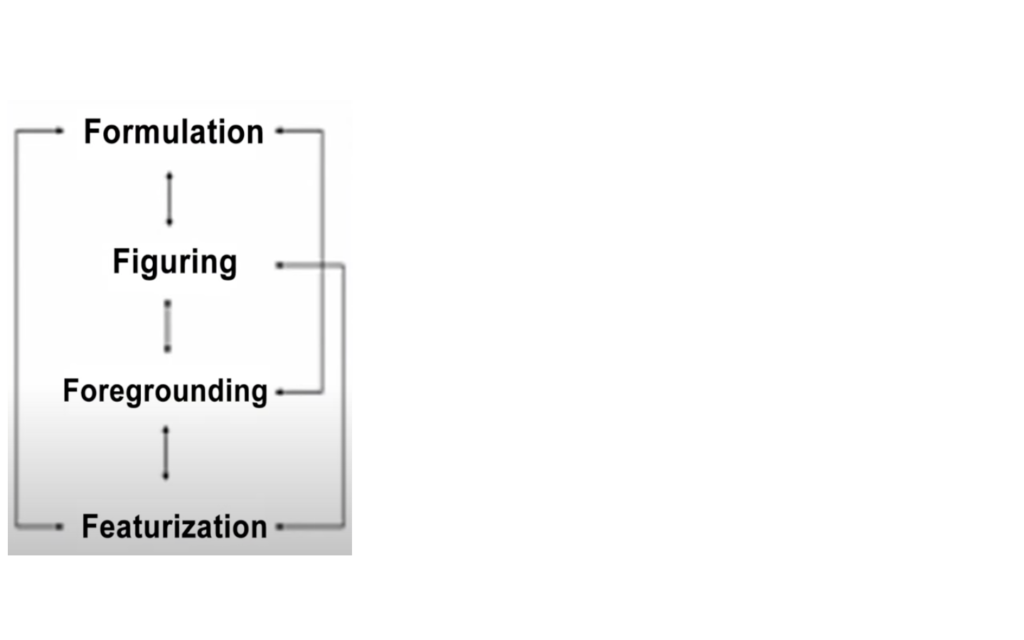

RR, Consciousness And Our Salience Landscape

Okay. Let’s return. If I've at least made it plausible that there's a deep connection between Relevance Realization and Consciousness, I want to point out some aspects of Relevance Realization and why it creates a tremendously textured, dynamically flowing salience landscape. Remember that Relevance Realization is happening at multiple interacting levels. So we can think about this where (writes featurization on the board) you're just getting features that are getting picked up. Remember the multiple object tracking (taps a few objects around). [-] ‘This’, ‘this’, ‘this’ (individually holds up some pens): so, basic salience assignment, right? [-] This is based on work originally from Matson in 1976, his book on salience, which I’ve mentioned before, and then some work that I did with Jeff Marshman and Steve Pearce?, and then later work that I did with Anderson Todd and Richard Wu. The featurization is also feeding up into foregrounding, and foregrounding is feeding back, right? (Writes foregrounding above featurization with a double-ended arrow between.) So there's a bunch of ‘this’, ‘this’, ‘this’, all these features, and then presumably I'm foregrounded and other stuff is backgrounded. This (foregrounding) then feeds up into figuration (double-arrowed above foregrounding). You're configuring me together and figuring me out - think of that language, right? - so that I have a structural functional organization. I'm aspectualized for you.

That's feeding back (figuration <=> foregrounding). And of course there's feedback down to here (figuration <=> featurization). And then that, of course, feeds back to [-] framing, how you're framing your problems (framing/formulation double-arrowed above figuration). We've talked a lot about that, and that feeds back [to featurization]. So you've got all this happening, and it's giving you this very dynamic and textured salience landscape.

And then you have to think about how that's the core machinery of your Perspectival Knowing. Notice what I'm suggesting to you here: Relevance Realization is the core machinery of your Participatory Knowing - it's how you are getting coupled to the world so that co-evolution, reciprocal realization, can occur. That's your Participatory Knowing (RR - Participatory Knowing). This (RR) feeds up to, and is fed back by, your Salience Landscaping (RR <=> Salience Landscaping). This is your Perspectival Knowing (Salience Landscaping - Perspectival Knowing). This is what gives you your dynamic situational awareness, this textured Salience Landscaping (gestures to the four-levelled diagram above). This (Salience Landscaping) is, of course, going to open up an affordance landscape for you - and we'll talk more about that. Certain connections, certain affordances, are going to become obvious to you. And you say, “Oh man, does anybody… like, this is so abstract!” But this is how people are trying to wrestle with this now! Here's an article from Frontiers In Human Neuroscience: “Self-Organizing Free Energy Minimization…” - that's Friston's work, and it has to do, ultimately, with [-] getting your processing as efficient as possible - “…And Optimal Grip On A Field Of Affordances,” using all of this language that I am using with you right now. That's by Bruineberg and Rietveld, from 2014, in “Frontiers In Human Neuroscience”. Just as one example among many!

So this (Salience Landscaping) is feeding up, and what it's basically giving you is Affordance Obviation (Salience Landscaping <=> Affordance Obviation). Certain affordances are being selected and made obvious to you. That, of course, is going to be the basis of your Procedural Knowing, knowing how to interact (Affordance Obviation - Procedural Knowing). And I think there might be a way in which that interacts more directly here (Affordance Obviation -> Salience Landscaping & -> RR), maybe through kinds of implicit learning, but I'm not going to go into that. We'll come back later to how Propositional Knowing relates to all of this (Affordance Obviation + <- Propositional Knowing). I'm putting it aside because this (RR <-> Salience Landscaping <-> Affordance Obviation) is where we do most of our talking about consciousness. At the core of this (Salience Landscaping), I think, is the Perspectival Knowing. But it's a Perspectival Knowing that's grounded in our Participatory Knowing, and it's our Perspectival Knowing…/ look: your situational awareness that obviates affordances is what you need in order to train your skills. That's how you train your skills. And we know that consciousness is about doing this higher-order Relevance Realization, because that's what this is (framework on the board) - this is higher-order Relevance Realization that affords you solving your problems.

Three Dimensions of Salience Landscaping

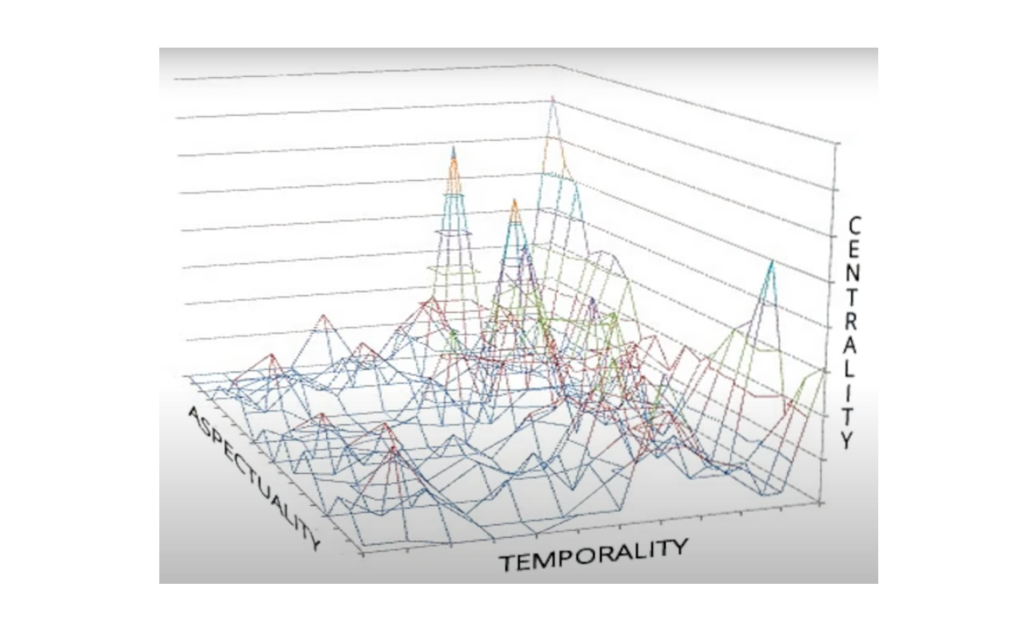

So this is… I mean, I'm trying to say I need all of this (gestures to include both the above frameworks) when I'm talking about your Salience Landscaping. I'm talking about it as the nexus between your Relevance Realization [&] Participatory Knowing and your Affordance Obviation [&] Procedural Knowing - your skill development - with Perspectival Knowing at the core, and what's happening in here is this (indicates the Featurization framework). If that's the case, then you can think of your Salience Landscape as having at least three dimensions. (Draws X, Y, Z axes on the board; they are also shown on-screen, in more detail and with differently labelled axes.)

So one is pretty obvious to you, which is aspectuality (X axis). As I said, your salience landscape is aspectualizing things (picks up a marker pen…): the features are being foregrounded and configured and they're being framed. So this is a marker. It is aspectualized. Remember? Whenever I'm representing or categorizing it, I'm not capturing all of its properties; I’m just capturing an aspect. So this is aspectualized - everything is aspectualized for me.

There's another dimension here: centrality (Y axis). I'll come back to this later, but this has to do with the way Relevance Realization works. Relevance Realization is ultimately grounded in how things are relevant to you, right? Literally, how they are important to you. You “import” how they are connotative…/ At some level, the sensory-motor stuff is there to get you stuff [that] you literally need to import materially, and then, at a higher level, you literally need to import information to be constitutive of your cognition. We'll come back to that transition later. But what you have in Perspectival Knowing is that everything is aspectualized, and then everything is centred. It's not non-valenced; it’s vectored onto me. And then it has temporality (Z axis), because this is a dynamic process of ongoing evolution. Timing, small differences in time, makes huge differences in such dynamical processing. Kairos is really, really central. When you're intervening in these very com[plex], massively recursive, dynamically coupled systems, small variations can unexpectedly produce major changes. So things have a central relevance in terms of their timing, not just their place in time. So think of your Salience Landscape as unfolding in these three dimensions of Aspectuality, Centrality and Temporality.

There's an acronym here: ACT. This is an enACTed kind of Perspectival Knowing. All right, so you've got consciousness, and what it's doing for me, functionally, is all of this (indicates expanded convergence framework of RR), but what it's doing, in that functionality, is all of this (both other frameworks - Featurization + & RR-Participatory Knowing +). And what that's giving me is Perspectival Knowing (gestures up) that's grounded in Participatory Knowing (gestures down), that affords Procedural Training (gestures to the middle ground), and that has a Salience Landscape with Aspectuality, Centrality and Temporality.

It has… look at what it has. Centrality is the ‘hereness’ (adds hereness to Centrality on X, Y, Z): my consciousness is ‘here’, because it is indexed on me. Of course it has ‘nowness’, because timing is central to it (adds nowness to Temporality on X, Y, Z). Now, that was intended, that move (gestures to hereness and nowness having been added to the graph). And it has ‘togetherness’ (adds togetherness to Aspectuality on X, Y, Z): unity, how everything fits together. I don't want to say unity, because unity makes it sound like there's a single thing, but there's a ‘oneness’ to your consciousness; it’s all together. You have the hereness, the nowness, the togetherness (indicates the X, Y, Z graph), the salience, the Perspectival Knowing (indicates RR - Perspectival + framework), how it is centred on you… A lot of the phenomenology of your consciousness is explained along with the functionality of your consciousness. Is that a complete account? No, but it's a lot of what your consciousness does and is.

Getting Ready To Complete RR Convergent Framework (*?*)

I would argue that what that gives us, at least, is an account that we are going to need for the right-hand side of the diagram: why altering states of consciousness can have such a profound effect, reaching down to your identity (RR - Participatory Knowing) and up into your agency (Affordance Obviation - Procedural Knowing); why it could be linked to things like a profound sense of insight. We talked about this before, when we talked about higher states of consciousness: how it can feel like a dramatic coupling to your environment (tapping RR - Participatory Knowing). That's the participatory coupling that we found in flow. This all, I think, hangs together extremely well, which means it looks like I have the machinery I need to talk about that right-hand [part] of the [convergence] diagram (*?*).

Before I do that, I want to make a couple of important points to remind you of things. Relevance Realization is not cold calculation. It is always about how your body is making risky, affect-laden choices about what to do with its precious but limited cognitive, metabolic and temporal resources. Relevance Realization is deeply, always - and think about how this (X, Y, Z graph) also connects to this and to consciousness - an aspect of caring (adds caring to the expanded RR convergence framework). That's what Read Montague, the neuroscientist, argues in his book “Your Brain Is Almost Perfect”: what makes us fundamentally different from computers, because we are in the finitary predicament, is that we care about our information processing and about the information processed therein. So this is always affective. Things are salient! They're catching your attention! They're arousing; they're changing your level of arousal - remember how arousal is an ongoing, evolving part of this? And they are constantly creating affect, motivation, moving, emotion, moving you towards action. You have to hear how, at the guts of consciousness [and] intelligen[ce], there is also caring. That's very important, because it brings back, I think, a central notion from Heidegger - and I know many of you are wondering why I haven't spoken about him yet, but I'm going to speak about him later - that at the core of our being in the world (indicates RR - Participatory Knowing +) is a foundational kind of caring. And this connection I'm making is not farfetched!

Look at somebody [who was] deeply influenced by Heidegger and who was central to the third generation, or 4E, cognitive science! That's the work of Dreyfus and others, and Dreyfus has played an important historical role in reminding us that our Knowing is not just Propositional Knowing; it's also Procedural and, ultimately I think, Perspectival and Participatory - he doesn't quite use that language, but he points towards it. He talks a lot about Optimal Gripping, and, importantly, if you take a look at his work on Heidegger, “Being In The World”, when he's talking about things like caring, he invokes, in central passages, the notion of relevance. Relevance. And when he wrote about what computers can't do (“What Computers Can't Do”) and, later on, what computers still can't do (“What Computers Still Can't Do”), what they're basically lacking is this Heideggerian machinery of caring, which he explicates in “Being In The World” in terms of the ability to find things relevant. And this, of course, points again towards Heidegger's notion of Dasein: that our being in the world - to use my language - is inherently transjective, because all of this machinery is inherently transjective. And it is something that we do not make. We and our intelligible world co-emerge from it. We participate in it, and next time I want to take a closer look at what that means for our spirituality (gestures that this belongs in the empty part of the convergent diagram (*?*)).

Thank you very much for your time and attention.

END

Episode 32 - Notes

Per Bak - Self-Organising Criticality

Per Bak was a Danish theoretical physicist who coauthored the 1987 academic paper that coined the term "self-organized criticality."

Self-Organized Criticality

Self-organized criticality is a property of dynamical systems that have a critical point as an attractor.
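The definition can be made concrete with the sandpile model that Bak and his coauthors introduced in that 1987 paper. Below is a minimal Python sketch of it; the grid size, number of grains and random seed are arbitrary choices, and it models grains of sand, not neurons. Grains are dropped one at a time on random sites; any site holding four or more grains topples, sending one grain to each of its four neighbours (grains pushed over the edge are lost), which can set off further topplings: an avalanche. No parameter is tuned by hand; the pile drives itself into a critical state where most drops cause little or nothing and occasional drops trigger avalanches that sweep much of the grid.

```python
import random

THRESHOLD = 4  # a site topples once it holds this many grains (one per neighbour)


def drop_grain(grid, rng):
    """Drop one grain on a random site, relax the pile, and return the avalanche size.

    Avalanche size is counted as the number of topplings the drop triggers.
    """
    size = len(grid)
    r, c = rng.randrange(size), rng.randrange(size)
    grid[r][c] += 1
    unstable = [(r, c)]
    topplings = 0
    while unstable:
        i, j = unstable.pop()
        if grid[i][j] < THRESHOLD:
            continue  # already relaxed, or never reached the threshold
        grid[i][j] -= THRESHOLD
        topplings += 1
        unstable.append((i, j))  # re-check: it may still hold THRESHOLD or more
        for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
            if 0 <= ni < size and 0 <= nj < size:  # grains pushed off the edge are lost
                grid[ni][nj] += 1
                unstable.append((ni, nj))
    return topplings


def run_sandpile(size=15, grains=10_000, seed=0):
    """Drop `grains` grains on an empty size x size grid; return the grid and avalanche sizes."""
    rng = random.Random(seed)
    grid = [[0] * size for _ in range(size)]
    avalanches = [drop_grain(grid, rng) for _ in range(grains)]
    return grid, avalanches


grid, avalanches = run_sandpile()
print("largest avalanche:", max(avalanches), "topplings")
print("drops that toppled nothing:", avalanches.count(0))
```

Tallying `avalanches` into a histogram of sizes is the usual way to look for the heavy-tailed distribution, many tiny avalanches and a few huge ones, that marks the critical state.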

Thatcher et al.

Hess and Gross in 2014

Langer et al. in 2012

Hilger et al. in 2016 “Efficient hubs in the intelligent brain, nodal efficiency of hub regions in the salience network are correlated with general intelligence”

Lynn Hasher

Lynn Hasher is a cognitive scientist known for research on attention, working memory, and inhibitory control. Hasher is Professor Emerita in the Psychology Department at the University of Toronto and Senior Scientist at the Rotman Research Institute at Baycrest Centre for Geriatric Care.

Cosmelli et al. - Binocular Rivalry Experiment - 2004

Manipulative approaches to human brain dynamics

Monti et al - experiments in 2013

Stephen and Dixon - Disruptive Strategies

The Architecture of Cognition: Rethinking Fodor and Pylyshyn's Systematicity Challenge

Matson on salience 1976

"And then this is based on work originally from Matson in 1976, his book on salience"

Here's an article from Frontiers In Human Neuroscience: “Self-Organizing Free Energy Minimization And Optimal Grip On A Field Of Affordances” by Bruineberg and Rietveld from 2014

Read Montague (neuroscientist) - “Your Brain Is Almost Perfect”

Heidegger

Martin Heidegger was a German philosopher who is widely regarded as one of the most important philosophers of the 20th century. He is best known for contributions to phenomenology, hermeneutics, and existentialism.

Dreyfus

“Being In The World” on Heidegger

Heidegger uses the expression Dasein to refer to the experience of being that is peculiar to human beings. Thus it is a form of being that is aware of and must confront such issues as personhood, mortality and the dilemma or paradox of living in relationship with other humans while being ultimately alone with oneself.

Other helpful resources about this episode:

Notes on Bevry

Additional Notes on Bevry