Ep. 31 - Awakening from the Meaning Crisis - Embodied-Embedded RR as Dynamical-Developmental GI

(Sectioning and transcripts made by MeaningCrisis.co)

Transcript

Welcome back to Awakening from the Meaning Crisis. This is episode 31. So last time we were trying to progress in an attempt to give at least a plausible suggestion of a scientific theory of how we could explain relevance realization. And one of the things we examined was the distinction between a theory of relevance and a theory of relevance realization. And I made the argument that we cannot have a scientific theory of relevance, precisely because of a lack of systematic import, but we can have a theory of relevance realization. And then I gave you the analogy for that, and I'm building towards something stronger than an analogy: Darwin's theory of evolution by natural selection, in which Darwin proposed a virtual engine that regulates the reproductive cycle so that the system constantly evolves the biological fittedness of organisms to a constantly changing environment. And then the analogy is [that] there is a virtual engine in the embodied brain - and why it’s an embodied, embedded brain will become clear in this lecture - but there is a virtual engine that regulates the sensory-motor loop so that my cognitive interactional fittedness is constantly being shaped. It's constantly evolving to deal with a constantly changing environment. And what I in fact need, as I argued, is a system of constraints, because I'm trying, between selective and enabling constraints, to limit and zero in on relevant information.

And then I was trying to argue that the way in which that operates - we saw that [-] needs to be sort of related to an autopoietic system - and then the way that operates, the self-organization, I suggested, operates in terms of a design that you see at many scales - and we need, remember, a multi-scalar theory in terms of your biological and cognitive organization. And that's in terms of Opponent Processing. And we took a look at the opponent processing within the autonomic nervous system that is constantly, by this strong analogy, evolving your level of arousal to the environment; opposing goals, but inter-related function. Then I proposed to you that we could look for the kinds of properties that we're going to be talking about, the level at which we're going to be pitching a theory of relevance realization, which is the theory of bioeconomic properties that are operating, not according to normativity of truth or validity, not logical normativity, but logistical normativity. And the two most important logistical norms, I would propose to you, are efficiency and resiliency.

And then I made an argument that they would be susceptible to opponent processing precisely because they are in a tradeoff relationship with each other, and that if we could get a cognitive virtual engine that regulates the sensory-motor loop by systematically playing off selective constraints on efficiency - selective logistical economic constraints on efficiency - and enabling economic constraints on resiliency, then we could give an explanation, a theory deeply analogous to Darwin's theory of the evolution across individuals of biological fittedness: we could give an account of the cognitive evolution, within an individual's cognition, of their cognitive interactional fittedness; the way they are shaping the problem space so as to adaptively be well-fitted to achieving their interactional goals with the environment.

Relevance Detection VS Relevance Projection And Resisting Both

Before I move on to try and make that more specific and make some suggestions of how this might be realized in the neural machinery of brains, I want to point out why I keep emphasising this embodied embedded. And I want to say a little bit more about this, because I also want to return to something I promised to return to: why I want to resist both an empiricist notion of relevance detection and a romantic notion of relevance projection.

So the first thing is, why am I saying “embodied”? Because what I've been trying to argue is [that] there is a deep dependency, a deep connection - and the dependency runs from propositional down through to participatory - but there is a deep dependency between your cognitive agency as an intelligent general problem solver and the fact that your brain exists within a bio-economy. The body is not Cartesian clay that we drag around and shape according to the whims or desires of our totally self-enclosed, for Descartes, immaterial minds.

The body is not a useless appendage. It is not just a vehicle. So even here I'm criticising certain platonic models! The body is an autopoietic bio-economy that makes your cognition possible. Without an autopoietic bio-economy you do not have the machinery necessary for the ongoing evolution of relevance realization. The body is constitutive of your cognitive agency in a profound way. Why “embedded”? And this will also lead us into the rejection of both an empiricist and a romantic interpretation. The biological fittedness of a creature is not a property of the creature per se. It is a real relation between the creature and its environment. Is a great white shark intrinsically adapted? No! It makes no sense to ask that question, because if I take this supposedly apex predator, really adapted, and put it in the Sahara desert, it dies within minutes, right? Its adaptivity is not a property intrinsic to it per se. Its adaptivity is not something that it detects in the environment! Its adaptivity is a real relation, an affordance, between it and the environment. In a similar way, I would argue that while relevance is not a property in the object, it is also not a property of the subjectivity of my mind. It is neither a property of objectivity nor a property of subjectivity. It is precisely a property that is co-created by how the environment and the embodied brain are fitted together in a dynamic, evolving fashion. It is very much like the bottle being graspable (picks up his water bottle to demonstrate); this is not a property of the bottle nor a property of my hand, but a real relation, a real relation, in how they can be fitted together, function together. So I would argue [that] we should not see relevance as something that we subjectively project, as the romantic claims. We should not see relevance as something we merely detect from the objectivity of objects, as perhaps we might if we had an empiricist bent.

I want to propose a term to you. I want to argue that relevance is, in this sense, transjective (writes this on the board). It is a real relationship between the organism and its environment. We should not think of it as being projected. We should not think of it as being detected. This is why I've consistently used the term… We should think of relevance as being realized, because the point about the term realization is it has two aspects to it. And I'm trying to triangulate from those two aspects. What do I mean by that? There is an objective sense to realization (writes Objective Realization below and to the right of transjective), which is to “make real”, and if that's not an objective thing, [then] I don't know what counts! “Making real”, that's objective! But of course there is a subjective sense to realization (writes Subjective Realization below and to the left of transjective), which is “coming into awareness”, and I'm using both these words, I'm using both these senses of the same word (connects both realizations with a line (base of a triangle)) - I'm not equivocating - I am trying to triangulate to the transjectivity of relevance realization (completes the triangle). That is why I'm talking about something that is both embodied, necessarily so, and embedded, necessarily so! Notice how non-, or perhaps better, anti-Cartesian this is! The connection between mind - if what you mean by ‘mind’ is your capacity for consciousness and cognition - and body is one of dependence, of constitutive need. Your mind needs your body. We're also talking, not only about it being embodied [and] embedded, it is inherently a [transactional] relation of relevance realization. The world and the organism are co-creating, co-determining, co-evolving the fittedness.

All right, let's now return to it: the proposal. Now… before we return, notice what this is telling us. This is telling us that a lot of the grammar by which we try to talk about ourselves and our relationship to reality - the subjective, the objective… both of these are reifying, and they are inherence claims! They are the idea that relevance is a thing that has an essence that inheres in the subject, or relevance is a thing that has an essence that inheres in the object. Both of those, that standard grammar and the adversarial, partisan debates we often have, I am arguing need to be transcended (indicates ‘transjective’), need to be transcended. And I would then propose to you that that's going to have a fundamental impact on how we interpret spirituality, if again, by spirituality, we mean a sense, and a functional sense, of connectedness that affords wisdom, self-transcendence, et cetera.

Efficiency And Resiliency

So back to the idea of efficiency, resiliency, tradeoffs. I would point you to the work of Markus Brede, and he's got work sort of mathematically showing that when you're creating networks, especially neural networks, you're going to optimise - and we talked about optimisation in the previous video - you're going to optimise between efficiency and resiliency. That's how you're going to get them to function the best you can. Now what I want to try and do is try to show you the relationship, the poles of the transjectivity and how that's going to come out, or at least point towards the generative relationship that can be discussed in terms of these poles.

So I argued that, initially, the machinery of relevance realization has to be internal. Now again, this is why I just did what I did: when I say “internal”, I don't mean subjective. I don't mean inside the introspective space of the mind. When I'm talking about “the goals are internal” I mean internal to an embodied, embedded, brain/body system; an autopoietic system of adaptivity. In fact, there [are] many people who are arguing in cognitive science that those two terms are interdependent. Just like I'm arguing that relevance realization is dependent on autopoiesis, being an adaptive system and being an autopoietic system are also interdependent. The system can only be continually self-making if it has some capacity to adapt to changes in its environment. And the system is only adaptive if it is trying to maintain itself. And that only makes sense if it has real needs, if it's an autopoietic thing. So these things are actually deeply interlocked: Relevance Realization, autopoiesis, and adaptivity (writes out another triangle with the three of these).

So, as Markus Brede and other people have argued, and I'm giving you an independent argument, you want to get a way of optimising between efficiency and resilience. You don't want… remember with the autonomic nervous system, this doesn't mean getting some average or stable mean, it means the system can move: sometimes giving more emphasis to efficiency, sometimes giving more emphasis to resiliency; just like your autonomic nervous system is constantly evolving, constantly recalibrating your level of arousal.

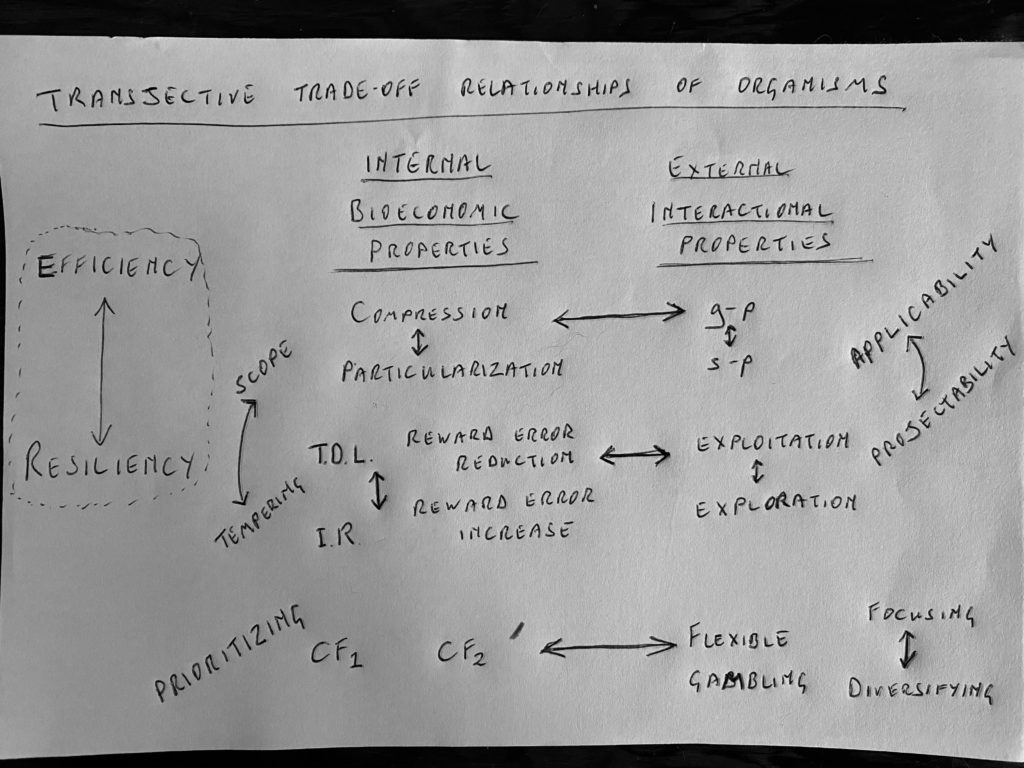

Now, what I want to do is pick up on how those constraints might cash out. In particular [-] how these logistical norms, understood as constraints, can be realized in particular virtual engines (writes efficiency above resiliency on the left side of the board with a double ended arrow joining them). And I want to do this by talking about Internal Bioeconomic Properties (writes this on the board, to the right of efficiency) and then, for lack of a better way for this contrast - and again, this does not map onto subjectivity and objectivity! I don't have to keep saying that, correct (looks at audience - stern face!!)? Okay? External Interactional Properties (writes this on the board, to the right of Internal Bioeconomic Properties). By external I mean that these eventually are going to give rise to goals in the world, as opposed to being constitutive of goals in the system. And what I want to do is show you how you go back and forth. Now it'll make sense to do this in terms of reverse engineering, because it will just help to make more sense, because I'm starting from what you understand in yourself, and then working [back]. So often I will start here (External Interactional Properties) and go this way (indicates moving right to left across the board).

So you want to be adaptive. We said, we want to be a general problem solver, and that's important, but notice that that means there's two kinds of, and people don't like when I use this word, but I don't have an alternative word, so I'm just going to use it… There's two kinds of “machines” you can be. What I mean by that is a system that is capable of solving problems and pursuing goals in some fashion. If I want to be adaptive, what [-] kind of machine do I want to be? Well, I might want to be a general purpose machine (writes general purpose below External Interactional Properties). Now these terms are always, and I keep showing you that, are always relative; they're comparative terms and relative. I don't think anything is absolutely general or absolutely special purpose. It's always a comparative term.

Let me give you an example. My hand is a general purpose machine, right? My hand is such that it can be used in many, many different contexts for many, many different tasks. So it's very general purpose. Now, the problem with being a Jack of all trades is that you are master of none. So the problem with my hand being general purpose is that for specific tasks, it can be outcompeted by a special purpose machine. So, although this is a good general purpose machine, it is nowhere near as good as a hammer for driving in a nail. Nowhere near as good as a screwdriver for removing a screw, et cetera, et cetera. So, in some contexts, special purpose machines outperform general purpose machines, but you wouldn't want the following: you wouldn't want [if, for example,] you're going to be stranded on a desert island, like maybe Tom Hanks in Cast Away, and he lost all of his special purpose tools. They sink to the bottom of the ocean, that causes him a lot of distress! Literally what he starts with at first is his hands, the general purpose machines! And the pr[oblem], and you see that, “Wow, they're not doing very good! If I just had a good knife!” But the problem is you wouldn't want… well, not Tom Hanks, but his character, I forget the character's name. I think it was Jack. You wouldn't [say to] Jack, “Jack, I'm going to cut off your hands and I can attach a knife here and a hammer here and now you have a hammer, a knife!” It's like, “no, no, no, I don't want that either. I don't want just a motley collection of special purpose machines.”

So sometimes you're adaptive by being a general purpose machine. Sometimes you're adaptive by being a special purpose machine (writes Special Purpose beside General Purpose, below External Interactional Properties). So with a general purpose machine, you use the same thing over and over again. Sometimes we make a joke about somebody using a special purpose machine as a general purpose machine, right? “When all you have is a hammer, everything looks like a nail”, right? And it strikes us as a joke because we know that hammers are special purpose things and everything isn’t a nail! It's not so much a joke if I say, “sometimes when all you have is a hand, everything looks graspable!” That's not so weird. So what am I trying to get you to see? What I'm trying to get you to see is you want to be able to move between these (general and special purpose). This (general purpose) is very efficient. Why? Because I'm using the same thing over and over again, the same function over and over again, or at least the same set of tightly bound functions. The thing about a special purpose machine is I don't use it that often. I use my hammer sometimes and my saw sometimes and my screwdriver sometimes, and I have to carry around the toolbox. Now the problem with that is it gets very inefficient, because a lot of the time I'm carrying my hammer around and I'm not using it. So I have to bear the cost of carrying it around while I'm not using it. So it's very inefficient, but you know what it makes me? It makes me tremendously resilient, because when there's a lot of new things, unexpected, specific issues that my general purpose thing can’t handle, I'm ready for them! I have resiliency. I've got differences within my toolbox kit that allow me to deal with these special circumstances.

So notice what I want to do. I want to constantly trade between them (draws a double ended arrow between general and special purpose). I want to constantly trade between them. Now, what I'm going to do - I did that to show you this (points at External Interactional Properties, then wipes general and special purpose off the board). I'm then now going to reorganise it this way… because what I’m going to show you, what I'm arguing, is general purpose is more efficient, special purpose is making you more resilient (now writes general purpose (gp) ABOVE special purpose (sp) with a double ended arrow between them, just like efficiency and resiliency on the left side of the board) and you want to trade between them. Okay, so those are interactional properties, and you said, “so I sort of get the analogy. What does that have to do with the brain and bio economy?” So how would you try to make information processing more efficient? Well, what I want to do is I want to try and make the process I'm using, the functions I'm using, to be as generalisable as possible. That will get me general purpose, because I can use the same function in many places. Then I'm very efficient. How do you do that? How do you do that?

Well, here's where I want to pause and I want to introduce just a tiny bit of narrative in here. When I was writing this paper with Tim Lillicrap and Blake Richards, but especially this was Tim's great insight. You've got to get in[to]… If you're interested in cutting edge AI, you really need to pay attention to the work that Tim Lillicrap is doing. Tim’s a former student of mine and [-] in many ways, of course, he's greatly surpassed my knowledge and expertise. He's one of the cutting edge people in Artificial Intelligence, and he had a great insight here. I was proposing this model, this theory to him and he said, “but you know, you should reverse engineer it in a certain way!” And I said, “what do you mean?” He says, “well, you're acting as if you're just proposing this top down, but what you should see is that many of the things you're talking about are already being used within the AI community!” So the paper we published was “Relevance Realization and the Emerging Framework in Cognitive Science”, namely that a lot of the strategies [that we] are going to talk about here (indicates Internal Bioeconomic Properties) are strategies that are already being developed.

Now, I'm going to have to talk about this at a very abstract level, because which one of the particular architectures or particular applications is going to turn out to be the right one, we don't know yet! That's still something in progress. But I think Tim's point is very well taken that we shouldn't be talking about this in a vacuum. We should also see that the people who are trying to make Artificial Intelligence are already implementing some of these strategies that I’m going to point out. And I think that's very telling, the fact that we're getting convergent argument that way.

Compression And Particularization

Okay, so how do I make an information processing function more generalisable? How do I do that? Well, I want to… I mean, you know how we do it now! Because we've talked about it before! But you do it in science. So here's two variables, for example (draws an x-y graph), it's not limited to two, right?! And so I have a scatter plot (puts a bunch of dots in the graph) and what they taught you to do was a line of best fit (draws a line up through the dots, bottom left to top right through the dots - a best fit). All right, this is a standard move in Cartesian graphing. Now why do you do a line of best fit? [-] and my line of best fit might actually touch none of my data points. Does that mean I'm being ridiculously irresponsible to the data? [That] I’m just engaging in armchair speculation? No! Why do we do this? Why did we do a line of best fit? Well, we're doing this because it allows us to interpolate and extrapolate (extends the line far beyond the data points). It allows us to go beyond the data. Now we're taking a chance and, of course, all good science, and this was the great insight of Popper: “all good science takes good chances!”, right? But here's the thing: I do this so that I can make predictions [of] what the value of Y will be when I have a certain value of X that I've never obtained. I can interpolate and extrapolate. That means I can generalise the function. So this is data compression. This is Data Compression (writes Data Compression above the graph). What I'm trying to do is basically pick up on what's invariant. The idea is that the information always contains noise, and I'm trying to pick up on what's invariant and extend that. And of course that's part and parcel of why we do this, because in science we're trying to do the inductive generalizations, et cetera, et cetera.
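The line-of-best-fit move can be made concrete with a small sketch. This is only an illustration of the general idea, not anything taken from the paper: the function names `fit_line` and `predict` and the data values are made up for the example, and ordinary least squares is assumed as the fitting method. It shows a scatter plot being compressed into two numbers (slope and intercept), the line touching none of the data points, and the same function then being used to interpolate and extrapolate.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Apply the compressed description to any x, seen or unseen."""
    return slope * x + intercept


# Hypothetical noisy observations (made-up numbers, for illustration only).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.2, 3.9, 6.1, 8.0, 9.8]

# Five data points are compressed into two numbers.
slope, intercept = fit_line(xs, ys)

# The fitted line passes through none of the observed points...
touches_a_point = any(
    abs(predict(slope, intercept, x) - y) < 1e-9 for x, y in zip(xs, ys)
)

# ...yet it lets us go beyond the data.
interpolated = predict(slope, intercept, 2.5)   # between observed x values
extrapolated = predict(slope, intercept, 10.0)  # beyond every observed x value

print(f"slope={slope:.2f}, intercept={intercept:.2f}")
print(f"touches a data point: {touches_a_point}")
print(f"y at x=2.5: {interpolated:.3f}, y at x=10: {extrapolated:.2f}")
```

The extrapolated value is a gamble in exactly the sense described above: it is only as good as the assumption that the invariant picked up from the five points keeps holding out at x = 10.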

So the way in which I make my functionality more general, more general purpose, is if I can do a lot of data compression (writes compression under Internal Bioeconomic Properties inline with gp under external interactional properties, with a double ended arrow between compression and gp). So if the data compression allows me to generalise my function, and that generalisation is feeding through the sensory-motor loop in a way that is protecting and promoting my autopoietic goals, it’s going to be reinforced. But what about the opposite (draws a little double ended arrow down from compression in anticipation…)? What was interesting at the time - I think [-] some people have picked us up on a term - we didn't have a term for this, and I remember there was a whole afternoon where Tim and I were just trying to come up with, “what do we want for the trade off?” So this is making your information processing more efficient (up the little arrow towards Compression), more general purpose (across to gp). What makes it more special purpose, more resilient? And so we came up with the term Particularization (writes this below Compression).

And Tim's point - and I'm not going to go into detail here - Tim's point is this is the general strategy that's at work in things like the wake-sleep algorithm that is at the heart of the deep learning promoted by Geoffrey Hinton, who was at U of T, and Tim was a very significant student of Geoff's. And so, this is the abstract explanation of the strategy that’s at work in a lot of the deep learning that's at the core of a lot of successful AI. What particularization is, is I'm trying to keep more in track with the data (draws a squiggly line up through the data points in the graph, overlying the line of best fit). I'm trying to create a function that overfits, in some sense, to that data. That will get me more specifically in contact with “this” particular situation. So this (compression) tends to emphasise what is invariant. This (particularization) tends to get the system to pick up on more variations. So this (compression) will make the system more cross-contextual. It can move across contexts because it can generalise. This (particularization) will tend to make the system more context sensitive.
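To make the contrast between the straight line and the squiggly line concrete, here is a minimal sketch under loud assumptions. It is not the wake-sleep algorithm and not the formalism from the paper. A least-squares line stands in for compression, and a hypothetical nearest-neighbour "memorizer" (the `memorize` function, invented for this example) stands in for particularization, since it tracks every training point exactly. The data are made up: a regularity of y = 2x with alternating noise.

```python
def fit_line(xs, ys):
    """Compression stand-in: least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def memorize(xs, ys):
    """Particularization stand-in: answer with the y of the nearest seen x."""
    table = list(zip(xs, ys))

    def predictor(x):
        nearest_x, nearest_y = min(table, key=lambda pair: abs(pair[0] - x))
        return nearest_y

    return predictor


def mean_squared_error(predictor, xs, ys):
    return sum((predictor(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up training data: underlying regularity y = 2x plus alternating noise.
train_xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
train_ys = [0.5, 1.5, 4.5, 5.5, 8.5, 9.5]

# Made-up new situations that follow the same underlying regularity.
new_xs = [0.4, 1.6, 2.4, 3.6, 4.4]
new_ys = [0.8, 3.2, 4.8, 7.2, 8.8]

line = fit_line(train_xs, train_ys)
memo = memorize(train_xs, train_ys)

line_train = mean_squared_error(line, train_xs, train_ys)
memo_train = mean_squared_error(memo, train_xs, train_ys)
line_new = mean_squared_error(line, new_xs, new_ys)
memo_new = mean_squared_error(memo, new_xs, new_ys)

print(f"line: error on seen data {line_train:.3f}, on new data {line_new:.3f}")
print(f"memo: error on seen data {memo_train:.3f}, on new data {memo_new:.3f}")
```

In this toy setup the memorizer is perfectly in contact with the particular situations it has seen (zero error there), while the line carries over better to new situations that share the invariant. Neither end is "the right one"; the example only shows the two poles that the text says should be traded against each other.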

And of course you don't want to maximize any one of these. You want them dynamically trading and notice how they are - is this the right word? I hope so! - obeying! It sounds so anthropomorphic. Notice how they're obeying the logistical normativity trading between efficiency and resiliency (indicates mutual double ended arrows). And then there's various ways of doing this, right? And there's lots of interesting ways of engineering this into — but it's creating a ‘virtual’ engine — engineering this, creating sets of constraints on this so this will oscillate in the right way and optimise that way (relationships between compression & particularization and gp & sp). And so the idea is when you've got this (compression <—> particularisation) as something that's following the completely internal Bioeconomic logistical norms, it will result in the evolution of sensory motor interaction that is going to make a system or an organism constantly adaptively moving between being general purpose and being special purpose. It will become very adaptive. Now different organisms will be biologically skewed one way or the other, even individuals will be biologically skewed.

Psychopathological Interpretation, Cognitive Scope And Applicability

So there are people now proposing, for example, that we might understand certain psychopathologies in terms of ‘some people are more biased towards overfitting, to particularizing’, and ‘some people are more biased towards compressing and generalising’. These people (compression) tend towards seeing many connections where there aren't connections. And these people (particularisation) tend to be very ‘featurely’ bound. (Wipes graph off the board.)

Okay, what's another one… Oh, so this is “Compression <-> Particularization”. We called [it] “Cognitive Scope” (writes Cognitive Scope beside Compression <-> Particularization), and we called this (gp <-> sp) “applicability” (writes applicability beside gp <-> sp), how much you can apply your function or functions. And the idea is if you can get scope going the right way, it will - and there's no other way of [putting it] - it will attach to, it’ll get coupled to - it's not representing - it will get coupled to this pattern of interaction (indicates gp <-> sp), which will fit you well to the dynamics of change and stability in the environment.

Exploitation VS Exploration

Okay. What's another thing? Well, a lot of people are talking about this (writes “Exploitation <-> Exploration” below External Interactional Properties). You'll see people even talking about this in AI very significantly. Exploitation versus exploration. So here's another trade off. This (Internal Bioeconomic Properties) tends to be in terms of the scope of your information. This (Exploration <-> Exploitation under External Interactional Properties) has to do more with the timing. So here's the question: should I stay here and try and get as much as I can out of here? [-] That's exploiting. Or should I move and try and find new things, new potential sources of resource and reward? They are in a tradeoff relationship, because the longer I stay here, the more opportunity costs I accrue, but the more I move around, the less I can actually draw from the environment. So do I want to maximize either? No, I want to trade between them. I'm always trading between exploiting and exploring. There's different strategies that might be at work here. I've seen recent work in which [-] here (exploitation) you reward a system when it doesn't make an error, and here (exploration) you reward it when it makes an error. And of course those are in a tradeoff relationship, and this sort of makes it more curious (exploration). This makes it more sort of conscientious (exploitation), if I have to speak anthropomorphically!
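The stay-or-move tradeoff can be sketched with a textbook strategy from reinforcement learning, epsilon-greedy action selection on a two-option "bandit". This is an assumption chosen for illustration; it is not the strategy from the paper or from the recent work mentioned above. The function name `run_bandit`, the reward probabilities, and the step count are all hypothetical. With probability `epsilon` the agent explores (tries a random option); otherwise it exploits (stays with the option it currently estimates as best).

```python
import random


def run_bandit(reward_probs, epsilon, steps, seed=0):
    """Epsilon-greedy agent on a Bernoulli bandit (illustrative sketch)."""
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)       # how often each option was tried
    estimates = [0.0] * len(reward_probs)  # running average reward per option
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: move to a randomly chosen option.
            arm = rng.randrange(len(reward_probs))
        else:
            # Exploit: stay with the best-looking option (ties go to the first).
            arm = max(range(len(reward_probs)), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward
    return counts, estimates, total_reward


# Hypothetical environment: option 1 pays off more often than option 0.
reward_probs = [0.4, 0.8]
steps = 1000

# Pure exploitation: never leaves the first option, so it never finds the better one.
greedy_counts, _, greedy_total = run_bandit(reward_probs, epsilon=0.0, steps=steps)

# Pure exploration: keeps moving, so it never settles on what it has learned.
explore_counts, _, explore_total = run_bandit(reward_probs, epsilon=1.0, steps=steps)

# A mix of the two: mostly stays, sometimes moves.
mixed_counts, _, mixed_total = run_bandit(reward_probs, epsilon=0.1, steps=steps)

print("pure exploit:", greedy_counts, greedy_total)
print("pure explore:", explore_counts, explore_total)
print("mixed       :", mixed_counts, mixed_total)
```

The pure exploiter illustrates the opportunity cost of staying put: because it never tries the second option, it cannot discover that it is better. A fixed `epsilon` is itself a simplification; the point in the text is that the balance has to keep shifting as the environment changes.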

[-] (on the left, under Internal Bioeconomic Properties) So one way you can do this is you can Reward Error [-] Reduction or Reward Error Increase (writes these both on the board, separated by another double ended arrow). The way we talked about [it] in the paper is you can trade off between what's called Temporal Difference Learning (writes TDL level with Reward Error Reduction) and Inhibition of Return (writes IR level with Reward Error Increase). I won't go into the dynamics there. What I can say is there's different strategies being considered and being implemented. And this is Cognitive Tempering (writes tempering with TDL and IR), having to do with both temper and the relationship between ‘temp’(?) and time. And this has to do with the ‘projectability’ of your processing (writes projectability with exploitation <-> exploration).

Now, first of all, a couple of things: are we claiming that these are exhaustive? No, they are not exhaustive. They are exemplary. They're exemplary of the ways in which you can trade between efficiency and resiliency and create virtual engines that will adapt, by setting up systems of constraints, the sensory-motor loop, the interactions (indicates External Interactional Properties) with the environment, in an evolving manner.

So why is exploitation efficient? Because I don't have to expend very much. I can just stay here. But it depends on things sort of staying the same. With exploration, I have to expend a lot of energy, I have to move around, and it's only rewarding if there's significant difference. If I go to B and it's the same as A, you know what I should've done? Stayed at A!! Do you see what's happening? All of these in different ways - this has to do with the applicability, the scope; this has to do with the projectability, the time - but [with] all of these you're trading between [the fact] that sometimes what makes something relevant is how it's the same, how it's invariant! Sometimes what makes something relevant is how it's different, how it changes. And you have to constantly shift the balance between those, because that's what reality is doing. That's what reality is doing. (Draws a double ended arrow left to right, joining Exploitation & Exploration and Reward Error Increase/Decrease.)

What's another one? Well, another type of one… I think there are many of these (Internal Bioeconomic Property list), and they are not going to act in an arbitrary fashion because they are all regulated by the tradeoff normativity, the opponent processing, between efficiency and resiliency. Notice, these (compression & particularisation) are both what are called different cost functions; they are dealing again with the bio-economics - how you're dealing with the cost of processing. So playing between the costs and benefits of these, et cetera. But you might also need to play between these (draws two vertical double ended arrows, one between Scope and Tempering on the left and one between Applicability & Projectability on the right). So it's also possible that we have what we called Cognitive Prioritization (written on the board, at the bottom left) in which you have cost functions being played off against each other. [-] So here's a cost function (compression <-> Particularization), here's a cost function (Reward Error Increase <-> RE Decrease) and they’re play[ing]… So cost function one (writes CF1), cost function two (writes CF2), they’re playing off against each other. And you may… and you have to [-] decide here - and this overlaps with what's called Signal Detection Theory, and other things I won't get into… - you have to be very flexible in how you gamble, because you may decide that you will try [to] hedge your bets and activate as many functions as you can. Or you may try to go for the big thing and say, no, I'm going to give[-] a lot of priority to just this function. Of course, you don't want that to maximize, you want flexible gambling (writes Flexible Gambling to the right of CF1 & CF2, with a double arrow between). Sometimes you're focusing. Sometimes you're diversifying. (Writes Focusing on the bottom right, above Diversifying, both next to Flexible Gambling and with an up down double arrow between them). You create a kind of integrated function. 
All of this can be, and if you check it in the paper, all of this can be represented mathematically. Once again, I am not claiming this is exhaustive. I'm claiming it's exemplary. I think these are important. I think scope and time cost functions and prioritising between cost functions… I think it's very plausible that they are part and parcel of our cognitive processing. (Wipes board, except for efficiency <—> resiliency on the left)

What I want you to think about is - I'm representing this abstractly - think about each one of these, you know, here's scope (X axis of a graph drawn), here's tempering (Y axis), and then of course there is the prioritization that is playing between them (Z axis). I want you to think [-] of this as a [3D] space and these functions [-] are all being regulated in this fashion (circles efficiency <-> resiliency). Relevance Realization is always taking place in this space, and at ‘this’ moment, it's got ‘this’ particular value according to tempering and scope and prioritization, and then it moves to ‘this’ value, and then to ‘this’ value and to ‘this’ value and then to ‘this’ value, and then out to ‘this’ value… It’s moving around in a state space (joins dots throughout the 3D represented space within the graph). That's what it is. That's what's happening when you're doing Relevance Realization. But although [-] I've represented how this is dynamic, I haven't shown you how and why it would be developmental. (Wipes graph off the board.)
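The picture of a point moving around a three-dimensional state space can be sketched in a few lines of Python. This is only an illustration of the board diagram, not the model from the paper: encoding each axis as a number between 0 and 1, the starting point, and the random-walk update (`step`, `step_size`) are all assumptions made for the example.

```python
import random

# The three axes drawn on the board; treating each as a value in [0, 1]
# is an assumption made purely for illustration.
AXES = ("scope", "tempering", "prioritization")


def step(state, rng, step_size=0.1):
    """Nudge each coordinate by a small random amount, clamped to [0, 1]."""
    return tuple(
        min(1.0, max(0.0, value + rng.uniform(-step_size, step_size)))
        for value in state
    )


def trajectory(n_steps, seed=0):
    """Return the list of points visited, starting from the centre of the space."""
    rng = random.Random(seed)
    state = (0.5, 0.5, 0.5)
    path = [state]
    for _ in range(n_steps):
        state = step(state, rng)
        path.append(state)
    return path


path = trajectory(5)
for point in path:
    # Each printed line is one "this value" in the space at one moment.
    print(dict(zip(AXES, (round(value, 2) for value in point))))
```

Each printed line is one position in the space; the sequence of positions is the trajectory. A random walk stands in here for whatever actually drives the movement, which in the lecture is the opponent processing between efficiency and resiliency.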

Compression And Particularisation, A Deeper Investigation Through To Complexification

I'm going to do this with just one of these, because I could teach an entire course just on Relevance Realization. When you're doing data compression, you're emphasising how you can integrate information - remember, like the line of best fit, you're emphasising Integration, because you're trying to pick up on what's invariant. And of course this is going to be versus Differentiation. (Writes these on the board Compression - Integration <—> Particularization - Differentiation.) I think you can make a very clear argument that these map very well onto the two fundamental processes that are locked in opponent processing that Piaget - one of the founding figures of developmental psychology - said [-] drive development. This is what Piaget called Assimilation (writes this on the board: Compression - Integration - Assimilation). Assimilation is you have a Cognitive Schema - and what is a cognitive schema again? It is a set of constraints - and you have a cognitive schema and what that set of constraints do is it makes you integrate, it makes you treat the new information as the same as what you got, you integrate it, you assimilate it; that's compression. What's the opposite for Piaget? Well, it's Accommodation (writes this on the board: Particularization - Differentiation - Accommodation) (NB: there are now 3 arrows on the board: Compression <-> Particularization; Integration <-> Differentiation; Assimilation <-> Accommodation. All three in line with Efficiency <-> Resiliency). And that's why, of course, when people talk about exploratory emotions, like awe, they invoke accommodation as a Piagetian principle because it opens you up. What does it do? It causes you to change your structure, your schemas. Why do we do this (assimilation)? Well, because it's very efficient. Why do we have to do this (accommodation)? Because if we just pursue efficiency, if we just assimilate, our machinery gets brittle and [distortional]. It has to go through accommodation. 
It has to introduce variation. It has to rewire and restructure itself so that it can, again, respond to a more complex environment.

So not only is relevance realization inherently dynamic, it is inherently developmental. When a system is self-organizing, there is no deep distinction between its function and its development. It develops by functioning, but by functioning, it develops. When a system is simultaneously integrating and differentiating it is complexifying; Complexification (labels this on the board: Integration <-> Differentiation } Complexification). A system is highly complex if it is both highly differentiated and highly integrated. Now why? But if I'm highly differentiated, I can do many different things (waves his arms about!). But if I do many different things and I’m not highly integrated, I will fly apart as a system. So I need to be both highly differentiated so I can do many different things and highly integrated so I stay together as an integrated system. As systems complexify, they self transcend; they go through qualitative development (writes Self-transcendence off of Complexification).

A Further Biological Analogy

Let me give you an analogy for this. Notice how I keep using biological analogies! That is not a coincidence. You started out life as a zygote, a fertilized cell, a singular cell. The egg and the sperm (gesticulates coming together), zygote. Initially, all that happens is the cells just reproduce, but then something very interesting starts to happen. You get cellular differentiation. Some of the cells start to become lung cells. Some of them start to become eye cells. Some start to become organ cells. But they don't just differentiate. They integrate! They literally Self-ORGANize into a heart organ, an eye… (writes Self-organize on the board, highlighting ‘organ’). You developed through a process, at least biologically, of biological complexification. What does that give you? That gives you emergent abilities (Writes Emergent off of Complexification). You transcend yourself as a system. When I was a zygote, I could not vote. I could not give this lecture. I now have those functions. In fact, when I was a zygote, I couldn't learn what I needed to learn in order to do this lecture. I did not have that qualitative competence. I did not have those functions! But as a system complexifies - notice what I'm showing you: as a system is going through relevance realization, it is also complexifying, it is getting new emergent abilities of how it can interact with the environment and then extend that relevance realization into that emergent self-transcendence. If you're a relevance realising thing, you're an inherently dynamical, self-organizing, autopoietic thing, which means you are an inherently developmental thing, which means you are an inherently self transcending thing. (Wipes board completely clean.)

An Argument For Relevance Realization As A Unified Phenomenon

Now, [I] want to respond to a potential argument that you might have. It's like, “well, I get all of this, but maybe relevance realization is a bunch of many different functions!” First of all, I'm not disagreeing with the idea that a lot of our intelligence is carried with heuristics and some of those are more special purpose and some are more general purpose, and we need to learn how to trade off between them. However, I do want to claim that relevance realization is a unified phenomenon. And I'm going to do this in a two part way. The first is to first assert, and then I will later substantiate, that when we're talking about general intelligence - in fact that's what this whole argument has been - we’re talking about relevance realization. Now this goes to work I did with Leo Ferraro, who was a psychometrist, somebody who actually does psychometric testing on people's intelligence. And one of the things we know from Spearman, way back in the twenties, is - and he discovered what's called the General Factor of Intelligence, sometimes called General Intelligence; there's a debate about whether we should identify those or not. I'm not going to get into that right now! What Spearman found was that how kids were doing in math was actually predictive of how they were doing in English and even how they were doing - contrary to what our culture says - how they're doing in sports. [-] How I'm doing in all these different tasks (writes A B C D on the board in a square) was… how I did in A was predictive of how I did in B, and vice versa! (Connects all letters to all other letters with double ended arrows.) This is what's called a Strong Positive Manifold. There's this huge inter-predictability between how you do on all these very many different tasks. That is your general intelligence. Many people would argue, and I would agree, that this is the capacity that underwrites you being a general problem solver.
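To make the idea of a positive manifold concrete, here is a small sketch using simulated scores, not real psychometric data. It assumes each person has one latent general ability `g` and that the score on each of the four tasks A to D is a mix of `g` and task-specific noise. The function names, the `loading` value of 0.7, and the sample size are all assumptions chosen for illustration.

```python
import random
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_scores(n_people=500, tasks="ABCD", loading=0.7, seed=0):
    """Simulated task scores: a shared latent factor plus task-specific noise."""
    rng = random.Random(seed)
    noise_scale = (1 - loading ** 2) ** 0.5
    scores = {task: [] for task in tasks}
    for _ in range(n_people):
        g = rng.gauss(0, 1)  # hypothetical general ability of one person
        for task in tasks:
            scores[task].append(loading * g + noise_scale * rng.gauss(0, 1))
    return scores


scores = simulate_scores()
correlations = {
    (a, b): pearson(scores[a], scores[b]) for a, b in combinations("ABCD", 2)
}
for pair, r in correlations.items():
    print(pair, round(r, 2))
```

Every pair of tasks comes out positively correlated, which is the A, B, C, D diagram with all its double-ended arrows. The simulation only shows that a single shared factor is sufficient to produce such a pattern; it says nothing about what that factor is.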

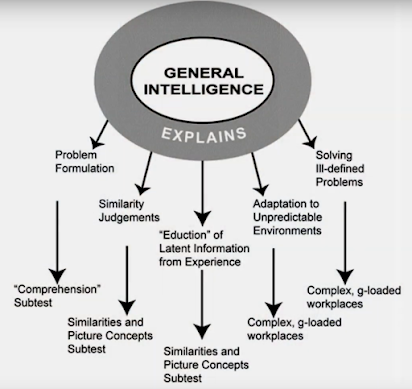

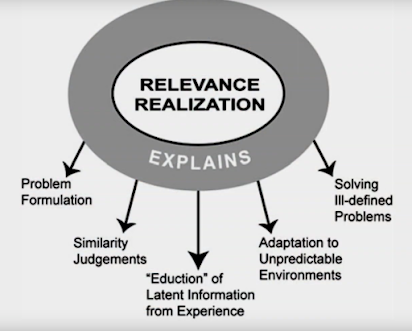

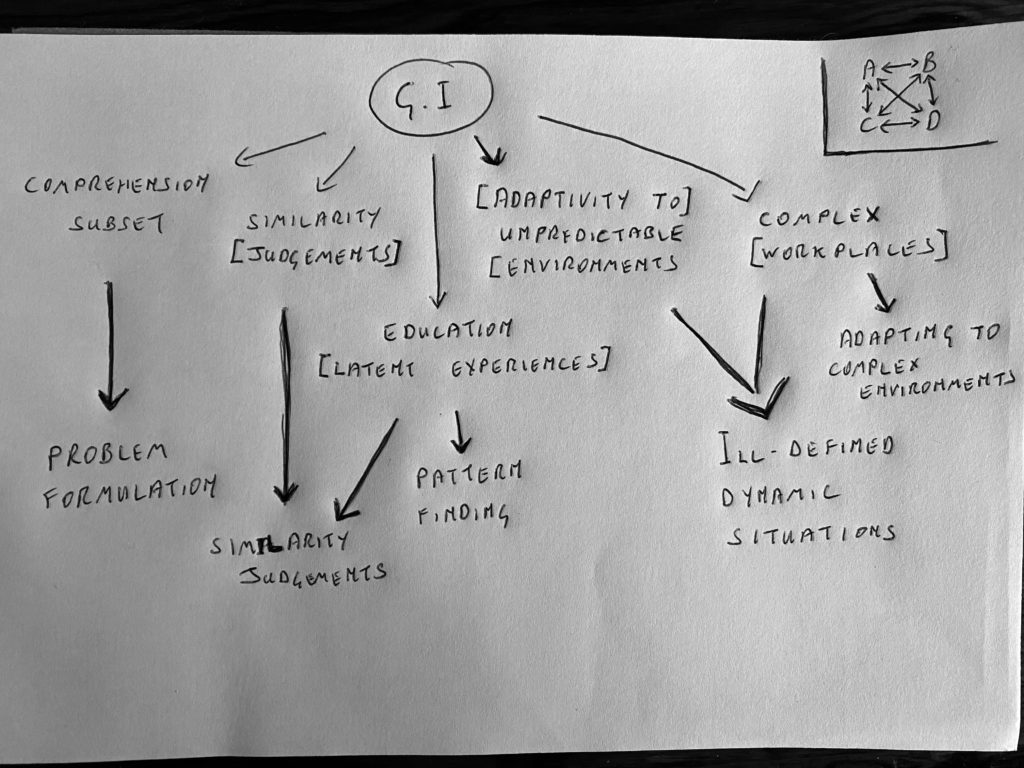

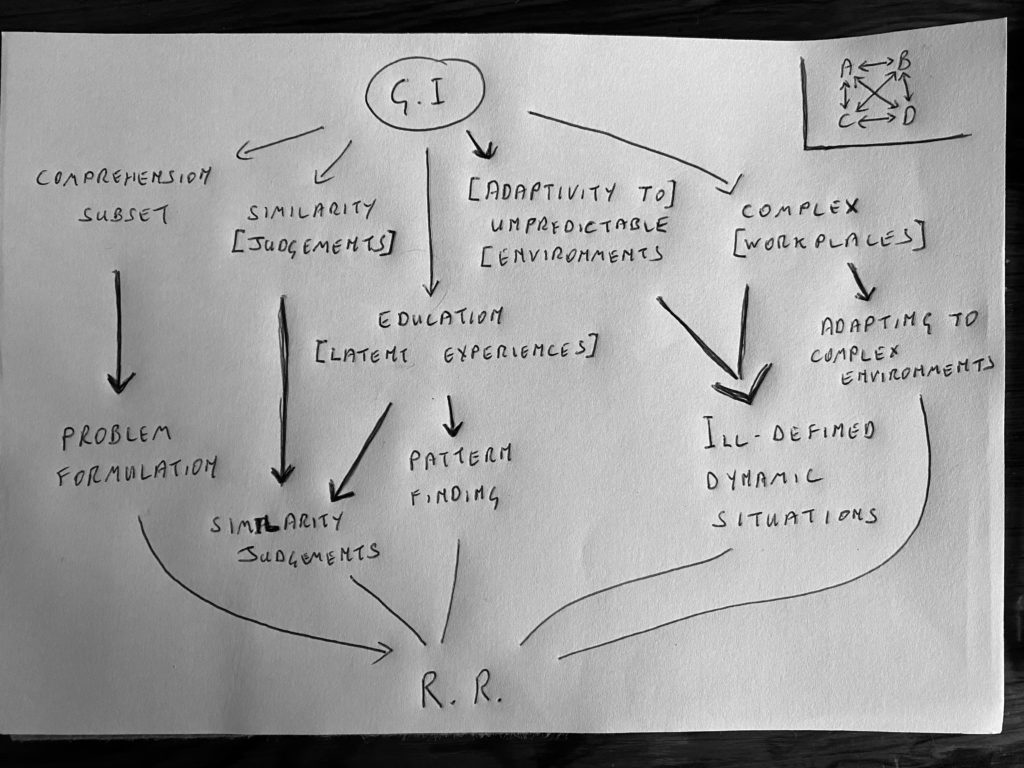

Often when we're testing for intelligence, we're testing, therefore, General Intelligence (circles G.I. on the board). I'll put the panel up as we go along. What Leo showed me - he made a good argument - is that the things we study when [-] we’re doing something like the Wechsler's test, or something like that, a psychometric test… So you will test things like “The Comprehension Subtest”.

And of course you'll concentrate on “Similarity Judgments”, right? You'll also do what, right? “The Similarities of pictures”. Okay. Other people have talked about your “Ability to Adapt to Unpredictable Environments”. There's other work by (?) and others… Your “Ability to deal with complex workplaces”, what are called “G-Loaded” that require a lot… (shows these all circled in the diagram onscreen, and writes a few of them off GI on the board).

Now when you trace these back, what this points to is your capacity for “Problem Formulation”, the “Similarity Judgments”, and what are called the “Eduction abilities”, the ability to draw out latent patterns. This of course is similarity judgment. This is a similarity judgment and pattern finding. The complex [workplaces] is basically dealing with very ill defined dynamic situations. [-] and adapting to complex environments. So this is general intelligence.

This is [-] how we test general intelligence. We test people across all these different kinds of tasks and what we find is a Strong Positive Manifold - there's some general ability behind it. But notice these: problem formulation, similarity judgments, pattern finding, dealing with ill-defined dynamic situations, adapting to complex environments… That's exactly all the places that I've argued we need relevance realization. (Completes the above diagram by bringing everything together to R.R. at the bottom.)

Relevance Realization, what I would argue, is actually the underlying ability of your general intelligence. That's how we test for it (indicates framework on the board). This is the things that came out. And you can even see comprehension aspects in here, all kinds of things. So relevance realization, I think, is a very good candidate for your general intelligence. Insofar as general intelligence is a unified thing, and we have… this is, look, this is one of the most robust findings in psychology (Indicates Spearman / ABCD Manifold). It just keeps happening. There's always debates about it, blah, blah, blah, and people don't like the psychometric measures of intelligence. And I think that's because they're confusing intelligence and relevance and wisdom - we'll come back to that. The thing is, this is a very powerful measure. It's reliable! This is from the 1920s that this keeps getting replicated. This is not going through a replication crisis. And if I had to know one thing about you in order to try and predict you, the one thing that outperforms anything else is knowing this (again, taps Spearman/ABCD Manifold); this will tell me how you do in school, how you do in your relationships, how well you treat your health, how long you're likely to live, whether or not you're going to get a job. This crushes how well you do in an interview in predicting whether or not you'll get and keep a job. Is this the only thing that's predictive of you? No! And I'm going to argue later that intelligence and rationality are not identical. But is this (Spearman/Manifold) a real thing? And is it a unified thing? Yes. And can we make sense of this (G.I. on the board) as relevance realization? Yes. Is relevance realization, therefore, a unified thing? Yes. 
So relevance realization is your general intelligence and what I'm arguing - well at least that's what I'm arguing - and that your general intelligence can be understood as a dynamic developmental evolution of your sensory-motor fittedness that is regulated by virtual engines that are ultimately regulated by the logistical normativity of the opponent processing between efficiency and resiliency. (Wipes board clean)

So we've already integrated a lot of psychology and the beginnings of biology, with some Neuroscience, [-] and we've definitely integrated with some of the best insights from artificial intelligence. What I want to do next time to finish off this argument is to show how this might be realized in dynamical processes within the brain and how that is lining up with some of our cutting edge ideas. I'm spending so much time on this because this is the linchpin argument of the cognitive science side of the whole series. If I can show you how everything feeds in to relevance realization (draws a convergent diagram into RR), if I can give you a good scientific explanation of this (RR) in terms of psychology, artificial intelligence, biology, neuroscientific processing, then it is legitimate and plausible to say that I have a naturalistic explanation of that. And if the history is pointing towards this, what we're going to then have the means to do is to argue how this - and we've already seen it - how it's probably embedded in your procedural, perspectival, participatory knowing, it's embedded into your [transactional] dynamical coupling to the environment and the affordance of the agent arena relationship; the connectivity between mind and body, the connectivity between mind and world. (Represents all of this with the divergent side of the diagram, coming out of RR.) We've seen it’s central to your intelligence, central to [the] functionality of your consciousness. This is going to allow me to explain so much! We've already seen it as affording an account of why you're inherently self transcending.

We'll see that we can use this machinery to come up with an account of the relationship between intelligence, rationality, and wisdom. We will be able to explain so much of what's at the centre of human spirituality. We will have a strong plausibility argument for how we can integrate cognitive science and human spirituality in a way that may help us to powerfully address the meaning crisis.

Thank you very much for your time and attention.

END

Episode 31 - Notes

Markus Brede

Networks that optimize a trade-off between efficiency and dynamical resilience

Tim Lillicrap and Blake Richards (& John Vervaeke)

“Relevance Realization and the Emerging Framework in Cognitive Science”

Popper

Sir Karl Raimund Popper CH FBA FRS was an Austrian-British philosopher, academic and social commentator. One of the 20th century's most influential philosophers of science, Popper is known for his rejection of the classical inductivist views on the scientific method in favour of empirical falsification

Geoffrey Hinton

Geoffrey Everest Hinton CC FRS FRSC is a British-Canadian cognitive psychologist and computer scientist, most noted for his work on artificial neural networks. Since 2013 he divides his time working for Google and the University of Toronto.

Wake-sleep algorithm that is at the heart of the deep learning promoted by Geoffrey Hinton

The wake-sleep algorithm is an unsupervised learning algorithm for a stochastic multilayer neural network. The algorithm adjusts the parameters so as to produce a good density estimator. There are two learning phases, the “wake” phase and the “sleep” phase, which are performed alternately.

Piaget - one of the founding figures of developmental psychology

Jean Piaget was a Swiss psychologist known for his work on child development. Piaget's 1936 theory of cognitive development and epistemological view are together called "genetic epistemology". Piaget placed great importance on the education of children.

Spearman

Charles Edward Spearman, FRS was an English psychologist known for work in statistics, as a pioneer of factor analysis, and for Spearman's rank correlation coefficient and the General Factor of Intelligence.

Wechsler's test

The Wechsler Adult Intelligence Scale (WAIS) is an IQ test designed to measure intelligence and cognitive ability in adults and older adolescents. The original WAIS (Form I) was published in February 1955 by David Wechsler, as a revision of the Wechsler–Bellevue Intelligence Scale, released in 1939.

Other helpful resources about this episode:

Notes on Bevry

Additional Notes on Bevry
