Ep. 41 - Awakening from the Meaning Crisis - What is Rationality?

(Sectioning and transcripts made by MeaningCrisis.co)

Transcript

Welcome back to Awakening from the Meaning Crisis.

So we are pursuing the cognitive science of wisdom because wisdom has always been associated with meaning from the axial revolution onward, so that's a deep reason. Wisdom, of course, is also important for the cultivation of enlightenment, the response to the perennial problems. It's also playing a central role in being able to interpret our scientific worldview in a way that allows us to respond to the historical forces. And so wisdom is very important.

We continued to look at McKee and Barber, and we saw their convergence argument that at the core of wisdom is the systematic seeing through of illusion and into what's real. And this is very much like: as the child is to the adult, the adult is to the sage. And then two other important aspects of it: that wisdom has much more to do with how you know than what you know, which means how you come to know it, and also how you interpret the knowledge. And that wisdom is, therefore, in a related fashion, deeply perspectival and participatory. And that's why wisdom can be associated with important forms of pragmatic self-contradiction. We then noted the connection with overcoming self-deception in a systematic fashion, and the emphasis in wisdom on the process rather than the products of knowing.

And both of those took us into the work of Stanovich, because he famously argues that one of the hallmarks of rationality is valuing the process in addition to valuing the products of our cognition (in addition to, not rather than). And that took us also into the discussion of rationality, and Stanovich is a good bridge because for him, the notions of rationality and ameliorating foolishness overlap very strongly. And we got into this notion, which I've been sort of comprehensively arguing throughout this course, that rationality has to do with the reliable and systematic overcoming of self-deception, and the potential affording of flourishing by some process of optimization of achieving our goals, with the caveat that as we try to optimize, we often change the goals that we are pursuing. One reason being that we come to more and more appreciate the value of the process as opposed to just the end result of the process.

So in order to pursue that and to deepen our notion of rationality and thereby deepen our notion of wisdom—and, of course, wisdom has been associated with rationality from the beginning: Socrates and Plato and Aristotle, right?—we took a look at the rationality debate. We saw—I gave you three examples of many possible examples of experimental results that seem to show reliably this kind of thing. Very reliably, no replication crisis on this material.

So, very reliably, two things: that people acquiesce, they acknowledge and accept the authority of certain standards, principles of how they should reason. And yet they reliably failed to meet those standards. And so one way to—one possible interpretation of that, not the only interpretation—one possible interpretation is that most people are irrational in nature. As I pointed out, because rationality is existential and not just, sort of, abstract, theoretical, concluding that people are irrational, has important implications for their moral status, their political status, their legal status, even their developmental status. Okay? So this is what I keep meaning when I'm saying rationality is deeply existential, it is not just theoretical.

Okay. We took a look at one—the beginning of what's called the rationality debate and good science always has good debate in it. The rationality debate and the argument was made by Cohen, that human beings can't be comprehensively irrational because we have to ask this very pertinent question: where do the standards come from? And the argument is the standards have to come from us. And how do we come up with that normative theory?

The way we come up with our normative theory is consonant with the fact that we're the source of our standards. This is the idea that at the level of my competence, my competence contains all the standards. And what I do in order to get my normative theory is I idealize away all my performance errors. And this takes time. It takes a lot of reflection, until I get at the underlying competence. And then what I'm doing when I'm proposing a normative theory is I'm giving people this now explicated, excavated account of the competence that they possess and then demanding from them that they do their best to reduce the performance errors and meet that competence.

So we—at the level of our competence, we are fundamentally rational and all of the mistakes that people are making in these experiments, according to Cohen are just performance errors. They're just performance errors. Okay. And just like you do not think I've lost English because of all of my performance errors, you basically dismiss them and read through them and attribute to me the underlying competence, Cohen is arguing, we should read through and dismiss these experimental results. And because the argument shows that people must have the underlying competence.

Competence Performance Distinctions

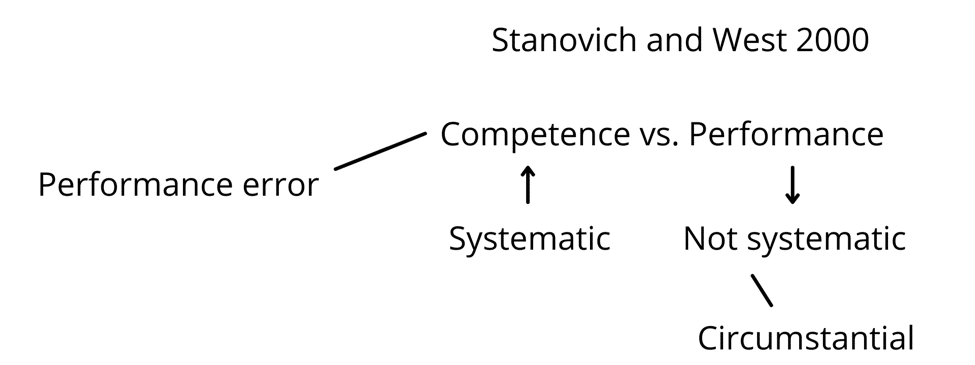

Okay. So how does Stanovich reply to this? Well, in many places, but I think the best is Stanovich and West 2000 (writes Stanovich and West 2000) in Behavioral and Brain Sciences. Because this is, for me, a gold standard of how you do really good cognitive science; the way they integrate philosophical and psychological argumentation together, for example, is really, really impressive. So Stanovich and West say, well, if Cohen is right, then all of the errors that people are making are performance errors (Fig. 1a) (writes Performance errors). And Cohen is invoking the competence-performance distinction, (writes Competence vs. Performance beside Performance Error) which, of course, goes back through, you know, to Chomsky and, ultimately, to Piaget.

Now let's remember Piaget 'cause we've talked about this. Well, how do you distinguish the child's speech deficits from the drunkard's, right? The drunkard is giving performance error, but we think the child, actually, her competence, for example, is not sufficiently developed. Why do we think that? Why do we think when the children are making all these conservation errors, right, that that reflects something about their competence at that point? Well, that's because errors that reflect a defect or a deficit in competence (draws an arrow pointing up to Competence) are systematic errors (writes Systematic below Competence). That's precisely how Piaget did his work. Right? That's precisely how you would determine that if I got brain damage and I'm leaving out, right, my sentences are broken, that it is not performance error, because my errors would be systematic across different contexts, different times of day, different tasks. I'd be making these mistakes.

Performance errors are not systematic, right? (writes Not systematic below Performance) So if my speech is broken because I'm rushing, right, it's not going to be broken when I'm not rushing. If my speech is broken, when I'm tired, it's not going to be broken when I'm not tired. So this is circumstantially driven. (writes Circumstantial below Not systematic) As the circumstances change, the patterns of error will change and go all over the place. So these errors are not systematic in nature.

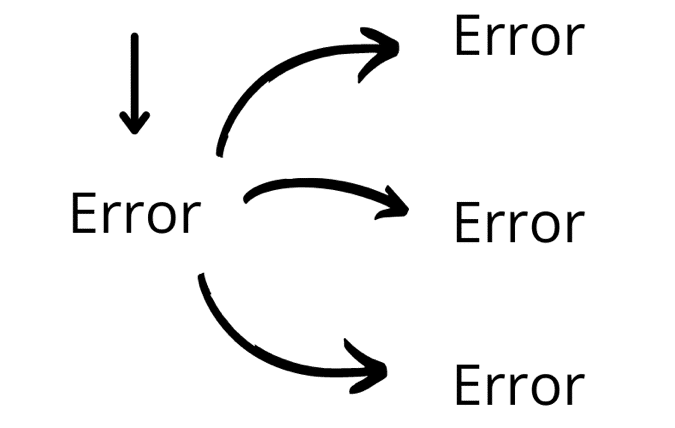

Okay, well, that means we have a reliable way of telling whether or not the errors that the people are making in the experiments are performance errors (encloses Performance error in a box) or not. How do you see if errors are systematic? Well, this is what you do. Errors are systematic (writes Error) if when I make this error (Fig. 2) (draws an arrow pointing to Error), it's highly predictive that I'll make this error (draws an arrow away from Error and writes Error). It's highly predictive that I'll make this error (draws another arrow pointing away from Error and writes Error at the end of the third arrow). It's highly predictive that I'll make this error (draws another arrow pointing away from Error and writes Error at the end of the fourth arrow). So if a child is failing to show conservation in this task, that's predictive that they'll fail to show conservation in this task, in this task and this task, right? So, and we've talked about this before, it's the degree to which there's a positive manifold. Remember when we talked about general intelligence, the degree to which your performance in one task is predictive of how you'll do on other tasks. If you have a manifold in your performance, if the errors are systematic across many different tasks that you're performing, then that's evidence that the errors are systematic (indicates Systematic) rather than not systematic (indicates Not systematic).
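
As a rough illustration of the test just described, here is a minimal Python sketch of checking for a positive manifold. The task names and error counts are made up for illustration, and `pearson` and `is_positive_manifold` are hypothetical helper names, not anything taken from Stanovich and West; real analyses use far larger samples and proper statistical tests.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def is_positive_manifold(scores):
    """True if every pair of tasks is positively correlated.

    scores maps a task name to a list of per-participant error counts,
    with participants in the same order for every task.
    """
    return all(
        pearson(scores[a], scores[b]) > 0
        for a, b in combinations(scores, 2)
    )


# Invented error counts for six hypothetical participants.
systematic = {
    "critical_detachment": [5, 4, 4, 2, 1, 0],
    "belief_perseverance": [4, 5, 3, 2, 2, 1],
    "lilypad_problem": [5, 3, 4, 1, 2, 0],
}

# Invented counts where an error on one task says nothing
# reliable about errors on the others.
circumstantial = {
    "task_a": [1, 0, 1, 0, 1, 0],
    "task_b": [0, 1, 0, 1, 0, 1],
    "task_c": [1, 1, 0, 0, 1, 0],
}

print(is_positive_manifold(systematic))
print(is_positive_manifold(circumstantial))
```

In the first data set, participants who err on one task tend to err on the others, which is the pattern the lecture calls systematic. In the second, the errors do not predict each other, which is what circumstantial performance errors would look like.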

Okay, so that's easy enough. So then what we can do, and we've got all this data, is look at people doing these different experiments. And in many experiments they're doing multiple versions, the same participant is doing one task or [another]. So what we can do is the following. We can see, for example, if I make a failure in critical detachment, does that mean that I'll also tend to show belief perseverance? Does that also mean that I'll tend to leap to the wrong conclusion when I was doing the task on, you know, the lilypads covering the pond? And the answer, and this is what there is overwhelming evidence for, is yes. The errors you make are systematic. They're systematic.

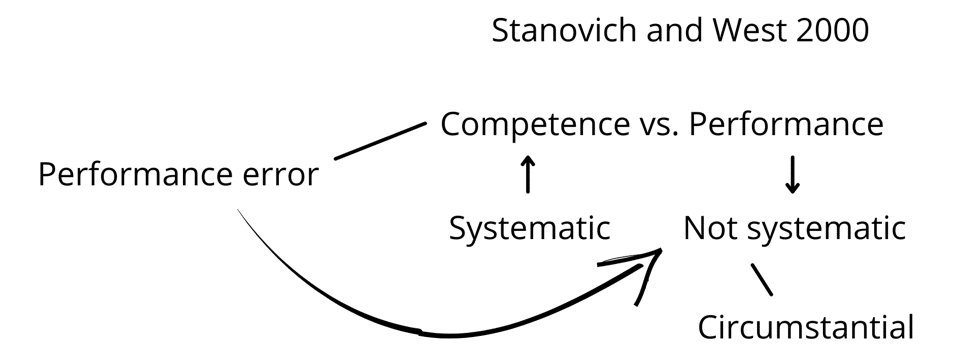

And see. So now Stanovich and West say, ah, Cohen's argument predicts that the errors are performance errors (indicates Performance error). That's what he claims. That means that the errors should be not systematic (Fig. 1b) (draws an arrow from Performance error to Not systematic). But what we find is overwhelming convincing evidence (encircles Systematic) that the errors that people are making in all these tasks are systematically related together. So these are errors at the level of competence.

And now you go, "What?" And this is what I mean about good debate: it makes something problematic, right? Because there's something right about Cohen's argument and Stanovich acknowledges it, right? There's something right in that we have to be the source, right, of our standards. And yet Cohen's conclusion that all the errors are performance errors is wrong. It's undeniably wrong. How do we put these together?

Well, you put these together and you can see Stanovich and West doing this to varying degrees. You put this together by stepping back and looking at an assumption that's in Cohen's argument, it's an assumption about the competence. (writes Competence) Cohen is assuming that that competence for rationality (Fig. 3) (writes Single beside Competence) is a single competence. He's assuming that that competence is static, (writes Static beside Competence) right? He's also assuming that the competence is completely individualistic. (writes Individualistic below Static) Okay? I'll come back to this one (indicates Individualistic) much later, I'm going to address these two (draws arrows pointing to Single and Static) because Stanovich really doesn't talk about this. (indicates Individualistic) So remember that, but we'll come back to it later. (erases Individualistic)

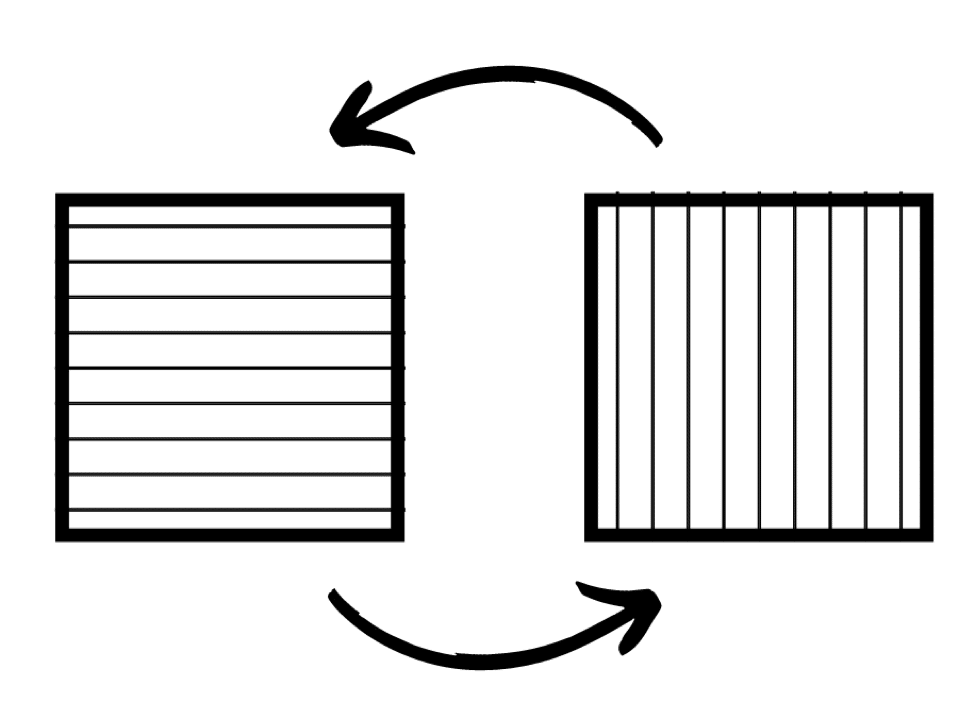

Think about the platonic idea. What if I have two competences (Fig. 4) (draws two squares) that both are working towards getting me to reliably achieve my goals, correct my own behavior. But these competencies could actually conflict with each other. (draws an arrow from the first square to the second square and another arrow from the second square to the first square) That would mean I would be the source of all the standards. Here are all the standards here (draws horizontal lines inside the first square). Here are all the standards here (draws vertical lines inside the second square). I am all—I'm the source for all the standards. But at the level of my competence, I can be generating error because these two competencies (indicates the two squares) can actually be in conflict with each other.

So one of the—the way you start to resolve this debate, and this has become a central idea in psychology and cognitive psychology and cognitive science is that we don't have a single competency. We have to have multiple competencies. And what that means is, and this is why, for example, assuming uncritically, uncritically assuming that you could reduce rationality and identify it with the single competence of syllogistic reasoning is just fundamentally wrong. It's not paying attention to the science.

Here's another issue. Cohen is assuming that your competence is full blown. It's finished, it's static. It's done. But, and I sort of slipped this in, notice the examples I used of small children. Their competence, for example, in English or whatever language they're speaking, I just happen to be speaking English that's why I'm using it. The little girl —the two and a half year old, her competence is not fully developed. She will come to have the standards as that competency fully develops. But as that competency is immature, she can be a source of error from her competence. So, we have to give up the assumption that our competence again is static. Why would it be? And your cognition is inherently dynamic and developmental. Again, assuming, Oh, no, this is just what it is, right? And assuming that there is a single thing we're pointing to when we point to rationality is a mistake. See, this way, Stanovich and West can say—and it's brilliant, right? It's brilliant—they can say Cohen's argument is fundamentally right but his conclusion, that specific conclusion is wrong, because the conclusion that the errors are only performance errors is only a conclusion based on the hidden premise, right, that the competence is single and static. You have multiple competencies and they're in ongoing development.

Okay. So we've learned something very interesting. (erases the board) So what's happening is, and Stanovich is an advocate of this, right? You have dual processing models. (Fig. 5) (writes Dual processing) This is the idea that we have different ways of processing information. Think of how platonic this is, how much Plato was going, "I told you this a long time ago," right? We have different styles, ways of processing information, that are good for different kinds of problem solving, and neither one of these competencies can be exclusive or sufficient for us, but they have ultimately a complementary relationship, a relationship I would argue of opponent processing. Stanovich has a different view. We'll come back to that a little bit later. Okay.

Finitary Predicament

The second person in the debate, and you've heard me mention him and you've heard me talk about him (writes Cherniak) with serious respect. And he is given serious respect by Stanovich and West. Cherniak has a much different approach to the rationality debate. He agrees with Stanovich and West that the difficulty is not at the level of our performance, it's at the level of our competence, but he has a different move to make. He has a move that you see, you saw me invoke last time. This is the Ought implies can. So this is the question whether or not, right, whether or not we're applying the right normative theory to people in these experiments when we're judging them to be irrational.

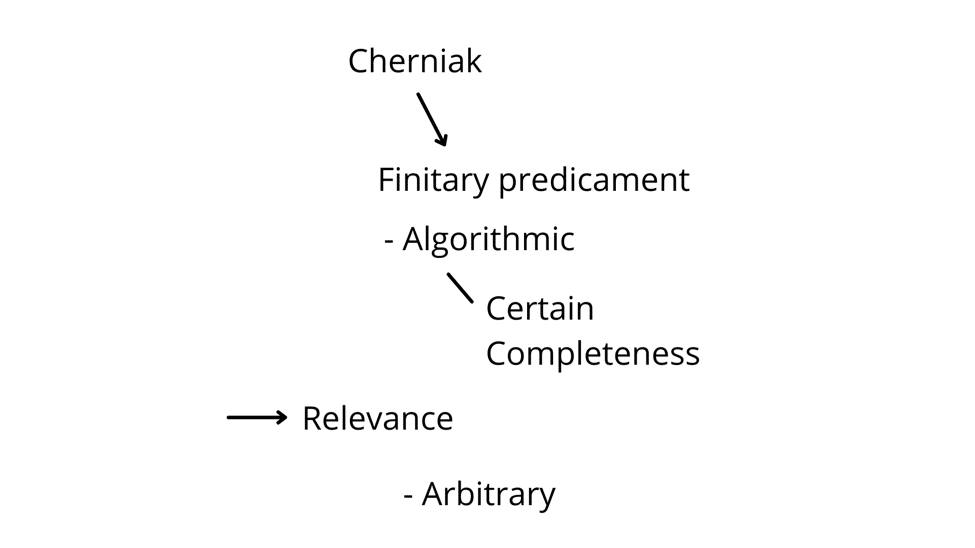

So Cherniak invokes something that you saw me invoke multiple times (draws an arrow pointing away from Cherniak) when we talked about relevance realization. We're in a finitary predicament. (Fig. 6a) (writes Finitary predicament below Cherniak) This is actually his term. Alright. We cannot, because it is combinatorially explosive, derive all the implications; we cannot consider all of our assumptions. All of this stuff that we've talked about before: we cannot go back and recreate all of the ways in which we've represented something. This is combinatorially explosive. So what do we do, right? Do we just arbitrarily choose whatever implication we want? No.

So here's the point, right? We can't be algorithmic, right? (Fig. 6b) (writes Algorithmic below Finitary predicament) We can't use standards that work in terms of certainty and completeness (writes Certain Completeness below Algorithmic) because, for example, if I tried to be comprehensively deductively logical, then I would engage—I would fall very rapidly into combinatorial explosion for any problem that I'm trying to solve. And I would then have committed cognitive suicide. It cannot be a standard, a normative standard of rationality if trying to follow it would commit me to cognitive suicide, which would undermine any attempt to satisfy any of my goals.
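
To get a feel for how quickly this blows up, here is a small Python sketch. It assumes one particular brute-force method: checking a set of independent yes/no beliefs for consistency by going through every possible true/false assignment. The lecture does not specify a method, and `truth_table_rows` is a name invented for this example.

```python
def truth_table_rows(n_beliefs: int) -> int:
    """Number of true/false assignments over n independent yes/no beliefs."""
    return 2 ** n_beliefs


# Each additional belief doubles the number of assignments to examine.
for n in (10, 20, 40, 80):
    print(f"{n} beliefs -> {truth_table_rows(n):,} assignments to check")
```

Under this assumption, doubling the number of beliefs does not double the work; it squares the number of assignments, which is why an exhaustive standard cannot be met from within the finitary predicament.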

You see what Cherniak is saying, right? You can't do this (underlines Algorithmic), but, of course, you don't just arbitrarily (writes Arbitrary below Algorithmic) choose whatever representation you want, choose whatever inference you want, choose to check whatever contradiction you want. So you can't check them all and you can't just arbitrarily—well, what's the answer?

Well, you saw the answer before and it's one that I—you choose—it's relevance. You pick the relevant implications, you check the relevant contradictions, you check which aspects of your representation you consider relevant, et cetera. You do relevance realization. And Stanovich is like, yeah, that's right. Like, we're not going to argue with that. And that's part of what I've been arguing throughout. There's this consensus on how central this ability is, at least it's an emerging consensus. Herbert Simon from Newell and Simon, you know, talks about bounded rationality that we can't be purely computational, algorithmic, et cetera, for all of the reasons we've already explored.

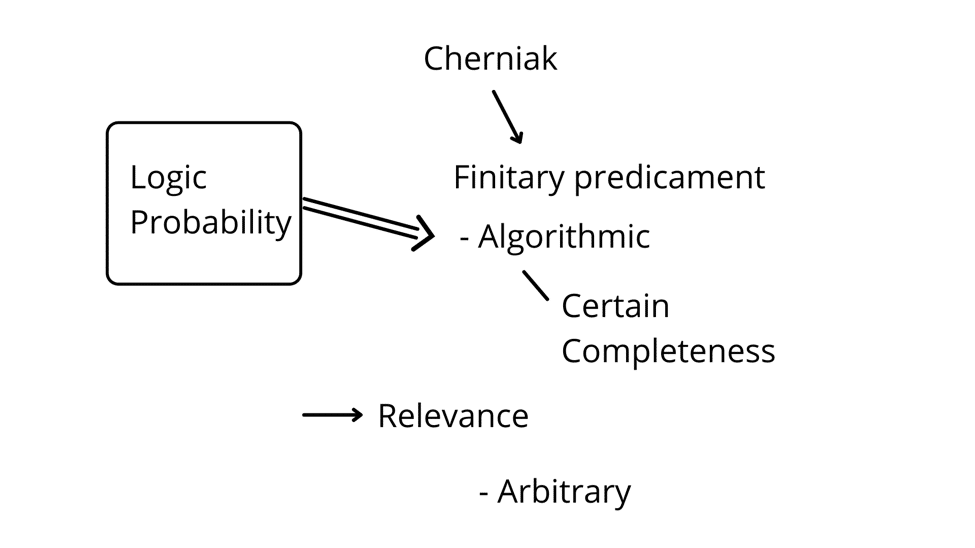

What Cherniak says is, but look what people are using in the experiments. They're using formal logic. (writes Logic) They're using formal probability theory (Fig. 6c). (writes Probability below Logic) They're using all these formal, purely algorithmic theories. (draws arrow from Logic Probability to Algorithmic) By the way, you say, Oh, but probability certainly doesn't work in certainty. Yes, it does. It gives you certainty about probabilities. That's what makes it a formal theory. That's why it has axioms and theorems, et cetera. Okay? Don't confuse properties of the theory with properties of what the theory is about. So what Cherniak says is the scientists in the experiments are using all these formal theories (encloses Logic Probability in a box and encircles Algorithmic - Certain Completeness) that can only be applied in very limited contexts. If I try to apply them comprehensively in my life, and that's where rationality matters, because rationality is an existential issue, if I try to apply them (indicates Finitary predicament) comprehensively in my life from within the finitary predicament, I am doomed to fail. And then you're laying an Ought on me that I cannot possibly meet, which means you, scientists, have the wrong normative theory, because if you're laying a normative theory on me that I cannot possibly meet, that's evidence that it's the wrong normative theory.

That's Cherniak's argument, it's a powerful argument. It's a good argument. It's an argument I take very seriously. So do Stanovich and West. They go, yes. All of this is right. However, Cherniak thinks he's talking about one thing and he's actually talking about another. And this is such a clever response, and it's going to tell us something really, really important. Right? Okay.

First of all, this tells you that (indicates Logic Probability) you, again, here's another argument why you can't equate rationality with merely being logical, merely using probability theory, right? Does that mean I can be arbitrary (indicates Arbitrary) and ignore logic? No. It's the much more difficult—and notice how this starts to overlap with wisdom—it's a much more difficult issue of knowing when, where, and to what degree I should use logic and probability in a formal manner, et cetera.

Computational Limitations

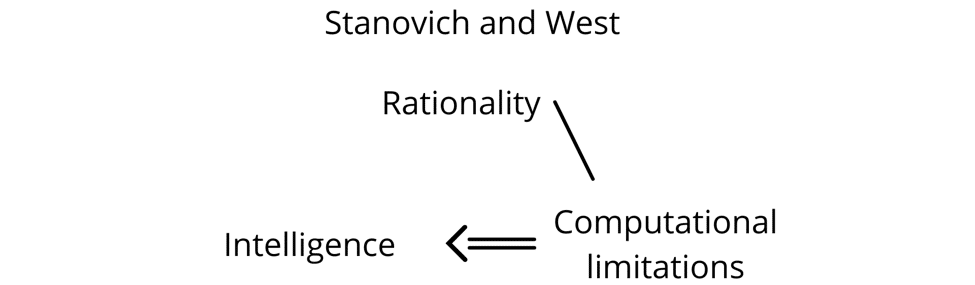

Okay. How do Stanovich and West reply? Again, I think it's brilliant. They say all of this is right, but it's not about rationality. (writes Rationality below Stanovich & West) All of this. All of this stuff. They tend to talk about it in terms of, they use the phrase, computational limitations. (Fig. 7a) (writes Computational limitations below Stanovich & West) They always describe it sort of negatively. Instead of positively, in terms of relevance realization, although the two are interdefined, they always talk about it in terms of computational limitations. But they say all this stuff that Cherniak is talking about in terms of computational limitations is actually not about rationality. (draws an arrow from Computational limitations to Rationality) It's actually about intelligence. (draws an arrow from Computational limitations and writes Intelligence) This is going to be a brilliant move, and what it's also going to show us is that there is, and I said this a while ago, a deep difference between being intelligent and being rational. In fact, Stanovich is going to argue that what makes you foolish is you're highly intelligent and highly irrational. And that is going to make sense of what we've already argued: that the very processes that make me adaptively intelligent are the very processes that also subject me to self-deception.

How does he do this? Well, he basically argues. And you saw an analogous argument. How did they do this? And how does Stanovich do it elsewhere? They basically argue, ah, when we test for people's ability to zero in on relevant information, how can we test to see how well people deal with computational limitations? (encircles computational limitations) So what Stanovich basically argues is—and again, myself and Leo Ferraro argued this in a convergent fashion, other people, right? Basically what we're testing when we're testing people's intelligence is we're testing their capacity to deal with computational limitations. And this lines up again—also, you've heard me mention this, that measures of intelligence correlate with measures of working memory and blah, blah, blah. I've given you all that argument. Stanovich is giving you an additional argument here. He's saying, okay. So we understand intelligence as the capacity to deal with computational limitations (indicates Computational limitations) that's a negative way of putting it. I would say it's the capacity to do relevance realization, (indicates Relevance) but let's keep going. And then we have a way of, therefore, measuring people's capacity to deal with computational limitations.

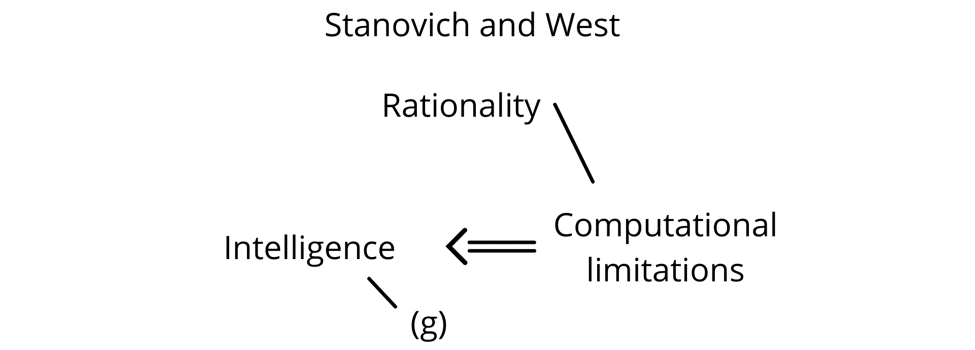

So Cherniak is saying people fail in the experiment because of computational limitations, they're in the finitary predicament. And we have a way of measuring how well people can deal with computational limitations: that's intelligence. (encircles Intelligence) We have a way of measuring g (Fig. 7b) (writes (g) below Intelligence) —reliable, robust way of measuring g.

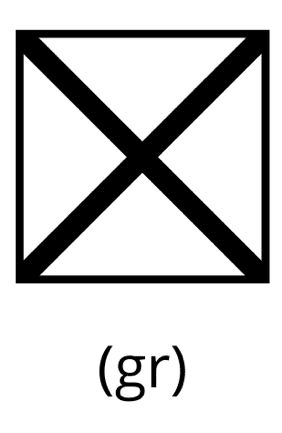

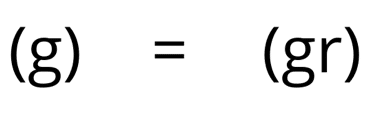

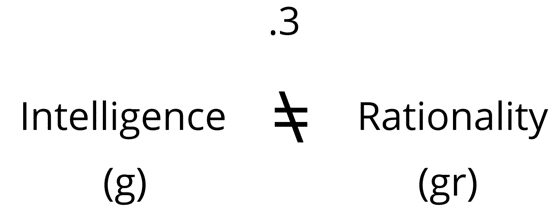

So now, notice what we can do, again, so brilliant (indicates Stanovich & West). (writes (g)) We have reliable ways of measuring g. Remember what Stanovich and West showed in answer to Cohen: that all of the reasoning tasks (draws a square with an x inside) also form a strong positive manifold. They don't label it, but I'm going to call it gr. (Fig. 8a) (writes gr under the square) There's this general factor of reasoning because the reasoning tasks (indicates gr) form a strong positive manifold. Okay. So what we can do is we can measure gr, right? (writes (gr) beside (g) and erases the square labeled with gr) And now we can do something very, very, very basic. If what's happening in the experiment is a measure of rationality and rationality is equivalent to dealing with combinatorial explosion, computational limitations, then these two (Fig. 8b) (writes = between (g) and (gr)) should approach parity. Intelligence and rationality should be identical, right?

So notice what's going on here. If Cherniak is right then rationality and intelligence would be identical and there would be a strong relationship between how intelligent you are and how well you do on these experiments. And this is again good science: reliable, robust, well replicated, covering decades. The relationship here (indicates Fig. 8b) is, at best, 0.3 (writes .3 below (g) = (gr)). So, you know, the correlation goes from zero to one (writes 0-1) where zero is no correlation, and one is a perfect correlation. 0.3 (encircles .3). What this clearly shows is that intelligence is necessary, but nowhere near sufficient for being rational.
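
One way to read the 0.3 figure, offered as a back-of-the-envelope gloss rather than as part of Stanovich and West's argument: the square of a correlation is the share of variance in one measure that is linearly accounted for by the other.

```latex
r = 0.3 \quad\Longrightarrow\quad r^{2} = 0.3^{2} = 0.09
```

On that reading, and assuming a simple linear relationship, a correlation of 0.3 between g and gr means that only about 9% of the variation in reasoning performance lines up with variation in intelligence, which leaves most of it to be explained by something else.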

Okay. So here's two things that are insufficient for making you rational: just being very intelligent (indicates Intelligence) and just being able to use logic (indicates Logic Probability). The science is actually clear on this. So notice how a lot of the ways our culture has tried to understand rationality are now coming into question. Oh, well, rationality is equivalent (Fig. 9) (writes Rationality =) to logicality (writes Logicality). Think of Descartes; now you see why Descartes is wrong. Well, that turns out to be false (draws a slash through =). Oh, well, rationality is the same thing as being really smart, really intelligent (writes Intelligence = beside Rationality), right? Nope. That turns out to be false (draws a slash through =). Oh, what is that then? (writes a question mark below Rationality) What is that then? So now we're starting to do good science, right? We're starting to get away from common sense. We're starting to have some humility. It's now a real problem.

Well, what is it? What is it? And it gives us a way of talking about what we've been talking about—the very processes that make you intelligent (indicates Finitary predicament) can actually cause you to be irrational. There is no contradiction in saying you're highly intelligent and highly irrational, not at all.

So now we have to ask ourselves, well, what's the missing piece? So let's remember that. We've got an important question that we need to ask and answer. What's the missing piece for rationality? What's missing? Something else is going on and that missing piece is going to tell us quite a bit I think about the overlap between wisdom and rationality.

Now there's a third argument (erases the board) and it's an important argument. 'Cause it's also gonna connect to the issue of understanding, which is again, a crucial feature of wisdom. Now, Stanovich and West talk about this argument, but they don't cite an individual who actually came up with the argument explicitly. I don't think there's any fraud. I think they just didn't read it, because the person I'm going to talk about is Smedslund, and the article is from the Scandinavian Journal of Psychology in 1970. (writes Smedslund) So, you know, it's impossible to read everyone, everywhere at all times. Again, we're in the finitary predicament. But Smedslund pointed out something that Stanovich and West do take seriously and because Smedslund makes an explicit and clear argument for it, we should pay attention to what he says.

Fallacy And Misunderstanding

He says, well, there's a difficulty with these. So this is the third response. We've had Cohen, we've had Cherniak and now a response that I will attribute to Smedslund. Stanovich and West don't, but they should, but again, no crime on their part. Okay. Smedslund says, well, there's a difficulty with interpreting the experiments. Again, that's the issue. Always the issue of interpretation and you can't do an experiment to decide the interpretation because then you have to do—then you have to interpret that experiment. You can't experiment your way out of interpretation. Interpretation is always going to be needed in science. And that means theoretical debate is always going to be needed.

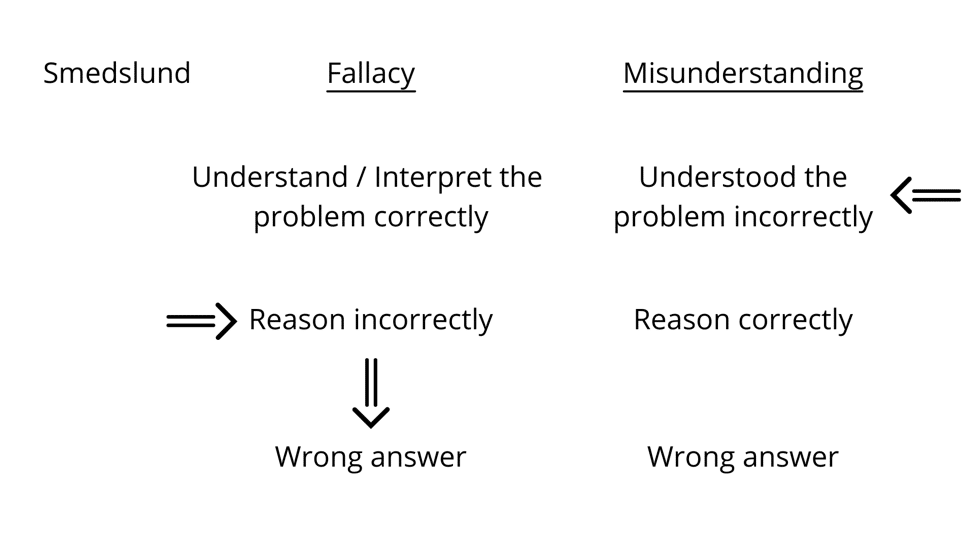

Okay. So back to the theoretical debate, Smedslund says, now, interpreting these experiments relies on a distinction between a fallacy and a misunderstanding (Fig. 10) (writes Fallacy and Misunderstanding). Because there's two ways in which I can give the wrong answer. One is I interpret the problem correctly (writes Interpret the problem correctly below Fallacy). I understand it (writes Understand). But then I reason incorrectly, (writes Reason incorrectly) and that's why I get (draws an arrow from Reason incorrectly) the wrong answer (writes Wrong answer below the arrow). So the fault in a fallacy, fallacious reasoning, is, this is where the error comes in (draws an arrow pointing to Reason incorrectly). The poor reasoning. I reason incorrectly.

But there's another way in which I can give the wrong answer (writes Wrong answer beside Wrong answer). You get to the same conclusion with the wrong answer. Well, what is it? I actually reason correctly (writes Reason correctly above Wrong answer) in a normative fashion, but I've understood the problem incorrectly (writes Understood the problem incorrectly below Misunderstanding). And that's a misunderstanding. Somebody misunderstands us, the error comes in (draws an arrow pointing to Understanding the problem incorrectly) because they've interpreted the problem. They've understood the problem incorrectly. But once you give them that incorrect interpretation, there's nothing wrong with their reasoning. There's nothing wrong with their reasoning.

Okay, great. So there's two places we can get—there's two equally good explanations for why we produce the wrong answers. One is we reason incorrectly and that's a fallacy because we've got the correct interpretation. The other one is we are reasoning correctly. There's nothing wrong with our reasoning. But we've understood the problem incorrectly and that's a misunderstanding. Right? And that's—okay, so this distinction is really crucial (draws a double-headed arrow between Fallacy and Misunderstanding) because this distinction is really key—crucial. Keeping these apart is really crucial because the—if we want to conclude that people are irrational, we have to be attributing to them (draws an arrow pointing to Fallacy) fallacious cognition, not pure—sorry, not—sorry. Oh, wow. Where did pure come?—We have to attribute to them fallacious cognition, not some kind of distortion in the communication that they've misunderstood us. One of the ways in which people typically often avoid self-criticism, avoiding the possibility that they might have reasoned incorrectly, is to always claim that they have merely been misunderstood. Been misunderstood. Look for that in somebody. Look for somebody who never says, "Ah, my argument is wrong. I did it wrong." Look for somebody who always says, "No, no, I've been misunderstood." Because they're basically trading on this in an equivocal fashion. They're bullshitting you in a way. Okay. So—now, sometimes they should say I've been misunderstood. Totally. Sometimes, but sometimes they should say I reasoned wrong.

Okay. Let's get back to this. Okay. So far so good. Right? But then Smedslund said, but this is difficult because these things (indicates Fallacy and Misunderstanding) aren't independent the way we need them to be. What do I mean? The attribution of fallacy or the attribution of misunderstanding are not independent the way we need them to be in order to cleanly interpret the experimental results as showing that people are largely engaging in fallacious reasoning. Well, why?

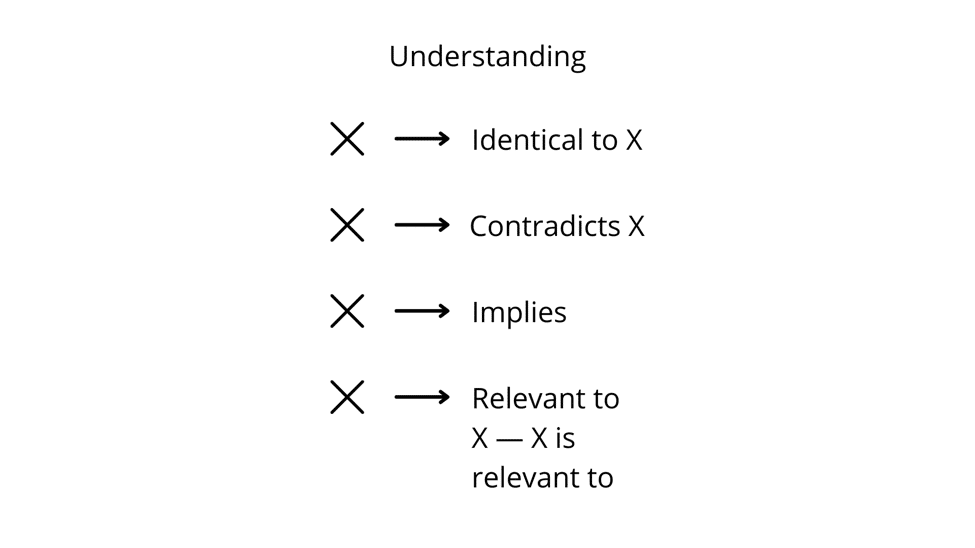

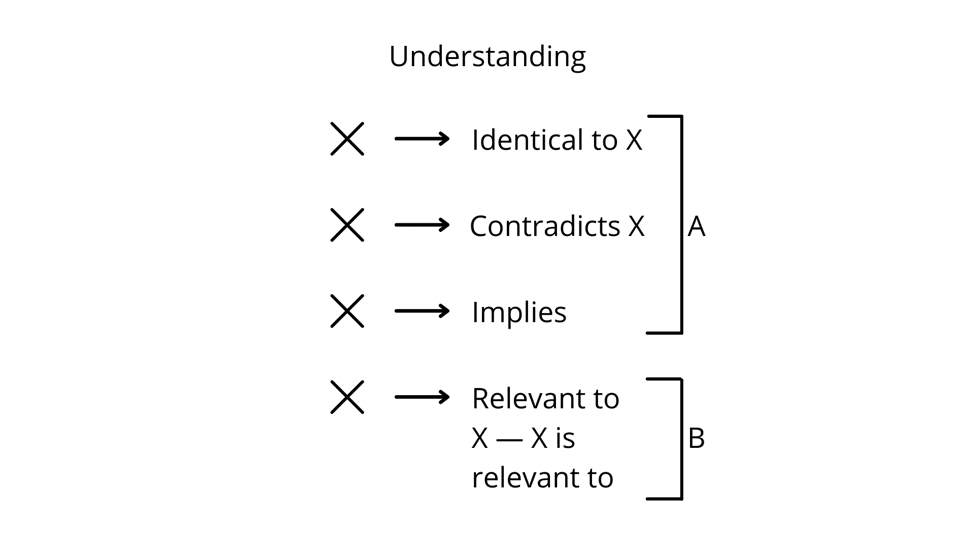

So Smedslund does something, and of course I think it's preliminary and we'll have to come back to it, which is to give a preliminary account of understanding (writes Understanding). He basically asks, well, what is it to understand something? And he says, well, to understand X (Fig. 11a) (writes X), we ask people to give us something that's identical to X (writes Identical). Well, let's use arrows here (draws an arrow from X to Identical). To X. We ask them to give us something that contradicts X (writes X, draws an arrow pointing from X, writes Contradicts X). We ask them to give us things that X implies (writes X, draws an arrow from X, writes Implies). And these are all, of course, related, because identity is a kind of implication. Contradiction is a failure of implication. And then we also ask them to give us things that are relevant to X. Yeah, there it is again. Uh huh. I would also add, by the way, that when you look at further research on understanding, people talk about not only what is relevant to X (writes What is relevant to X) but also what X is relevant to (writes X is relevant to), and I don't think Smedslund would object to that. Here's relevance again, of course.

Okay. So what's the problem? Ah, now, put this one aside. Smedslund just sort of puts it aside in his argument, and, of course, that's something I'm not going to let sit. He puts aside this (draws a bracket to the right of Relevant to X-X is relevant to; labeled A in Fig. 11b). He says, well, ignoring that (indicates the A in Fig. 11b), look at these three (draws a bracket from Identical to X, Contradicts X, Implies; labeled B in Fig. 11b). The way we determine if somebody has understood us is we determine if they have drawn the identities (indicates Identical to X) we would draw, drawn the contradictions (indicates Contradicts X) we have drawn, drawn the implications we've drawn (indicates Implies). So somebody understands us if they reason the way that we do.

So what Smedslund says is that this is what the scientists are assuming. The scientists are assuming that the participants in the experiment have understood the problem correctly (indicates Interpret the problem correctly in Fig. 10) and then reason incorrectly (indicates Reason incorrectly). But notice how this is a pragmatic contradiction, because if they've understood the point correctly, then they reason the way the scientist does (indicates Fig. 11b) in this very difficult task of interpreting a problem. But then they reason in a way the scientist doesn't when they're actually trying to solve the problem. That's problematic. In fact, couldn't I say this: couldn't I say that the fact that the participants in the experiment are consistently producing the wrong answer is good evidence that they are misunderstanding the problem? People are reliably misunderstanding these problems.

"Well, that can't be, because the scientists made them. Scientists can't be making mistakes. Scientists can't misunderstand commu..." What are you attributing to scientists? God-like authority? No, stick with the argument here. Right? You can conclude that they're committing the fallacy, but then you have to say that, for some reason, at this really difficult problem of interpreting what I'm saying, they're reasoning very correctly, and then when they go to solve the problem, they're reasoning poorly. Or you can say they're reasoning correctly, but they've misunderstood the problem. But that also means that they're reasoning poorly, or maybe I've misrepresented or miscommunicated the problem. See, now it's much more problematic.

Normativity On Construal

Okay. So there are two important things to note here. First of all, we've got to come back to this (draws an arrow from Wrong answer to Relevant to X - X is relevant to), because there's an opening here. Stanovich and West, because they haven't read Smedslund and because they don't have this so clearly explicated (indicates Fig. 11b), can't sort of pick up on that. So I'm not criticizing them for not seeing this. But they do come up with a very important point. And this is convergent with their argument against Cohen.

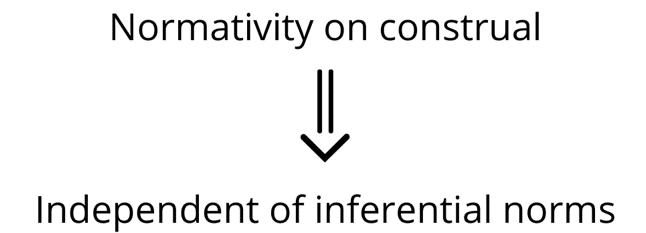

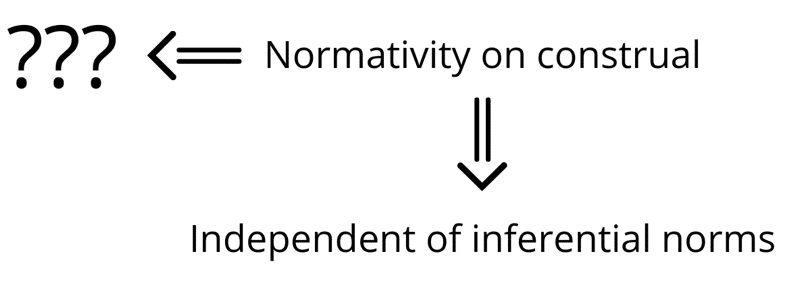

They argue for this. They argue that, in order to break this impasse, we need a normativity on construal (writes Normativity on construal). We need a normativity on how people interpret, make sense of, size up the situation of the problem. That's what construal means. Basically, we need a normativity on how they formulate the problem, and this has to be an independent normativity (draws an arrow from Normativity on Construal and writes Independent of inferential norms). Independent of what? Independent of inferential norms. Right. If I try to use good inference as my standard for doing this (indicates Fig. 12a), I'll fall into this circle. I have to be able to evaluate construal independently of evaluating how people make inferences. That's the only way I'm going to break out of this.

But that's okay, because that means... Stanovich doesn't take this as deeply as he should, but that means that there's a non-inferential aspect to rationality that is central. There's an aspect of rationality that has to do with understanding, with construal that is non-inferential in nature. And that, of course, points back to this (encircles Relevant to X - X is relevant to) because relevance is pre-inferential, the way you formulate your problems—remember that?—has to do with relevance realization. And that is something that is pre-inferential in nature.

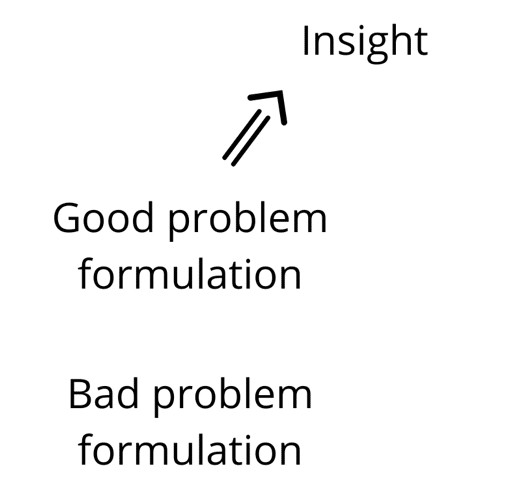

So we can actually put this together very cleanly, I would argue. Now, see, what Stanovich and West say is, okay, we need this normativity on construal (indicates Normativity on construal). It has to be independent of our inferential normativity. And then they go, "Oh, we don't know what this is (Fig. 12b) (draws an arrow from Normativity on construal and writes ???). What could it be? What will that normativity on construal look like?" And it's like, "Oh, right." And that's, like, granted.

But here's a proposal, right? I think it's a proposal that's, sort of, it's clearly presented to us from a lot of the arguments we've already considered. Right? We do have a normativity on construal. We have standards of what a good problem formulation is (Fig. 13) (writes Good problem formulation) versus a bad problem formulation (writes Bad formulation below Good problem formulation). Where do we study that normativity in psychology? Well, we study it in insight problem solving (writes Insight beside Good problem formulation). We know what a bad problem formulation is. A bad problem formulation is one that puts you into a combinatorially explosive search space. A bad problem formulation is one that does not turn your ill-defined problem into a well-defined problem. A bad problem formulation is one in which you are not paying careful attention to how salience is misleading you.

Hmm. So that's telling us something really interesting, right? In addition to inference being crucial to being rational, insight is crucial to being rational too, because if what we mean by being insightful is being good at formulating problems, avoiding combinatorial explosion, avoiding ill-definedness, avoiding salience misdirection, then being insightful is going to be central to being rational. It's not going to be something that comes up out of the irrational aspects of the psyche. It comes in from the non-inferential. But why should we be identifying rationality (and nobody here is) with just a pure logical normativity on inference?

So what I propose to you is that we need to understand the role of both insight and inference in rationality, and that's much more problematic. But we need that because we need to integrate rationality and understanding together in an integrated account. And notice how rationality and wisdom are now starting to overlap more and more for us in a serious way, because if I get rationality and understanding, inference and insight, enmeshed together, and this has to happen in a systematic and reliable way, then, of course, I'm starting to talk more and more about what we mean when we talk about how people are wise. If we give up thinking of rationality as being like Mr. Spock or Mr. Data, and we give up, you know, the idea that being rational is just being really smart, then we start to get into the problematic notion of rationality and we need the idea of multiple competencies. We need the distinction between being logical and being rational, and between being intelligent and being rational. And we have to understand that there's an important component of rationality that is non-inferential in nature. It has to do with a normativity on construal, the generation of insight, not a normativity of argumentation and the generation of inference. Right? And that's important. That's really important (erases the board).

Active Open-Mindedness

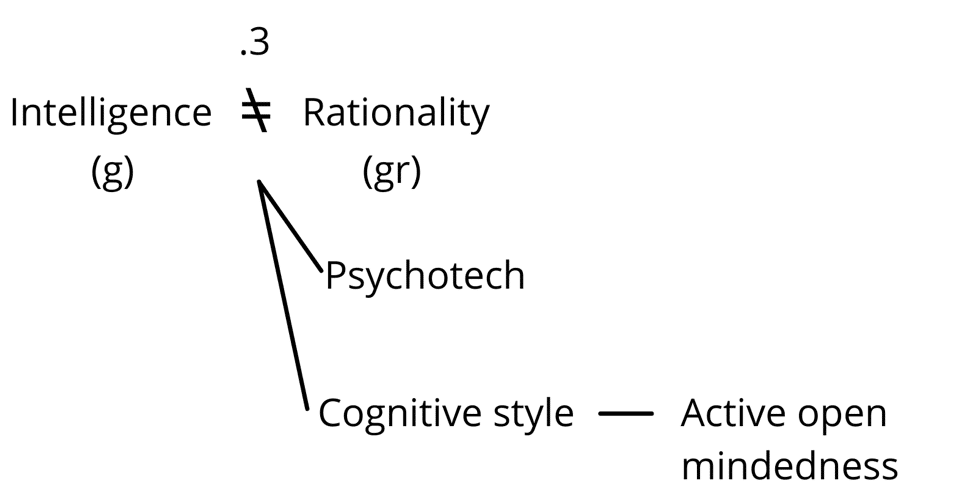

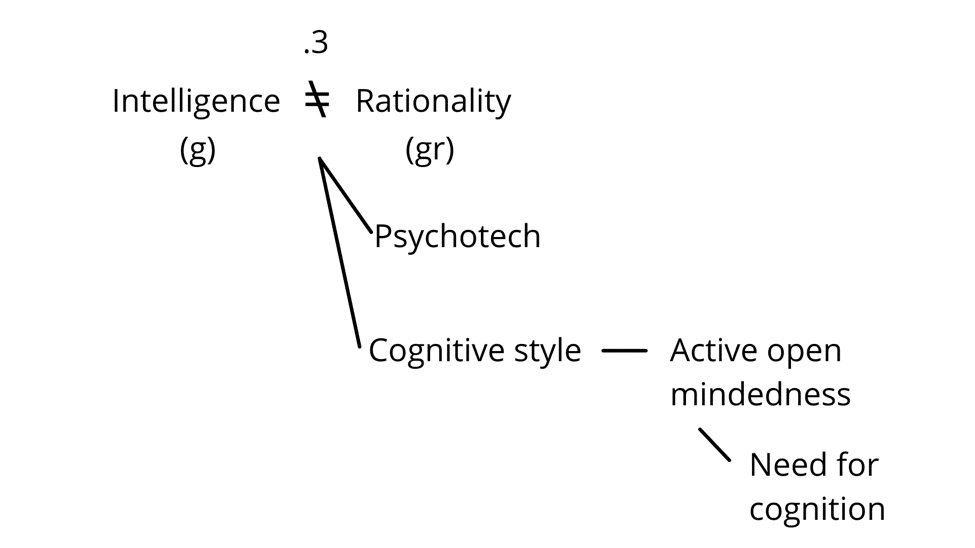

So the issue of construal is acknowledged, but not, in any way, resolved by Stanovich. So it's not going to play, although it should, given his own arguments, a significant role in his theory of rationality. What is that theory? What does it look like? What is the missing piece, according to Stanovich? So we said, right, that intelligence (Fig. 14a) (writes (g) and writes Intelligence above (g)) is not predictive, or is only weakly predictive, of rationality (writes (gr) beside (g) and writes Rationality above (gr)). These are not equivalent (writes ≠ between Intelligence and Rationality). And along the way we got yet another argument for intelligence being relevance realization. Okay. That's all good. What is the missing piece then? The relationship here is only 0.3 (writes .3 above ≠). What accounts for most of the variance then, as a scientist would say?
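
As a rough worked note on why a relationship of 0.3 leaves most of the variance unexplained: this assumes the 0.3 is a Pearson correlation coefficient r between measured intelligence and measured rationality, which the lecture does not state explicitly. Under that assumption, the share of variance in one measure that is linearly accounted for by the other is r squared.

```latex
r = 0.3 \quad\Longrightarrow\quad r^2 = 0.3^2 = 0.09, \qquad 1 - r^2 = 0.91
```

On that reading, intelligence would account for roughly 9% of the variance in rationality scores, leaving roughly 91% to other factors.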

So Stanovich argues very clearly for what he calls a cognitive style. Well, this term is a bit equivocal. It's used in slightly different ways in psychology for different things. And he also invokes the notion of bad mindware, inappropriate psychotech. So that's also in there. So there are sort of cognitive styles and psychotech that can both be part of the missing variance. So one part here is the psychotech you're using (Fig. 14b) (writes Psychotech below (g) and (gr)). You can have poor mindware, as he calls it, which is like software. He's picking up on the psychotech idea here, right?

And then the other, and this is what often gets given more priority because it counts for a lot, is an appropriate cognitive style (writes Cognitive style below Psychotech). The difference between these (indicates Psychotech and Cognitive style) is not as clear. I think Stanovich seems to think it is, so we'll have to come back to that when we come back to the relationship between psychotechnology and wisdom. So what's the cognitive style? A cognitive style is something you can learn, at least as Stanovich is using it. It's to learn a set of sensitivities and skills. Notice the procedural and the perspectival in here, but it's implicit, it's in the background. But what is the cognitive style that's most predictive of doing well on the reasoning tasks? He gets this from Jonathan Baron. This is the notion of active open-mindedness (writes Active Open-mindedness beside Cognitive Style). And when you see this, you're going to see a lot of Stoicism here, and this overlaps a lot with the cognitive behavioral therapy that is derived from Stoicism. And again, that's convergent, that's not by design or deliberate. And that tells you something, something crucial is being seen here.

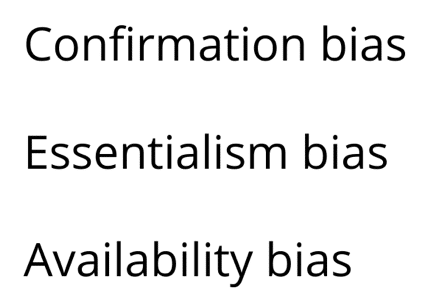

What is active open-mindedness? Active open-mindedness is to train yourself to look for these patterns of self-deception, to look for biases. You've heard me mention some of these (Fig. 15): the confirmation bias (writes Confirmation bias), or the essentialism bias (writes Essentialism bias), or the representationalism, or misusing the availability heuristic (writes Availability bias). Notice that a bias is just a heuristic misused. We've talked about this. So this is (indicates Confirmation bias): I tend to only look for information that confirms my beliefs, right? This is (indicates Essentialism bias): I tend to treat any category as pointing to an essence shared by all the members. Maybe we should give up an essentialism of sacredness, or at least in terms of its content. I've already suggested that to you. The availability bias (indicates Availability bias) is: I judge something's probability by how easily I can remember it or imagine it happening. We've talked about all of these. There are many of these.

So what do I do? First of all, I have to do the science. I learn about all of these (encircles Confirmation bias, Essentialism bias, Availability bias). So this comes from Baron (writes Baron). I want to point out something that Stanovich doesn't say as clearly as Baron does. So what I do is I learn about these (indicates Fig. 15) and I sensitize myself. I sensitize myself, and this is like a virtue because I have to care about the process, not just the results. I sensitize myself to looking for these biases in my day-to-day cognition.

And then I actively counteract them (underlines Active open-mindedness). I actively say, no, no, no, I'm doing confirmation bias. I need to look for potential information that will disconfirm it. And here's where you can now begin to also give up the individualistic assumption of competence. Part of the way in which I can be rationally competent is I can ask you to help me overcome my confirmation bias, because it's very hard for me to look for information that disconfirms my beliefs. It's much easier for you. And then if I practice with you enough, I can start to internalize you and I can start to get better at looking for my own instances where I fall prey to the confirmation bias. So I now actively counteract those. That's sort of where Stanovich leaves it. Baron points out, though, that you shouldn't overdo this. Because if you overdo this, you will start to choke on the tsunami of combinatorial explosion that will overwhelm you. So again, you have to do this and you have to do this to the right degree. And that becomes much more nebulous. And again, we're starting to shade over into wisdom, right?

So what you should then ask is what predicts this. If intelligence predicted rationality, then being intelligent would predict how well you've cultivated active open-mindedness. But of course it doesn't. So what is it about people that predicts how well they will cultivate active open-mindedness? It is the degree to which people have a need for cognition (Fig. 16) (writes Need for cognition below Active open-mindedness). These are people who problematize things. They create problems. They look for problems. They go out and on their own try to learn.

I would add to that. There are two aspects to need for cognition. There is a curiosity in which I need to have more information so that I can manipulate things more effectively. And that's important: people who are, in that sense, more curious and want to solve problems, not just gather facts, but solve problems, because that's what need for cognition points to. That's important. But also think about how important wonder is, right? How much it opens you up to putting into question your entire worldview, your deeper sense of identity? A deep need for cognition. And that's relevant too, because rationality is ultimately an existential issue, not just a theoretical, inferential, logical issue.

So what we're going to need to do is come back and look more at Stanovich's account of rationality and some criticisms of it. And then, on the basis of that, because we're already overlapping with it so much, take a look at some of the key theories of the nature of wisdom and try to draw that together into a viable account of wisdom, which we can then integrate with the account of enlightenment.

Thank you very much for your time and attention.

- END -

Episode 41 Notes:

Keith E. Stanovich is Emeritus Professor of Applied Psychology and Human Development, University of Toronto and former Canada Research Chair of Applied Cognitive Science.

Article Mentioned: Individual Differences in Reasoning: Implications for the Rationality Debate

Laurence Jonathan Cohen, FBA, usually cited as L. Jonathan Cohen, was a British philosopher.

Christopher Cherniak is an American neuroscientist, a member of the University of Maryland Philosophy Department. Cherniak’s research trajectory started in theory of knowledge and led into computational neuroanatomy and genomics.

Herbert Alexander Simon was an American economist, political scientist and cognitive psychologist, whose primary research interest was decision-making within organizations and is best known for the theories of "bounded rationality" and "satisficing".

Bounded rationality is the idea that rationality is limited when individuals make decisions.

Book Mentioned: Models of Bounded Rationality: Empirically Grounded Economic Reason Volume 3

Jonathan Miller Baron is a Professor Emeritus of Psychology at the University of Pennsylvania in the science of decision-making.

Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms or supports one's prior beliefs, prejudices or values.

The availability heuristic, also known as availability bias, is a mental shortcut that relies on immediate examples that come to a given person's mind when evaluating a specific topic, concept, method or decision.

Book Mentioned: Biased

Book Mentioned: The 25 Cognitive Biases: Understanding Human Psychology, Decision Making & How to Not Fall Victim to Them

Book Mentioned: Cognitive Biases: Your Guide To Rationality: A pocket reference book