Ep. 28 - Awakening from the Meaning Crisis - Convergence To Relevance Realization

(Sectioning and transcripts made by MeaningCrisis.co)


Transcript

Welcome back to Awakening from the Meaning Crisis. So we have been looking at the Cognitive science of intelligence, we've been looking at the seminal work of Newell and Simon, and we've seen how they are trying to create a plausible construct of intelligence. They're drawing many different ideas together into this idea of intelligence as the capacity to be a General Problem Solver. And they're doing a fantastic job of applying the Naturalistic Imperative, which helps us to avoid the Homuncular Fallacy because we're trying to analyse, formalise and mechanise our explanation of intelligence, explaining the mind, ultimately, in a non-circular fashion by explaining it in non-mental terms. And this will also hopefully give us a way of re-situating the mind within the scientific worldview. We saw that at the core of their construct was the realization, via the formalization and the attempted mechanization, of the combinatorially explosive nature of the problem space, and of how crucial relevance realization is: how somehow you zero in on the relevant information. They proposed a solution to this that has far-reaching implications for our understanding of meaning cultivation and of rationality. They proposed the distinction between heuristic and algorithmic processing, and the fact that most of our processing has to be heuristic in nature. It can't pursue certainty. It can't be algorithmic. It can't be Cartesian in that fashion. And that also means that our cognition is susceptible to bias. The very processes that make us intelligently adaptive, that help us to ignore the combinatorially explosive amount of options and information available to us, are the ones that also prejudice us and bias us so that we can become self-deceptively misled. This was a powerful…/ They deserve to be seminal figures in that they exemplify how we should be trying to do Cognitive science. And they exemplify the power of the Naturalistic Imperative.

But there were serious shortcomings in Newell and Simon's work. They themselves fell prey to a Cognitive heuristic that biased them. This is something we should remember even as scientists, since the scientific method is itself a psycho-technology designed to help us deal with our proclivities towards self-deception. They were making use of the essentialist heuristic, which is crucial to our adaptive intelligence. It helps us find those classes that do share an essence and therefore allow us to make powerful predictions and generalizations. Of course, the problem with essentialism is precisely that it is a heuristic. It is adaptive, so we are tempted to overuse it, and that will make us mis-see the many categories that do not possess an essence. As Wittgenstein famously pointed out, think of the category of game, or chair, or table. Newell and Simon thought that all problems were essentially the same, and that therefore how you formulate a problem is a rather trivial matter. Because of that, they were blinded to the fact that not all problems are essentially the same, that there are essential differences between types of problems, and therefore that problem formulation is actually very important. This is the distinction between well-defined problems and ill-defined problems, and I made the point that most real-world problems are ill-defined problems. What's missing in ill-defined problems is precisely the Relevance Realization that you get through a good problem formulation.

We then went into the work in which Simon himself participated, the work of Kaplan and Simon, to show that this self-same Relevance Realization, through problem formulation, is at work in addressing combinatorial explosion. We took a look at the problem of the mutilated chessboard: if you formulate it as a covering strategy, you will get into a combinatorially explosive search, whereas if you formulate it as a parity strategy, if you make salient the fact that the two removed squares are the same colour, then the solution becomes obvious to you and very simple to do. Since every domino must cover one square of each colour, a board with unequal numbers of each colour cannot be covered. Problem formulation helps you avoid combinatorial explosion and helps you deal with ill-definedness, and this process by which you move from a poor problem formulation to a good problem formulation is the process of insight. This is why, as we have seen throughout, insight is so crucial to you being a rational cognitive agent. And that means that, in addition to logic being essential for our rationality, those psycho-technologies that enhance our capacity for insight are also crucially important, indeed indispensable.
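The difference between the two framings can be made concrete. Below is a minimal Python sketch, assuming the standard version of the puzzle (two diagonally opposite corners removed from an 8x8 board, to be covered by 31 dominoes); the function names and the choice of calling even squares "white" are illustrative. Instead of searching through domino placements, as the covering framing would, it only counts colours, as the parity framing does.

```python
# Parity framing of the mutilated chessboard: count colours instead of searching.
# Squares with an even (row + col) are called "white" here; the naming is arbitrary.

def count_colours(removed):
    """Return (white, black) counts for an 8x8 board with `removed` squares taken out."""
    white = black = 0
    for row in range(8):
        for col in range(8):
            if (row, col) in removed:
                continue
            if (row + col) % 2 == 0:
                white += 1
            else:
                black += 1
    return white, black


def passes_parity_check(removed):
    """Every domino covers one white and one black square, so equal counts are
    necessary for a covering. (Equal counts alone do not guarantee one exists.)"""
    white, black = count_colours(removed)
    return white == black


opposite_corners = {(0, 0), (7, 7)}
print(count_colours(opposite_corners))        # (30, 32)
print(passes_parity_check(opposite_corners))  # False: no covering, no search needed
```

The point of the sketch is how little work the good formulation requires: 64 cheap checks replace a search through the many ways of placing 31 dominoes.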

We know that in insight Relevance Realization is recursively self-organizing, restructuring itself in a dynamic fashion. So insight, of course, is not only important for changing ill-definedness. That is what problem formulation, or as I'll later call it, problem framing (writes 'P.F. - Problem Framing' as a heading on the board), is doing: it's doing this (writes 'ill-defined -> well defined' on the board). It's also doing this: it's helping me avoid combinatorial explosion (writes 'avoid C.E.' on the board). But it's also doing something else, something that we talked about and saw already with the nine dot problem. It's helping you to overcome the way in which your Relevance Realization machinery is making the wrong things salient and obvious for you. So insight is also the way in which this process is self-corrective (writes 'self corrective' on the board): the way in which the problem formulation process corrects itself, because you can mislead yourself and be misdirected.

So insight deals with converting ill-defined problems into well-defined problems by doing problem framing, problem formulation, or reframing when needed. It helps us avoid combinatorial explosion by doing problem formulation or reframing, as with the person who shifted from a covering strategy to a parity strategy in the mutilated chessboard. It also helps us correct how we inappropriately limit our attempts to solve a problem by what we consider salient or relevant. It allows us to reformulate, reframe and break out of the way we have boxed in our cognition and our consciousness. So insight is crucial in the cultivation of wisdom.

I want to go on now… What we're seeing is this: we're trying to understand intelligence, and we're seeing many things converging on the idea of being a general problem solver (draws several converging lines). And then, once we get this notion of trying to come up with a general problem solver, we see many different things within it feeding into this issue of Relevance Realization as what makes you capable of being a General Problem Solver. So we have many things feeding into this (GPS), and then we're analyzing it (has written RR at the convergent point, with GPS above it, each with its own lines in and out, and a line labelled 'analysing' connecting the two). So this (GPS) has tremendous potential. I'm trying to show you a plausibility structure: many converging lines of how we measure, investigate and talk about intelligence lead to understanding intelligence as a General Problem Solver, and then we're starting to see that many of the lines of investigation are converging to show that what makes you generally intelligent is your capacity for Relevance Realization. I want to continue this convergence. I want to make it quite powerful for you.

Categorising

So presumably one of the things that contributes, as I mentioned, to your capacity for being a general problem solver is your capacity for categorizing things. I already alluded to that when I discussed this issue last time. Your ability to categorize things massively increases your ability to deal with the world. If I couldn't categorize things, if I couldn't see these both as markers (holds up two markers), I would have to treat each one as a raw individual that I'm encountering for the first time, kind of like we do when we meet people and refer to them with proper nouns. So this would be Tom, and this would be Agnes, and meeting Tom doesn't tell me anything about what Agnes is going to be like. We talked about this when we talked about categorical perception. But if I can categorize them together, I can make predictions about how any member of this category will behave. It massively speeds up my ability to make predictions and to abstract important and potentially relevant information. It allows me to communicate: I can communicate all of that categorical information with a common noun, "marker". Your ability to categorize is central to your ability to be intelligent.

So what is a category? A category isn't just any set of things. A category is a set of things that you sense belong together. Now, we noted last time that your sense of things belonging together isn't necessarily because they share an essence. That's the common mistake, right? So how is it that we categorize things? How does this basic ability, central to our intelligence, operate? I'm not going to try and fully explain that; I don't know anyone who can do that right now! All I need to do is show you, again, how this issue of relevance realization is at the centre. The standard explanation, the one that works from common sense, is the one you see on Sesame Street! You give the child four things (places out three markers and a board cleaner): "three of these things, they're kind of the same. One of these things is not like the others, three of these things are kind of the same…". And you have to pick out the odd one, right? So these go together, and this one doesn't. These are categorized as markers… That's the Sesame Street explanation. And what's the explanation? I noticed that these are similar, right? I noticed that this one is different. I mentally grouped together the things that are similar. I kept the things that are different mentally apart. And that's how I form categories! Isn't that obvious?

Differentiating Between Logical And Psychological Similarity

Well again, explaining how it becomes obvious to you, and how you make the correct properties salient, is the crucial thing. Why? Well, this was a point made famous by the philosopher Nelson Goodman. What a great name, Goodman! Goodman pointed out that when we invoke 'similarity' and how obvious it is, we're often equivocating (I'm going to use our language here; I think this is fair) between a psychological sense and a logical sense, and in that way we deceive ourselves into thinking that we're offering an explanation. So what do I mean? Well, what does similarity mean in a logical sense? Remember the Sesame Street example: "kind of the same". Similarity is Partial Identity: kind of the same. Okay, what does Partial Identity mean? Well, you share properties, you share features, and the more features you share, the more identical you are, the more similar you are. There you go! Okay, well, that's pretty clear! But once you agree with that, Nelson Goodman is going to say, "well, now you have a problem, because any two objects are, logically, overwhelmingly similar!"

Pick any two objects; I would say a bison and a lawnmower! All I have to do is list properties that they share in common. Well, they're both found in North America. Neither one was found in North America 300 million years ago. Both contain carbon. Both can kill you if not properly treated. Both have an odour. Both weigh less than a ton. Neither one makes a particularly good weapon. In fact, the number of things that I can truly say are shared by a bison and a lawnmower is indefinitely large. It's combinatorially explosive. It goes on and on and on and on. And this is Goodman's point: they share an indefinitely large number of properties. Now what I imagine you're saying is, "yeah, that's all true..." (I didn't say anything false! Notice how truth and relevance aren't the same thing), "but those aren't the important properties, you're picking trivial and..." Notice what you're doing. You're telling me that I haven't zeroed in on the relevant properties, the ones that are obvious to you, the ones that stand out to you as salient.
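Goodman's point about logical similarity can be sketched in a few lines of Python. This is a toy, assuming similarity is scored simply as the number of shared true properties; the property lists and the weights (0.9 tonnes for the bison, 0.05 for the lawnmower) are hypothetical placeholders. Because a trivially true family of facts ("weighs less than n tonnes") can be extended without limit, the shared count grows as far as we care to keep listing.

```python
# Logical similarity as partial identity: count the properties two things share.
# Property sets and weights are hypothetical placeholders for illustration.

BISON = {"found in North America", "contains carbon", "has an odour",
         "can kill you if mishandled", "has fur", "eats grass"}
LAWNMOWER = {"found in North America", "contains carbon", "has an odour",
             "can kill you if mishandled", "has an engine", "cuts grass"}


def add_weight_facts(properties, weight_tonnes, limit):
    """Add every true fact of the form 'weighs less than n tonnes', n = 1..limit."""
    facts = {f"weighs less than {n} tonnes"
             for n in range(1, limit + 1) if weight_tonnes < n}
    return properties | facts


def logical_similarity(a, b):
    """Number of shared properties; truth is the only criterion, relevance plays no role."""
    return len(a & b)


for limit in (1, 10, 1000):
    bison = add_weight_facts(BISON, 0.9, limit)
    mower = add_weight_facts(LAWNMOWER, 0.05, limit)
    print(limit, logical_similarity(bison, mower))
# 1 5
# 10 14
# 1000 1004
```

Every added fact is true of both objects, which is exactly why the count is useless as an account of why we group things: nothing in it distinguishes the properties that matter.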

So what you're now doing is moving from a logical to a psychological account of similarity. Psychological similarity is not just any true comparison, but finding the relevant comparisons. And the thing about that is that it doesn't seem to be stable; Barsalou pointed this out. So I'm going to give you a set of things. Is it a category? Okay: your wife, pets, works of art, gasoline, explosive material. Is that a category? Works of art, your children, your spouse, gasoline, explosive material. Is that a category? And you go, "no! They don't share enough in common!". Now here's what I say to you: "There's a fire!". "Oh, right!! All those things belong together now!". Because I care about my wife, my kids and my pets, and fire can kill them! And explosive stuff and flammable stuff is dangerous. Now it forms a category! In one context, not a category, and in another context, a very tight and important category!

Now, the logical sharing has not changed. What's shared psychologically is what properties or features you consider relevant for making the comparison. Out of all of what is logically shared, you zero in on the relevant features for comparison. You do the same thing when you're deciding that two things are different, because any two objects, even objects you think are really the same, also have an indefinitely large number of differences. And when you hold things as different, it's because you have zeroed in on relevant differences: here, for example (indicating the markers and the board cleaner), shape and use are the relevant differences. So at the core of your ability to form and use categories is, again, your capacity for zeroing in on relevant information.
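The claim that the logical sharing stays fixed while the category comes and goes can be illustrated with a toy model. This is not Barsalou's own formalism: the feature sets, the context names, and the rule "a set forms a category when its members share a feature the context makes relevant" are all hypothetical simplifications.

```python
# Context-dependent categories: a toy model, not Barsalou's own formalism.
# Feature sets and context definitions below are hypothetical.

ITEMS = {
    "spouse": {"person", "loved", "matters in a fire"},
    "children": {"person", "loved", "matters in a fire"},
    "pets": {"animal", "loved", "matters in a fire"},
    "works of art": {"artefact", "valuable", "matters in a fire"},
    "gasoline": {"liquid", "flammable", "matters in a fire"},
    "explosives": {"solid", "explosive", "matters in a fire"},
}

# Which features each context makes relevant for comparison.
CONTEXTS = {
    "everyday": {"person", "animal", "artefact", "liquid", "solid"},
    "fire": {"matters in a fire"},
}


def logically_shared(items):
    """Features every item has; this does not depend on the context at all."""
    return set.intersection(*items.values())


def forms_category(items, context):
    """Psychological grouping: do the items share a feature the context makes relevant?"""
    return bool(logically_shared(items) & CONTEXTS[context])


print(logically_shared(ITEMS))            # {'matters in a fire'}, in every context
print(forms_category(ITEMS, "everyday"))  # False
print(forms_category(ITEMS, "fire"))      # True
```

Note that the hard part is hidden in `CONTEXTS`: the sketch simply hands the program a list of relevant features, which is precisely the zeroing in that the lecture argues still needs explaining.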

A Robot With A Problem - The Proliferation Of Side Effects

Okay. Now, one thing that people sometimes say to me when I start talking this way is, "Oh, you know, Darwin, Darwin, Darwin, Darwin…" And we'll talk about Darwin again! Darwin's very important and we'll talk about his work, but what they mean by that is something like: you're doing all this abstract stuff, you know…/ think of concrete survival situations. I've just got to make a machine that can survive, right? And that's just obvious, right? It avoids this and it finds that. Well, first of all, is it? So one of the things a machine has to do, for example, is avoid danger. "Danger, Will Robinson!", right?! Danger. What set of features do all dangerous things share? Don't give me synonyms for danger. I mean, holes are dangerous. Bees are dangerous. Poison is dangerous. Knives are dangerous. Lack of food is dangerous. What do all of those share? And don't say, "well, they lead to the damaging of your tissue". That's what danger is; those are synonyms for danger. What I'm talking about are the causes of danger. What do they share? How do you zero in on them? And you still say, "well, I sort of get that, but still, you know, just moving around the world, finding your food!?" Okay. Well, let's do that. Let's try and make a machine that's going to find food. It's going to deal with that very basic problem. It's going to be a cognitive agent looking for its food.

Now, because it's an electronic machine, a robot, we're going to have it look for batteries. This is an example from Daniel Dennett. So here's my robot (draws on the board): it's mobile, it's got wheels. It's got this appendage for grabbing stuff. It's got all these wonderful sensors. It's got lots of computational power, duggaduggadugga…! So we know what we need to do. In order to make it an agent, a cognitive agent (that's what we've been talking about from the very beginning), it has to be different from something that merely generates behaviour. Everything generates behaviour! This behaves in a certain way, this behaves in a certain way, this behaves in a certain way (indicating various random things around him). What makes you an agent? I mean, this isn't all that makes you an agent, this is a philosophically complex problem, but the crucial thing about what makes you an agent is the following: you can determine the consequences of your behavior (I'm using that term very broadly) and change your behavior accordingly. So this ability to determine the consequences, the effects, of your behavior is crucial to being an agent. So we build a machine that can do that: it can determine the consequences of its behavior.

(Drawing) So here's a wagon. It has a nice handle, and on it is a nice juicy battery. Now, the robot will try and do what you and I do. And this is also a Darwinian thing because, for most creatures, you not only have to find food, you have to avoid being food. And so you don't just eat your food where you first find it. Even powerful predators, like leopards, move their food to another location because it will get stolen, they could get preyed upon, et cetera… You don't eat your food where you first come across it. Fast food restaurants are somewhat of an anomaly, but when you walk into the supermarket, for example, you don't just start eating. You try and take your food to a safer place. You try and share your food with other people, because that's a socially valuable thing to do. That's why, even when you're eating something you don't like, you hand it over: "eew, this tastes horrible! Taste it..." You want to share, right? You want to use food as a way of sharing experience, bonding together.

So the robot is programmed to take its food, the battery, to a safe place, and then consume it. Well, that seems just so simple, right? That's so simple. Well, we have to make this a problem, because we were talking about being a problem solver! And on this wagon is a lit bomb. The bomb is lit, which means there's a very high probability that the fuse will burn down and the bomb will go off! And we put the robot in this situation. Now what does the robot do? The robot pulls the handle, because it has determined that a consequence, an effect, of pulling the handle is to bring the battery along. So it pulls the wagon and brings the battery along, because that's the intended effect. That's the consequence that it has determined is relevant to its goal. But of course the bomb goes off and destroys the robot, and we think, "Oh, what did we do wrong?" What did we do wrong? There's something missing. And then we realize, "ah, you know what? We made the robot look only for the intended effects of its behavior. We didn't have the robot check side effects!". And that's really important, right? Every year this happens: people fail to check side effects. They go into a situation in which they know flammable gas has diffused, but it's dark! And so they strike a match because they want the intended effect of making light. But it has the unintended effect of creating heat, which ignites the gas, which explodes and harms or kills them.

So we say, "ah, we have to have the machine not only check the intended effects. It has to check the side effects of its own behavior." Okay, so what we're going to do is give it more computational power, right? It's going to have (draws) more sensors, way more sensors, way more computational power. And we're also going to put a black box inside it, like they do in an airplane, so that we can see what's going on inside the robot. And then we're going to put it into this situation (as above), because this is a great test situation: once we solve this simple Darwinian problem, we'll have a basically intelligent machine. So we put it in this situation. And it comes up to the wagon and then… nothing happens! It doesn't do anything! And we go, "WHAT???" The bomb goes off! Why didn't it just move away from the wagon, or why didn't it try to lift the battery off? Well, we take a look and we find that the robot is doing what we programmed it to do! It's trying to determine all of the possible side effects. So it's determining that if it pulls the handle, that will make a squeaking noise. If it pulls the handle, the front left wheel will go through 30 degrees of arc, and the front right wheel will go through 30 degrees of arc. The back wheels, same way: back left wheel, back right. There'll be a slight wobbling and shifting in the wagon. The grass underneath the wheels is going to be indented. The position of the wagon with respect to Mars is being altered… Do you see what the issue is here? The number of side effects is combinatorially explosive. Oh crap!!!

So what do we do? Well, we think, "we'll give it..." This is something that I'm going to argue later we can't do: we come up with a recipe, a definition of relevance. Nobody knows what that is. In fact, I'm going to argue later that it's actually impossible, and that's going to be crucial for understanding our response to the Meaning Crisis. But let's grant the possibility: we have a definition of relevance, and what we'll do is have the robot determine which side effects are relevant or not. Oh, so that's great. So we add that new ability here (draws into the new robot). We give it some extra computational power. We put it in here (same situation as above again), and it goes up to the wagon and the battery, and it doesn't do anything; it just sits there calculating, and the bomb goes off! What's going on? We look inside, and what we notice is that it's making two lists! (Mocks creating the two lists.) Here's the wheel turning: it judges, "That is irrelevant". Oh, here's the change in position relative to Mars: "it's irrelevant". And it's making a list, and this list is going, and it's correctly labelling each one of these as irrelevant, but the list keeps going and going and going!

See, this is going to sound like a Zen Koan: you have to ignore the information, not even check it.../ See, Relevance Realization isn't the application of a definition. It is somehow intelligently ignoring all the irrelevance and somehow zeroing in on the relevant stuff, making it salient, standing out, so that the actions that you should do are relevant to you, are obvious to you. This is the problem of the Proliferation of Side Effects in behavior, in action. This is called the Frame Problem. Now, there are different aspects of the frame problem. One was a technical aspect, a logical aspect of doing computational programming, and Shanahan, I think, is correct that he and others have solved that technical problem. But what Shanahan himself argues is that once you've solved that technical version of the frame problem, a deeper problem remains. And he calls this deeper version of the frame problem the Relevance Problem. He happens to think that consciousness might be the way in which we deal with this problem. We'll talk about that later. Many people are converging on the idea that consciousness and related ideas like working memory have to do with our ability to zero in on relevant information. But let's keep going…
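The third robot's predicament can be sketched in Python. This is only a toy under stated assumptions: the stream of side effects, the one-line `is_relevant` rule standing in for a "definition of relevance", and the `budget` cap are all hypothetical, and the cap exists only so the program halts. Even though the one relevant side effect turns up first, a robot that must finish labelling before it acts never gets to act: with an unbounded list, almost all of its effort goes into certifying irrelevance.

```python
# A toy version of the third robot: it labels side effects one by one using a
# stand-in "definition of relevance". Everything here is hypothetical.
from itertools import count, islice


def side_effects_of_pulling_handle():
    """An unbounded stream of true side effects of pulling the wagon's handle."""
    yield "bomb moves along with the battery"
    yield "handle squeaks"
    yield "front left wheel turns through 30 degrees"
    yield "grass under the wheels is indented"
    for n in count(1):
        # The wagon's position relative to Mars, the next star, and so on, changes.
        yield f"position relative to distant object #{n} changes"


def is_relevant(effect):
    """Stand-in definition of relevance: anything involving the bomb matters."""
    return "bomb" in effect


def label_side_effects(effects, budget):
    """Label effects until the budget runs out. With an unbounded stream it is the
    budget, not the task, that stops the loop: the list is never complete."""
    relevant, irrelevant = [], 0
    for effect in islice(effects, budget):
        if is_relevant(effect):
            relevant.append(effect)
        else:
            irrelevant += 1
    return relevant, irrelevant


for budget in (10, 10_000):
    relevant, irrelevant = label_side_effects(side_effects_of_pulling_handle(), budget)
    print(budget, relevant, irrelevant)
# 10 ['bomb moves along with the battery'] 9
# 10000 ['bomb moves along with the battery'] 9999
```

Raising the budget only lengthens the list of correctly labelled irrelevancies, which is the sense in which adding computational power does not help.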

Communication Problems

Because what about communication? Isn't that central to being a general problem solver? You bet! Especially since most of my intelligence is my ability to coordinate my behavior with myself and with others. Communication is vital to this. We see this even in creatures that don't have linguistic communication; social communication makes many species behave in a more sophisticated fashion. And I already mentioned to you that there's a relationship between how intelligent an individual is and how social the species is. It's not an algorithm; there seem to be important exceptions, like the octopus. But in general, communication is crucial to being an intelligent cognitive agent. Let's use linguistic communication as our example, because that way we can also bring in the linguistics that's in cognitive science. So the point is, when you're using language to communicate, you're involved with a very particular problem. This was made really clear by the work of H. P. Grice. He pointed out that you are always conveying much more than what you're saying. It always has to be that way! Communication depends on your being able to convey much more than you say.

Now, why is that? Because I have to depend on you to derive the implications (that's a logical thing) and also what he called implicatures (which are not a directly logical thing), in order for me to convey above and beyond what I'm saying. So, for example, I drive up in my car, I put my window down, there's a person on the street, and I say, "excuse me... I'm out of gas!" And the person comes over and says, "Oh, there's a gas station at the corner". And I go, "thank you", and drive away! So notice (let's go through this carefully): I rolled down the window and I just shouted out, "EXCUSE ME!". Okay? Now what would I actually need to be saying to capture everything that I'm conveying? I would have to say.../ I'm shouting this word EXCUSE ME in the hope that anybody who hears it understands that the ME refers to the speaker, and that by saying "excuse me" I'm actually requesting that you give me your attention, understanding, of course, that I'm not demanding that you give me your attention for an hour or three hours or 17 days, but for some minimal amount of time that's somehow relevant to a problem that I'm going to pose that's not too onerous.

"I" (and again, when I'm saying "I", I mean this person making the noises, who is actually the same as the one referred to by this other word, "ME" in 'excuse me') "I'm out of gas". And, of course, I don't mean ME or I, the speaker; I mean the vehicle I'm actually in! I'm not asking you to make me more flatulent! I'm asking you to help me find gasoline for my car! And I'm actually referring to gasoline by this short term, 'gas'. And by saying this, I know you understand that my car isn't completely out of gasoline. There's enough in it that I can drive some relatively close distance to find a source of gasoline.

The other person says, "Oh!". Just by uttering this otherwise meaningless term, I'm indicating that I accept the deal that we have here, that I'm going to give you a bit of my attention, and I understand that it's not going to be too long or too onerous. I can make a statement, seemingly out of the blue, that you will know how to connect to what you actually want, which is gasoline for your car, which is not completely out of gas. I will just say the statement, "there is a gasoline station at the corner!" You will figure out that that means you can drive to it. I'm talking about a nearby corner, somehow relevantly proportioned to the amount of gasoline left in the car; this isn't a corner halfway across the continent! It's a gasoline station that will distribute gasoline for your car. It's not for giving helium to blimps. It's not a little model of a gas station. It's not a gas station that's closed and has not been in business for 10 years! It's a gasoline station that will accept Canadian currency or credit. It won't demand your firstborn or the fruits of your field! And how do you know all of that is going on? Because if any of it is violated, you either find it funny or you get angry. If you say, "excuse me, I'm out of gas" and the person comes up and blows some helium into your car, you don't go, "Oh, thank you! That's, that's what I wanted! I wanted some gas, helium. Yeah!!!" It's ridiculous! If you drive to the corner and there's a gas station that's been out of business for 10 years, you go, "What? What's going on? What's wrong with that person?"

You're always conveying way more than you're saying. Now, notice something else. I tried to explicate what was conveyed; I gave you a whole bunch of sentences to try and explicate what I was conveying. But you know what? Each one of these sentences is also conveying more than it says! And if I tried to unpack what each of them was conveying beyond what it says, I would have to generate a further set of sentences for each of them, and so on and so on. And you see what this explodes into: you can't say everything you want to convey! You rely on people reading between the lines. By the way, that is actually what this word means! At least one of the etymologies of "intelligence" is "inter-legere", which means to read between the lines. So what did Grice say we do? Well, we follow a bunch of maxims. We assume that when we're trying to communicate there's some basic level of cooperation (I don't mean social cooperation, just communicative cooperation), and we assume that people are following some maxims.

So you're at a party, and you ask me, "well, how many kids do you have?", and I say, "Oh, I have one. I have one kid." "Oh, okay." And then later on, somebody asks me the same question and you overhear me: "How many kids do you have?" "Oh, I have two. I have two sons." And you go, "What?", and you come up to me and say, "what's wrong with you? Why did you lie?" "What? I didn't lie. If I have two kids, I necessarily have one child. I didn't say anything false saying I have one kid!" And you'll just say, "what an asshole!". Because I didn't provide you with the relevant amount of information. I didn't give you the information you needed in order to pick up on what I was conveying. I spoke the truth, the logical truth. But I didn't speak it in such a way (and this is, again, why you can't be perfectly logical) that I aided you in determining what the relevant conveyance is.
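The gap between what is logically true and what is cooperatively conveyed in the party example can be made explicit. A minimal Python sketch, assuming the usual textbook treatment of number words (a literal "at least n" meaning that the maxim of Quantity strengthens to "exactly n" in conversation); the function names are hypothetical.

```python
# Literal truth versus Grice's maxim of Quantity for the claim "I have n kids".
# A toy sketch; the 'at least' vs 'exactly' readings are a simplifying assumption.

def literally_true(claimed, actual):
    """Logical reading: 'I have n kids' is true whenever I have at least n."""
    return actual >= claimed


def meets_quantity_maxim(claimed, actual):
    """Cooperative reading: the hearer infers 'exactly n', so saying less misleads."""
    return claimed == actual


actual_kids = 2
for claimed in (1, 2):
    print(claimed, literally_true(claimed, actual_kids),
          meets_quantity_maxim(claimed, actual_kids))
# 1 True False
# 2 True True
```

Saying "one" passes the logical test and fails the cooperative one, which is exactly why the listener feels deceived without having been told anything false.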

The Four Maxims Of Conveyance In Communication

So Grice said we follow four maxims. (Proceeds to write these on the board.) We assume the person is trying to convey (1) the Truth. And then (2) a maxim of Quantity: they're trying to give us the right amount of information. The first one is actually often called the maxim of Quality (rubs out Truth and replaces it with Quality), though it has to do with truth. And then there's a maxim of (3) Manner. And then there's a maxim of (4) Relevance (Grice calls it Relation: "be relevant"). So basically, we assume that people are trying to tell the truth (1). They're trying to give us the right amount of information (2). They're trying to put it in the kind of format that's most helpful to us in getting what's conveyed beyond what's said (3), and they're giving us relevant information (4). There it is again. Oh, look!! There's the word: Relevance! Then Sperber and Wilson come along, writing a very important book, which I'll talk about later and I have criticisms of, and the book is entitled "Relevance". And what's interesting is that they're proposing this not just as a linguistic phenomenon but as a more general cognitive phenomenon: they argue that all of these (1, 2 & 3) actually reduce to the one maxim: be relevant.

Okay, so Manner: what is it to be helpful to somebody? Well, it's presenting the information in a way that's helpful to them. What you do is try and make salient and obvious what is relevant. Okay. That was easy! Quantity: give the relevant amount of information. What about this one (Quality)? And you say, "ah, John, I got ya! You can't reduce this one, because this is truth. And you have been hitting me over the head since the beginning of this series with the point that truth and relevance are not the same thing!" You're right. So what do Sperber and Wilson do about that? Well, they do something really interesting. They say, "we don't actually demand that people speak the truth, because if we did, we're screwed, because most of our beliefs are false! What are we actually asking people to do? We're actually asking people to be honest or sincere. That's not the same thing." You're allowed to say what you believe to be true, not what is true. Okay. "So what?" you say. Well, that means the maxim is actually 'be sincere'.

What does sincere mean? "Well, convey what's in your mind." Everything that's in your mind? Everything that's going [on]? So when you ask me, "How many kids do you have?", I've got all this stuff going on in my mind: this marriage is failing, what am I going to do to take care of these kids, I love this kid, but it's okay... all of that and (gestures a head exploding from so much related content) oh man! Have you ever been trapped at a party with somebody who's getting drunk and talks like that? It's horrible! You're trapped! You say one [thing], "Do you have any kids?", and you're trapped for three hours! So that's not what we mean. We don't mean, "Tell me everything that's in your mind right now. Convey it all to me, John, give it all to me!" That's not what we mean. What do we mean? We mean convey what is relevant to the conversation or context: out of all of the possible implications and implicatures, zero in on those that you might think are relevant to me, to our conversation and to the context. So that also reduces to relevance.

The Relevance Problem

(Proceeds to work through this in detail on the board)

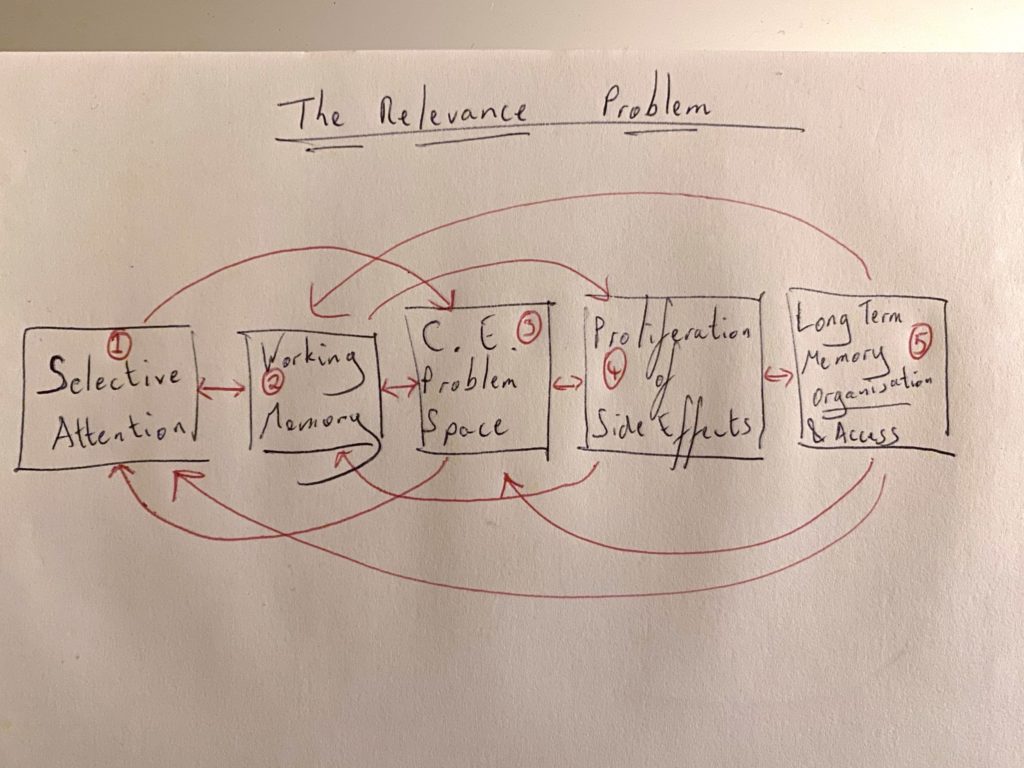

So at the core of your ability to communicate is your ability to realize relevant information. Notice what I'm doing here. I'm doing this huge convergence argument again and again and again: what's at the core of your intelligence (draws converging lines like in the construct of Synoptic Integration)? What's at the core of your intelligence, again and again and again, is your capacity for relevance realization (writes R.R. where the lines converge). It's even more complicated than this! (Wipes board clean.) [-] Remember this: all of the information available in the environment is overwhelming, combinatorially explosive! You have to selectively attend to some of it. So this is doing relevance realization: Selective Attention (1). And then you have to decide what to hold in Working Memory (2), what's going to be important for you. There's Lynn Hasher's excellent work showing that working memory is about trying to screen relevant from irrelevant information. You're using this (Working Memory) in your problem solving, and here is where you are trying to deal with the Combinatorial Explosion in the Problem Space (3), all that stuff we talked about. It's also interacting with the Proliferation of Side Effects (4) when you try to act, like we saw with the robot and the battery. So you're trying to select: what do I hold in mind? How do I move through the problem space? Once I start acting, which side effects do I pay attention to? Which ones do I not pay attention to? And all of that has to do with, out of all the information in my Long Term Memory, how do I organize it? How do I categorize it? How do I improve my ability to access it? Long Term Memory Organisation and Access (5) is dependent on your ability to zero in on relevant information.

And this, of course, feeds back to here (5 → 3). This feeds back to here (5 → 2). This feeds back to here (5 → 1).

These are interacting (2 & 4); these are interacting (1 & 3).

This is the Relevance Problem. That (what he's detailed on the board)! That's the problem of trying to determine what's relevant. And it's at the core of what makes you intelligent!

Now, why does that matter? What I'm trying to show you is how deep and profound this construct is. This idea of Relevance Realization is at the core of what it is to be intelligent. And we know that this isn't just cold calculation. Your Relevance Realization machinery has to do with all the stuff we've been talking about: salience, obviousness; it's about what motivates you, what arouses your energy, what attracts your attention. Relevance Realization is deeply involving. It's at the guts of your intelligence, your salience landscaping, your problem solving. Okay, so what do I want to do? What I want to do is the following: I want to propose to you that we can continue to do this (draws the convergence argument diagram: 5 lines converging to RR). I can show you how all of this is... I could do more! I could show more about how it's all converging on this. Then I want to do two things. First, I want to try to show how we might be able to analyse, formalise and mechanise this (writes these words on the convergence diagram) in a way that could help explain how our consciousness, our cognition, our attention, our access to long term memory... how all of that is coordinated and working. Then what do I want to do with this? I want to try to show you how we can use Relevance Realization in a multi-apt fashion (draws lines diverging out of RR in a similar way to the Synoptic Integration construct) to try to get a purchase on the things we have been talking about in the historical analysis.

Can we use Relevance Realization, and how it's dynamically self-organizing in this complex [construct] (indicates the Relevance Problem construct on the board)... It's self-organizing within each one of these (1 to 5 individually). Remember I showed you how attention is bottom up and top down at the same time? All of these are powerfully self-organizing, and the whole thing is self-organizing. We know it's self-organizing in insight. Can I use Relevance Realization to explain things that are crucial to wisdom, to self-transcendence, to spirituality, to meaning? That's exactly what I'm going to do. I'm going to use this construct (convergence construct), once I've tried to show you how it could potentially be grounded (down through analysing, formalising and mechanising), building the synoptic integration across the levels, and then do this kind of integration (the multi-apt, diverging lines of the construct).

And think about why this makes at least initial plausible sense. Relevance Realization is crucial to insight, and insight is central to wisdom. Relevance Realization (and you're getting a hint of it) seems to be crucial to consciousness and attention and to altering your state of consciousness. We've already seen it can be crucial to Wisdom and Meaning Making. And that would make sense. Look, isn't it sort of central that what makes somebody wise is exactly their capacity to zero in on the relevant information in a situation? To take an ill-defined, messy situation and zero in? To pay attention to the relevant side effects, the relevant consequences? To pay attention to the important features, to remember the right similar situations from the past? Right? Well, you say, "Okay, I sort of see that. But what about self-transcendence?" Well, we already see that this is a self-organizing, self-correcting process. We already know that there's an element of insight. The very machinery that makes you capable of insight is the machinery that helps you overcome biases, helps you overcome self-deception, and helps you solve problems that you couldn't solve before. We talked about this with Systematic Insight. "Okay... so consciousness, insight, wisdom, but what about meaning? Come on! Like, where's all that?"

Well, here's the proposal (taps the Synoptic Integration construct of Relevance Realisation on the board), right? What we were talking about when we talked about meaning in terms of the three orders (the nomological, the narrative and the normative) was connections that afforded wisdom and self-transcendence. "But what connections?" Well, the connections that were lost in the meaning crisis! The connections between mind and body, the connections between mind and world, the connections between mind and mind, the connection of the mind to itself! These are all the things that are called into question: the fragmentation of the mind itself. [-] And we saw how this, all throughout, had to do with, again, the relationship between salience and truth: what we find relevant, in terms of how it's salient or obvious to us, and how that connects up to reality. And how it connects (remember Plato) parts of us together in the right way, the optimal way. What if what we're talking about when we use this metaphor of meaning is how we find things relevant to us and to each other, parts of ourselves relevant to each other, how we're relevant to the world, how the world is relevant to us? All this language of connection is not, largely, the language of causal connection. It's the language of establishing relations of relevance between things.

Perhaps there's a deep reason why manipulating Relevance Realization affords self-transcendence, wisdom and insight: precisely because Relevance Realization is the ability to make the connections that are at the core of meaning, the very connections that are quintessentially being threatened by the Meaning Crisis. That would mean that if we get an understanding of the machinery of this (Synoptic Integration Construct of RR), we would have a way of generating new psycho-technologies, redesigning and reappropriating older psycho-technologies, and coordinating them systematically in order to regenerate these fraying connections: to relegitimate and afford the cultivation of wisdom, self-transcendence, and connectedness to ourselves, to each other and to the world.

And that's in fact, what I want to explore with you and help explain to you in our next session together on Awakening from the Meaning Crisis. Thank you very much for your time and attention.

Episode 28 Notes

Barsalou

Lawrence W. Barsalou is an American psychologist and a cognitive scientist, currently working at the University of Glasgow. At the University of Glasgow, he is a professor of psychology, performing research in the Institute of Neuroscience and Psychology.

Dennett

Daniel Clement Dennett III (1942–2024) was an American philosopher, writer, and cognitive scientist whose research centered on the philosophy of mind, philosophy of science, and philosophy of biology, particularly as those fields relate to evolutionary biology and cognitive science.

Shanahan

Murray Patrick Shanahan is a Professor of Cognitive Robotics at Imperial College London, in the Department of Computing, and a senior scientist at DeepMind. He researches artificial intelligence, robotics, and cognitive science.

HP Grice

Herbert Paul Grice, usually publishing under the name H. P. Grice, H. Paul Grice, or Paul Grice, was a British philosopher of language, whose work on meaning has influenced the philosophical study of semantics. He is known for his theory of implicature.

Sperber and Wilson

Relevance Theory is a framework for understanding utterance interpretation first proposed by Dan Sperber and Deirdre Wilson and used within cognitive linguistics and pragmatics.

Relevance

Relevance, first published in 1986, was named one of the most important and influential books of the decade by the Times Higher Education Supplement.

Book mentioned: Relevance

Lynn Hasher

Lynn Hasher is a cognitive scientist known for research on attention, working memory, and inhibitory control. Hasher is Professor Emerita in the Psychology Department at the University of Toronto and Senior Scientist at the Rotman Research Institute at Baycrest Centre for Geriatric Care.

Other helpful resources about this episode:

Notes on Bevry

Additional Notes on Bevry